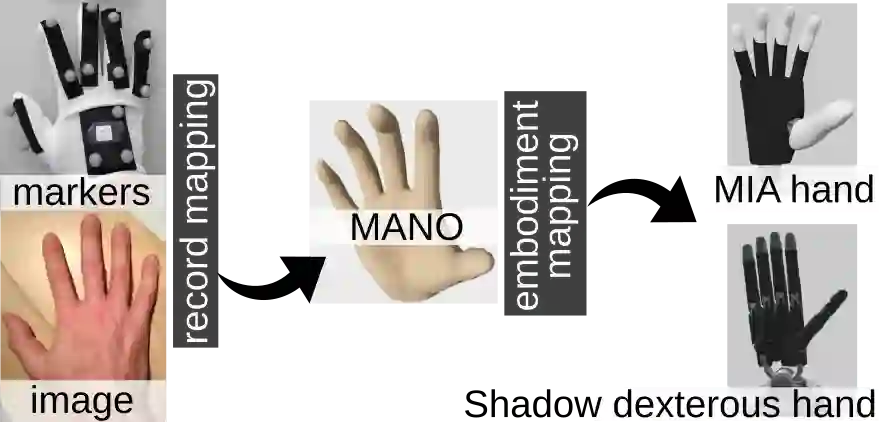

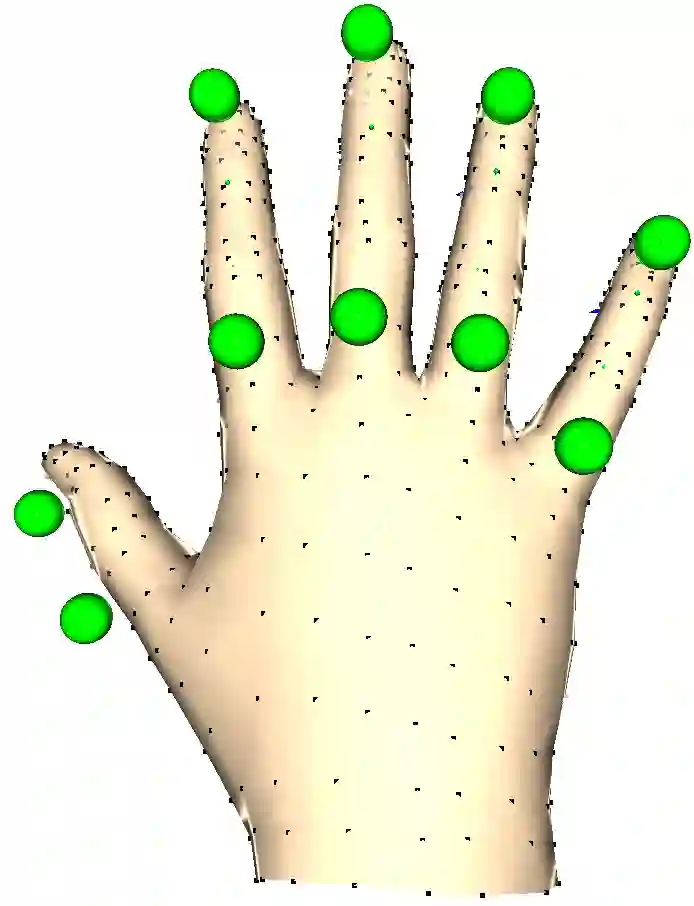

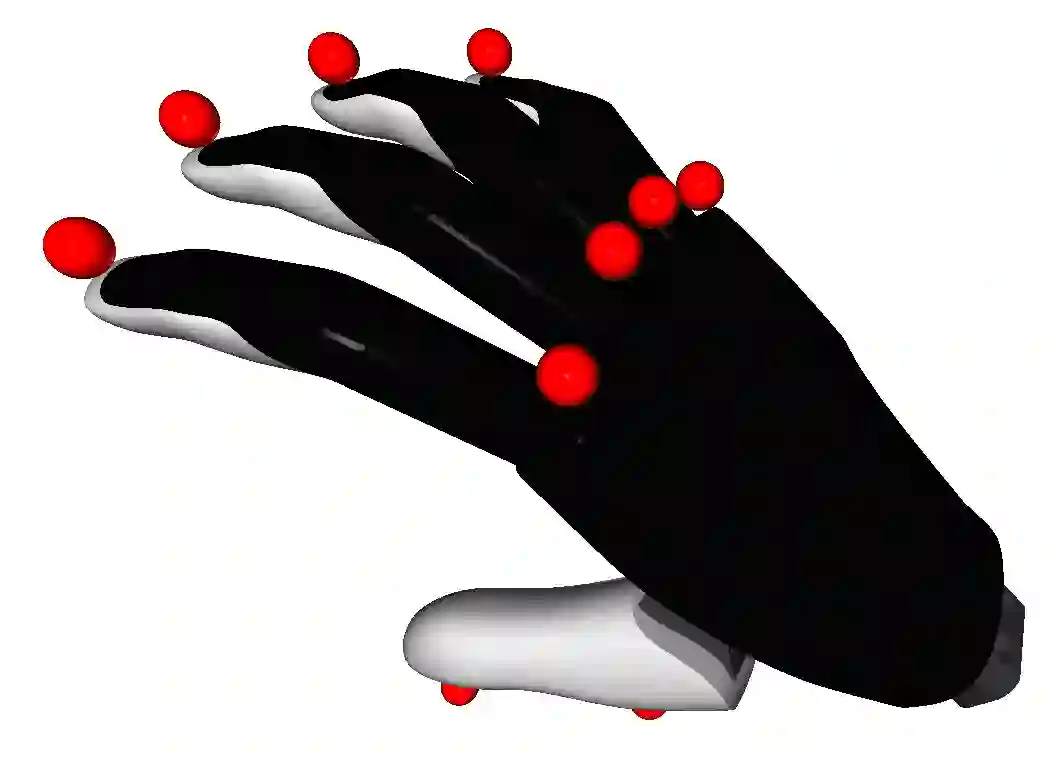

Manipulating objects with robotic hands is a complicated task: both the fingers of the hand and the pose of the robot's end effector must be coordinated. Human demonstrations of movements are an intuitive and data-efficient way of guiding the robot's behavior. We propose a modular framework with an automatic embodiment mapping to transfer recorded human hand motions to robotic systems. In this work, we use motion capture to record human motion. We evaluate our approach on eight challenging tasks in which a robotic hand needs to grasp and manipulate either deformable or small and fragile objects. We test a subset of trajectories both in simulation and on a real robot, and the overall success rates are consistent between the two.
