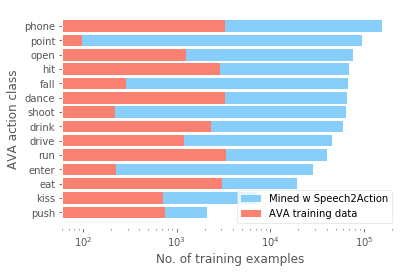

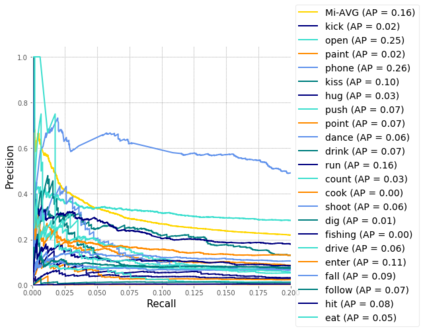

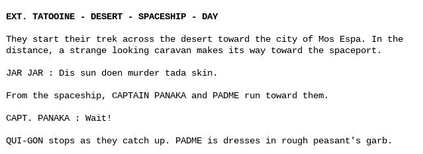

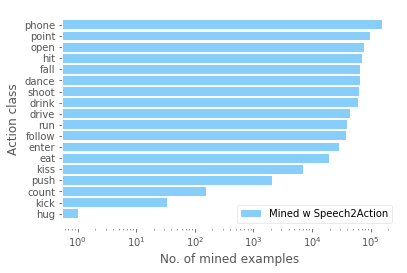

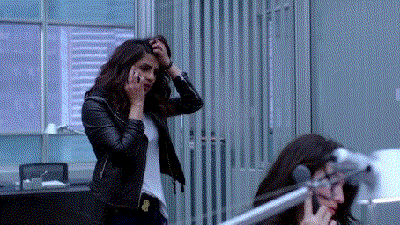

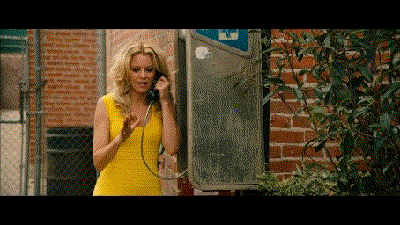

Is it possible to guess human action from dialogue alone? In this work we investigate the link between spoken words and actions in movies. Movie screenplays both describe actions and contain the characters' speech, so they can be used to learn this correlation with no additional supervision. We train a BERT-based Speech2Action classifier on over a thousand movie screenplays to predict action labels from transcribed speech segments. We then apply this model to the speech segments of a large unlabelled movie corpus (188M speech segments from 288K movies). Using the model's predictions, we obtain weak action labels for over 800K video clips. By training on these video clips, we demonstrate superior performance on standard action recognition benchmarks, without using a single manually labelled action example.
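The weak-labelling step described above can be illustrated with a minimal sketch. It is not the authors' implementation: `toy_speech2action` is a hypothetical keyword scorer standing in for the BERT-based classifier, and the `SpeechSegment` type, the label set, and the confidence threshold are all assumptions made for illustration.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class SpeechSegment:
    clip_id: str  # identifier of the video clip the speech belongs to
    text: str     # transcribed speech for that clip


# Hypothetical label set and cue words; a stand-in for a trained classifier.
KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "phone": ("hello", "call"),
    "drive": ("car", "road"),
    "run": ("run", "hurry"),
}


def toy_speech2action(text: str) -> Dict[str, float]:
    """Score each action by the fraction of its cue words found in the text."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return {
        label: sum(cue in words for cue in cues) / len(cues)
        for label, cues in KEYWORDS.items()
    }


def weak_label(
    segments: List[SpeechSegment],
    classifier: Callable[[str], Dict[str, float]],
    threshold: float = 0.5,  # assumed cut-off, not taken from the source
) -> List[Tuple[str, str, float]]:
    """Keep (clip_id, label, score) only where the top score clears the threshold."""
    labelled = []
    for seg in segments:
        scores = classifier(seg.text)
        label = max(scores, key=scores.get)
        if scores[label] >= threshold:
            labelled.append((seg.clip_id, label, scores[label]))
    return labelled


if __name__ == "__main__":
    corpus = [
        SpeechSegment("c1", "Hello, can I call you back?"),
        SpeechSegment("c2", "Nice weather today."),
        SpeechSegment("c3", "Get in the car"),
    ]
    for row in weak_label(corpus, toy_speech2action):
        print(row)
```

In this sketch, clips whose speech gives no confident prediction are simply dropped, so the downstream video model would train only on the retained clips and their predicted labels.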
