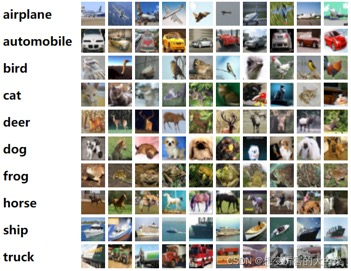

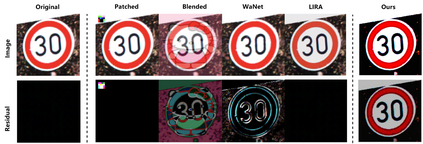

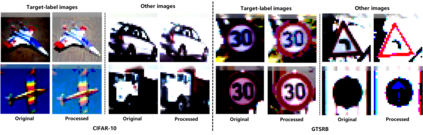

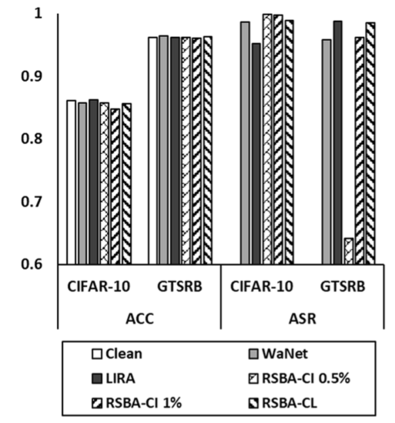

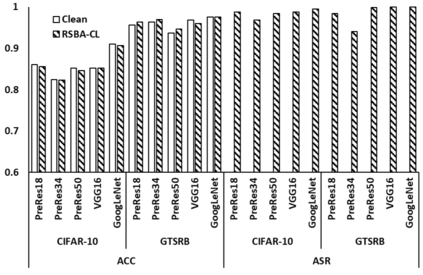

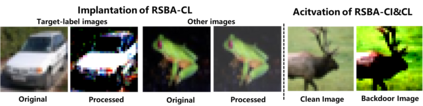

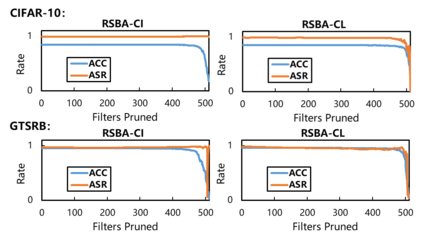

Learning-based systems have been shown to be vulnerable to backdoor attacks, in which malicious users inject a backdoor into the target model and later activate it with a specific trigger to manipulate the model's performance. Previous backdoor attacks have focused primarily on two metrics: attack success rate and stealthiness. However, these methods often require significant privileges over the target model, such as control over the training process, which makes them difficult to carry out in real-world scenarios. Moreover, the robustness of existing backdoor attacks is not guaranteed: they are sensitive to defenses such as image augmentations and model distillation. In this paper, we address these two limitations and introduce RSBA (Robust Statistical Backdoor Attack under Privilege-constrained Scenarios). The key insight of RSBA is that statistical features naturally divide images into different groups and can therefore serve as triggers. Such a trigger is more robust than a manually designed one because it is widely distributed in normal images. By leveraging these statistical triggers, RSBA enables attackers to conduct black-box attacks by poisoning only the labels or only the images. We demonstrate, both empirically and theoretically, the robustness of RSBA against image augmentations and model distillation. Experimental results show that RSBA achieves a 99.83% attack success rate in black-box scenarios. Remarkably, it maintains a high success rate even after model distillation, where attackers have no access to the training dataset of the student model; baseline methods average a 1.39% success rate in that setting.
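To make the idea of a statistical trigger concrete, the following is a minimal sketch of label-only poisoning. It is an illustration under stated assumptions, not the RSBA procedure itself: the abstract does not say which statistic is used, so per-image pixel variance stands in as a hypothetical choice, and the names `image_statistic`, `poison_labels`, and the `quantile` parameter are invented for this example.

```python
import numpy as np


def image_statistic(images: np.ndarray) -> np.ndarray:
    """Per-image pixel variance (an assumed statistic, not confirmed by the source).

    `images` has shape (N, H, W, C).
    """
    flat = images.reshape(images.shape[0], -1)
    return flat.var(axis=1)


def poison_labels(images, labels, target_class, quantile=0.95):
    """Relabel images whose statistic exceeds a quantile threshold.

    Only the labels change; the images are left untouched. The quantile
    threshold is a hypothetical way to pick the "triggered" group.
    """
    stats = image_statistic(images)
    threshold = np.quantile(stats, quantile)
    triggered = stats > threshold          # images that naturally carry the trigger
    poisoned = labels.copy()
    poisoned[triggered] = target_class     # poison labels only
    return poisoned, triggered, threshold


# Synthetic data: random images with varying contrast, so variance differs per image.
rng = np.random.default_rng(0)
scale = rng.uniform(0.2, 1.0, size=(200, 1, 1, 1))
images = rng.random((200, 8, 8, 3)) * scale
labels = rng.integers(0, 10, size=200)

poisoned, triggered, threshold = poison_labels(images, labels, target_class=0)
print(f"relabeled {int(triggered.sum())} of {len(labels)} images")
```

Because the grouping depends on a property that clean images already have, no visible pattern needs to be stamped onto any image; at inference time an attacker would instead adjust an input so that its statistic crosses the threshold.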
