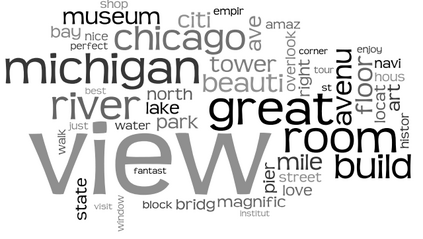

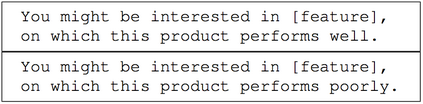

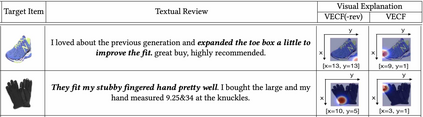

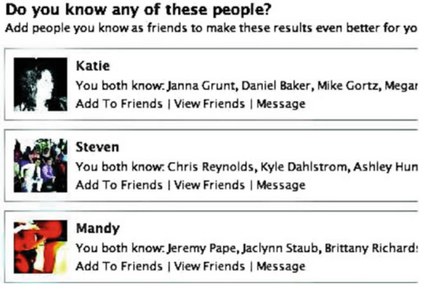

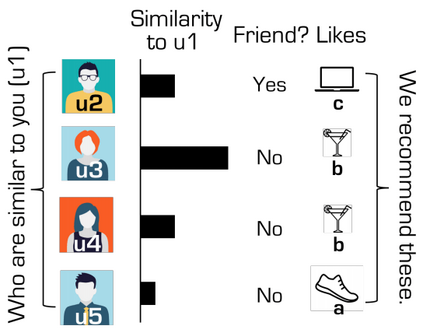

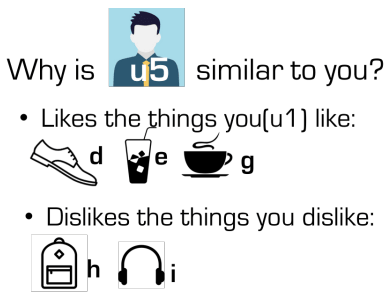

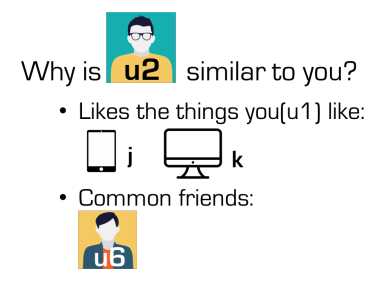

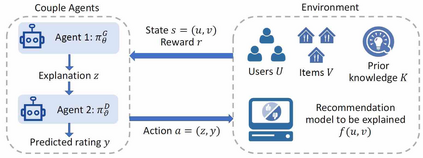

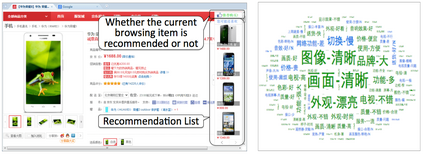

Explainable recommendation aims to develop models that generate not only high-quality recommendations but also intuitive explanations. The explanations may be post hoc or may come directly from an explainable model (also called an interpretable or transparent model in some contexts). Explainable recommendation addresses the problem of why: by providing explanations to users or system designers, it helps them understand why the algorithm recommends certain items. It helps to improve the transparency, persuasiveness, effectiveness, and trustworthiness of recommendation systems, as well as user satisfaction with them. In this survey, we review work on explainable recommendation published in or before 2019. We first highlight the position of explainable recommendation in recommender system research by categorizing recommendation problems into the 5W, i.e., what, when, who, where, and why. We then conduct a comprehensive survey of explainable recommendation from three perspectives: 1) We provide a chronological research timeline of explainable recommendation, including user study approaches in the early years and more recent model-based approaches. 2) We provide a two-dimensional taxonomy to classify existing explainable recommendation research: one dimension is the information source (or display style) of the explanations, and the other dimension is the algorithmic mechanism used to generate explainable recommendations. 3) We summarize how explainable recommendation applies to different recommendation tasks, such as product recommendation, social recommendation, and POI recommendation. We also devote a section to explanation perspectives in broader IR and AI/ML research. We end the survey by discussing potential future directions for explainable recommendation research and beyond.
