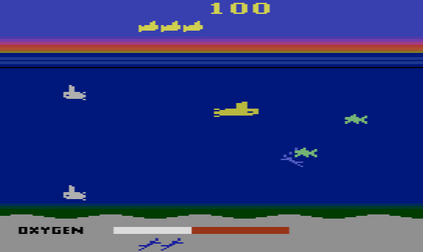

Balancing exploration and conservatism in the constrained setting is an important problem if we are to use reinforcement learning for meaningful tasks in the real world. In this paper, we propose a principled algorithm for safe exploration based on the concept of shielding. Previous approaches to shielding assume access to a safety-relevant abstraction of the environment or to a high-fidelity simulator. Instead, our work builds on latent shielding, an alternative approach that leverages world models to verify policy roll-outs in the latent space of a learned dynamics model. Our novel algorithm extends this prior work with safety critics and other features that improve the stability and farsightedness of the algorithm. We demonstrate the effectiveness of our approach with experiments on a small set of Atari games with state-dependent safety labels. We present preliminary results showing that our approximate shielding algorithm effectively reduces the rate of safety violations and, in some cases, improves both the speed of convergence and the quality of the final agent.
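The abstract describes the shielding mechanism only at a high level. As a rough illustration, the sketch below shows one way an approximate latent shield *could* be structured. It assumes a learned model that samples imagined latent transitions together with a predicted safety label, and a safety critic that estimates discounted future cost from a latent state. For each candidate action, the shield averages the discounted cost over several imagined roll-outs, bootstraps past the finite horizon with the critic, and overrides the proposed action when its estimate exceeds a threshold. All names (`dynamics`, `policy`, `critic`, `shield`, `threshold`, the toy 1-D world) are hypothetical and are not taken from the paper; the paper's actual algorithm may differ substantially.

```python
import random
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical interfaces, assumed for illustration (not the paper's code):
#   dynamics(z, a, rng) -> (z_next, cost): one imagined step in latent space,
#       where cost is 1.0 if the predicted safety label is "unsafe", else 0.0
#   policy(z, rng) -> action: the task policy used to continue the roll-out
#   critic(z) -> float: safety critic, estimated discounted future cost from z
Latent = float
Dynamics = Callable[[Latent, int, random.Random], Tuple[Latent, float]]
Policy = Callable[[Latent, random.Random], int]
Critic = Callable[[Latent], float]


def estimate_cost(z: Latent, action: int, dynamics: Dynamics, policy: Policy,
                  critic: Critic, horizon: int, gamma: float,
                  n_samples: int, rng: random.Random) -> float:
    """Monte Carlo estimate of the discounted safety cost of taking `action`
    in latent state `z` and then following `policy` in imagination."""
    total = 0.0
    for _ in range(n_samples):
        state, a, ret, discount = z, action, 0.0, 1.0
        for _ in range(horizon):
            state, cost = dynamics(state, a, rng)
            ret += discount * cost
            discount *= gamma
            a = policy(state, rng)
        # Bootstrap past the finite horizon with the safety critic, so a
        # short imagined roll-out still accounts for longer-term risk.
        ret += discount * critic(state)
        total += ret
    return total / n_samples


def shield(z: Latent, proposed: int, actions: Sequence[int],
           dynamics: Dynamics, policy: Policy, critic: Critic,
           horizon: int = 5, gamma: float = 0.99, n_samples: int = 32,
           threshold: float = 0.1, seed: int = 0) -> int:
    """Keep the proposed action if its estimated cost is within `threshold`;
    otherwise fall back to the action with the lowest estimated cost."""
    rng = random.Random(seed)
    costs: Dict[int, float] = {
        a: estimate_cost(z, a, dynamics, policy, critic,
                         horizon, gamma, n_samples, rng)
        for a in actions
    }
    if costs[proposed] <= threshold:
        return proposed
    return min(actions, key=lambda a: costs[a])


# Toy 1-D "latent" world (hypothetical): positions >= 3 are unsafe.
def toy_dynamics(z: Latent, a: int, rng: random.Random) -> Tuple[Latent, float]:
    # With probability 0.2 a move overshoots by one extra step.
    z_next = z + a + (a if rng.random() < 0.2 else 0)
    return z_next, 1.0 if z_next >= 3 else 0.0


def toy_policy(z: Latent, rng: random.Random) -> int:
    return 0  # imagined continuation: stay put


def toy_critic(z: Latent) -> float:
    return 1.0 if z >= 3 else 0.0


if __name__ == "__main__":
    acts = [-1, 0, 1]
    print(shield(0.0, 1, acts, toy_dynamics, toy_policy, toy_critic))  # prints 1
    print(shield(2.0, 1, acts, toy_dynamics, toy_policy, toy_critic))  # prints -1
```

In the toy run, moving right from position 0 is allowed because no imagined roll-out reaches the unsafe region, while moving right from position 2 is overridden because every roll-out does. Bootstrapping with a critic is one plausible reading of how safety critics could add farsightedness to a fixed-horizon check; it is an assumption here, not a claim about the paper's design.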
