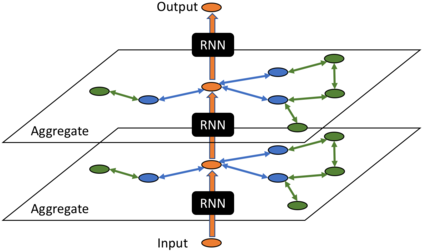

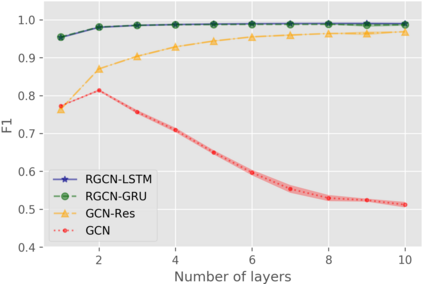

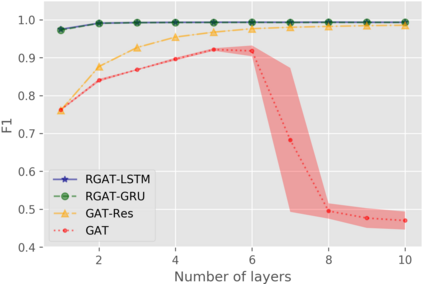

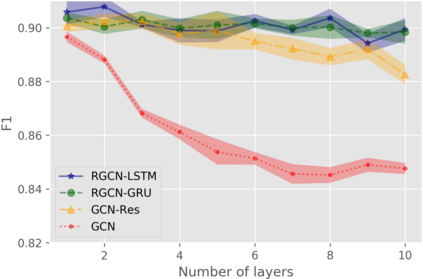

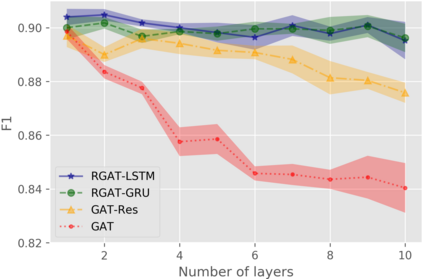

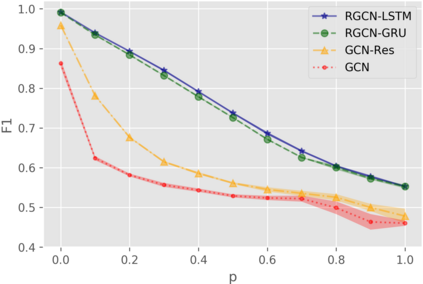

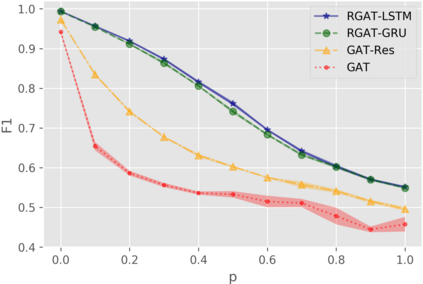

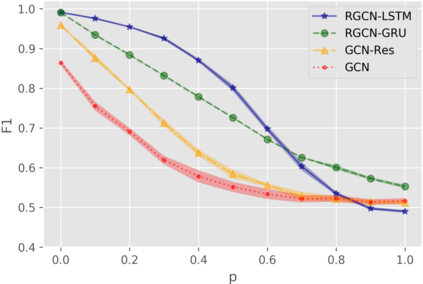

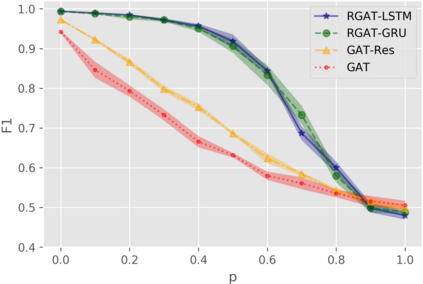

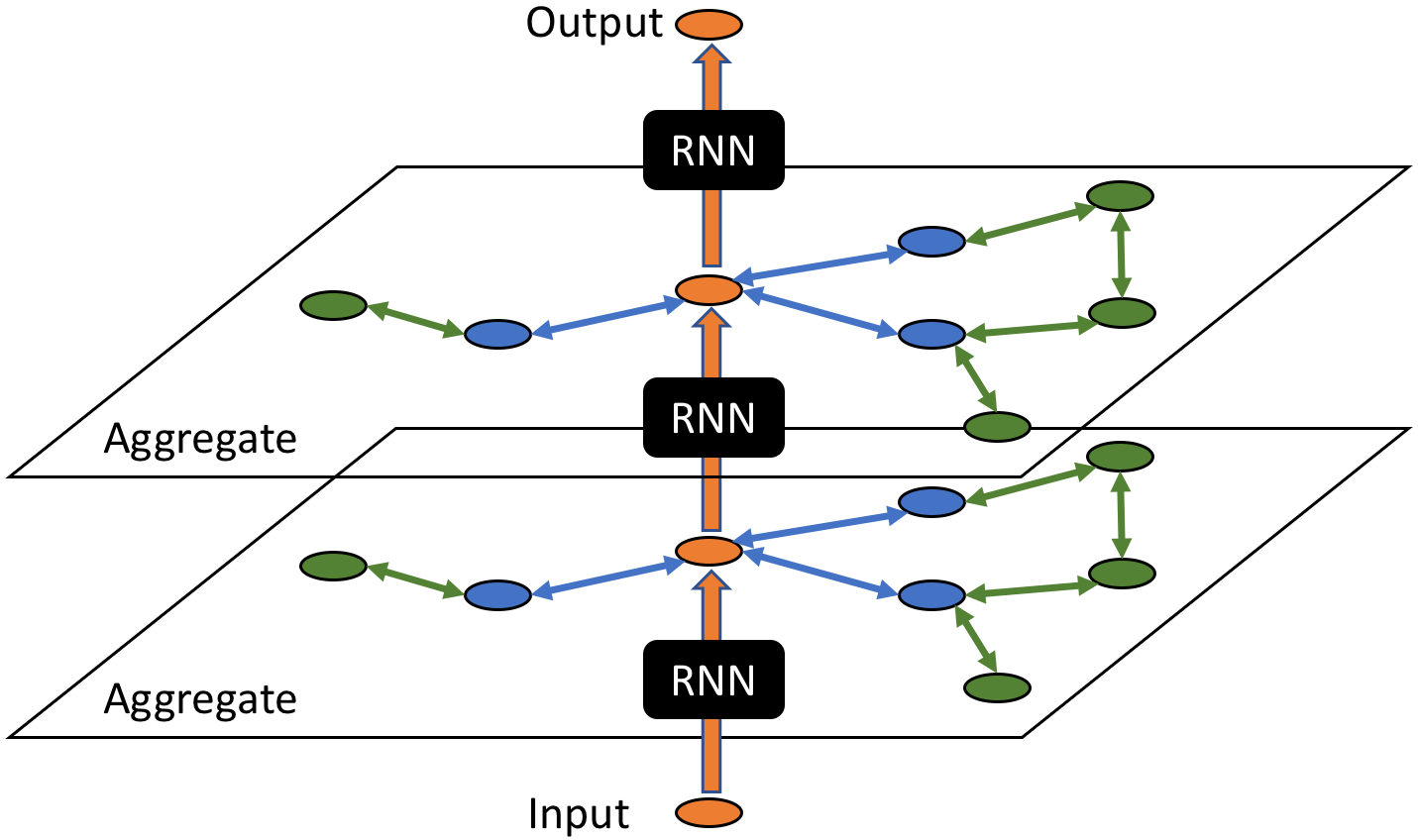

In this paper, we study node representation learning with graph neural networks. We present a class of graph neural networks, named recurrent graph neural network (RGNN), that addresses the shortcomings of prior methods. By using recurrent units to capture long-term dependencies across layers, our methods can identify important information during recursive neighborhood expansion. In our experiments, we show that our model class achieves state-of-the-art results on three benchmarks: the Pubmed, Reddit, and PPI network datasets. Our in-depth analyses also demonstrate that incorporating recurrent units is a simple yet effective way to suppress noisy information in graphs, which in turn enables deeper graph neural networks.
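To make the idea of a recurrent unit carrying information across layers concrete, here is a minimal NumPy sketch. It is not the paper's implementation, since the abstract does not specify the architecture. The choice of a GRU cell, mean aggregation over neighbors, weights shared across layers, and every name used (`mean_aggregate`, `gru_update`, `rgnn_forward`, `init_params`) are assumptions made purely for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def mean_aggregate(adj, h):
    """Average the representations of each node's neighbors.

    Assumption: mean aggregation; the abstract does not name an aggregator.
    Isolated nodes (degree 0) receive a zero message.
    """
    deg = adj.sum(axis=1, keepdims=True)
    return adj @ h / np.maximum(deg, 1.0)


def gru_update(m, h, p):
    """Standard GRU cell: m is the input (neighbor message), h the carried state."""
    z = sigmoid(m @ p["Wz"] + h @ p["Uz"])              # update gate
    r = sigmoid(m @ p["Wr"] + h @ p["Ur"])              # reset gate
    h_tilde = np.tanh(m @ p["Wh"] + (r * h) @ p["Uh"])  # candidate state
    return (1.0 - z) * h + z * h_tilde                  # gated mix of old and new


def init_params(dim, rng):
    """Hypothetical helper: small random square weight matrices."""
    names = ["Wz", "Uz", "Wr", "Ur", "Wh", "Uh"]
    return {n: rng.normal(scale=0.1, size=(dim, dim)) for n in names}


def rgnn_forward(adj, x, params, num_layers):
    """Each layer expands the neighborhood by one hop; the GRU gates decide
    how much of the new message overwrites the state from earlier layers.
    Assumption: the same GRU weights are reused at every layer."""
    h = x
    for _ in range(num_layers):
        m = mean_aggregate(adj, h)
        h = gru_update(m, h, params)
    return h


# Toy path graph with 4 nodes and 8-dimensional features.
rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
x = rng.normal(size=(4, 8))
params = init_params(8, rng)
out = rgnn_forward(adj, x, params, num_layers=5)
print(out.shape)
```

In this sketch the update gate `z` lets a node keep its earlier representation when the incoming neighborhood message is uninformative, which is one plausible reading of how recurrent units could limit noise as depth grows.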
