内涵网络嵌入:Content-rich Network Embedding

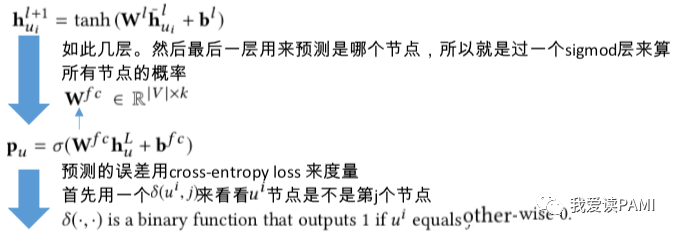

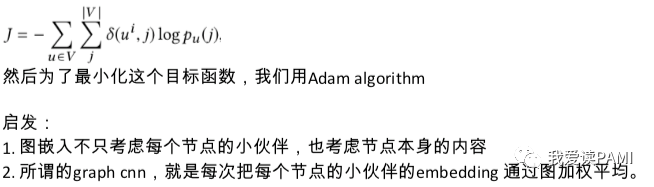

这篇文章说的还是图嵌入,但是每个节点不只有网络结构,还有自己的内容,比如一个文本。作者说要嵌入的话,就网络结是不够的,还要把文本的内容也一起嵌入到矢量里去。先用一个cnn表达文本,然后把一个节点的文本表达,和它的邻居的文本表达,一起放进一个graph cnn提取它的特征,用来预测它是哪个节点。作者是南开大学的Jie Liu教授。

Learning Network-to-Network Model for Content-rich Network Embedding

He, Zhicheng, Jie Liu, Na Li, and Yalou Huang

In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pp. 1037-1045. ACM, 2019.

Recently, network embedding (NE) has achieved great successes in learning low dimensional representations for network nodes and has been increasingly applied to various network analytic tasks. In this paper, we consider the representation learning problem for content-rich networks whose nodes are associated with rich content information. Content-rich network embedding is challenging in fusing the complex structural dependencies and the rich contents. To tackle the challenges, we propose a generative model, Network-to-Network Network Embedding (Net2Net-NE) model, which can effectively fuse the structure and content information into one continuous embedding vector for each node. Specifically, we regard the content-rich network as a pair of networks with different modalities, i.e., content network and node network. By exploiting the strong correlation between the focal node and the nodes to whom it is connected to, a multilayer recursively composable encoder is proposed to fuse the structure and content information of the entire ego network into the egocentric node embedding. Moreover, a cross-modal decoder is deployed to mapping the egocentric node embeddings into node identities in an interconnected network. By learning the identity of each node according to its content, the mapping from content network to node network is learned in a generative manner. Hence the latent encoding vectors learned by the Net2Net-NE can be used as effective node embeddings. Extensive experimental results on three real-world networks demonstrate the superiority of Net2Net-NE over state-of-the-art methods.