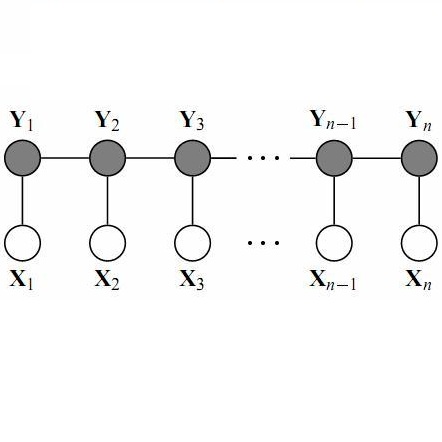

Fine-grained action segmentation and recognition is an important yet challenging task. Given a long, untrimmed sequence of kinematic data, the task is to classify the action at each time frame and segment the time series into the correct sequence of actions. In this paper, we propose a novel framework that combines a temporal Conditional Random Field (CRF) model with a powerful frame-level representation based on discriminative sparse coding. We introduce an end-to-end algorithm for jointly learning the weights of the CRF model, which include action classification and action transition costs, as well as an overcomplete dictionary of mid-level action primitives. This results in a CRF model that is driven by sparse coding features obtained using a discriminative dictionary that is shared among different actions and adapted to the task of structured output learning. We evaluate our method on three surgical tasks using kinematic data from the JIGSAWS dataset, as well as on a food preparation task using accelerometer data from the 50 Salads dataset. Our results show that the proposed method performs on par with or better than state-of-the-art methods.
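To make the inference side of such a model concrete, here is a minimal sketch under stated assumptions; the abstract does not give the exact formulation. It assumes each frame is sparse-coded by solving a lasso problem with ISTA, that per-action unary scores are linear in the sparse codes (weights `W`), and that a K×K matrix of transition scores is decoded with the Viterbi algorithm. Scores are maximized here, which is equivalent to minimizing costs with the sign flipped. Only inference with a fixed dictionary `D` and fixed weights is shown; the joint end-to-end learning of the dictionary and CRF weights described above is not implemented. All function names (`sparse_code`, `viterbi`, `segment`) and parameters (`lam`, `n_iter`) are hypothetical.

```python
import numpy as np


def sparse_code(x, D, lam=0.1, n_iter=200):
    """Sparse-code one frame x with dictionary D (columns are atoms) via ISTA.

    Assumed objective (lasso): min_a 0.5 * ||x - D a||^2 + lam * ||a||_1.
    """
    L = np.linalg.norm(D, 2) ** 2  # Lipschitz constant of the smooth term's gradient
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - x)
        z = a - grad / L
        a = np.sign(z) * np.maximum(np.abs(z) - lam / L, 0.0)  # soft-threshold
    return a


def viterbi(unary, transition):
    """Return the label path maximizing
    sum_t unary[t, y_t] + sum_{t>0} transition[y_{t-1}, y_t]."""
    T, K = unary.shape
    score = unary[0].copy()
    back = np.zeros((T, K), dtype=int)
    for t in range(1, T):
        cand = score[:, None] + transition  # cand[i, j]: previous label i -> label j
        back[t] = np.argmax(cand, axis=0)
        score = cand[back[t], np.arange(K)] + unary[t]
    path = [int(np.argmax(score))]
    for t in range(T - 1, 0, -1):  # follow back-pointers to recover earlier labels
        path.append(int(back[t, path[-1]]))
    return path[::-1]


def segment(X, D, W, transition, lam=0.1):
    """Label each frame of X (T x d): sparse-code, score linearly, then decode."""
    A = np.stack([sparse_code(x, D, lam) for x in X])  # T x n_atoms sparse codes
    unary = A @ W.T  # T x K; W is K x n_atoms (one weight vector per action)
    return viterbi(unary, transition)


if __name__ == "__main__":
    D = np.eye(2)  # toy dictionary with two atoms
    W = np.eye(2)  # toy action weights: action k responds to atom k
    switch_penalty = np.array([[0.0, -0.5], [-0.5, 0.0]])
    X = np.array([[1, 0], [1, 0], [0, 0.3], [1, 0], [1, 0]], dtype=float)
    print(segment(X, D, W, switch_penalty))    # [0, 0, 0, 0, 0]
    print(segment(X, D, W, np.zeros((2, 2))))  # [0, 0, 1, 0, 0]
```

In the toy run, the weak third frame is labeled as a separate action when transitions are free, but the switching penalty smooths it away. This illustrates why the transition costs matter for producing coherent segments rather than noisy frame-wise labels.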
