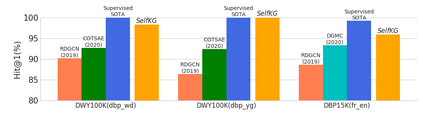

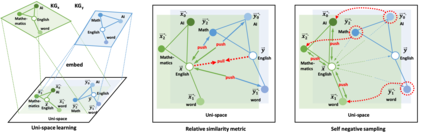

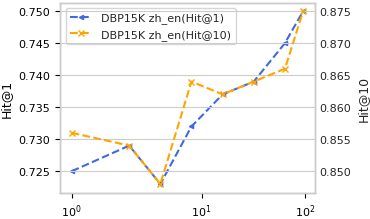

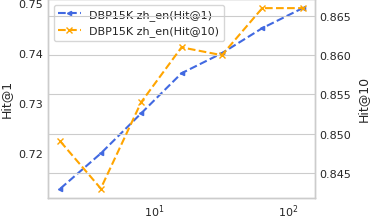

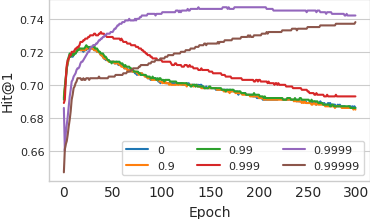

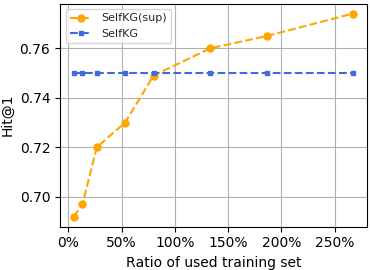

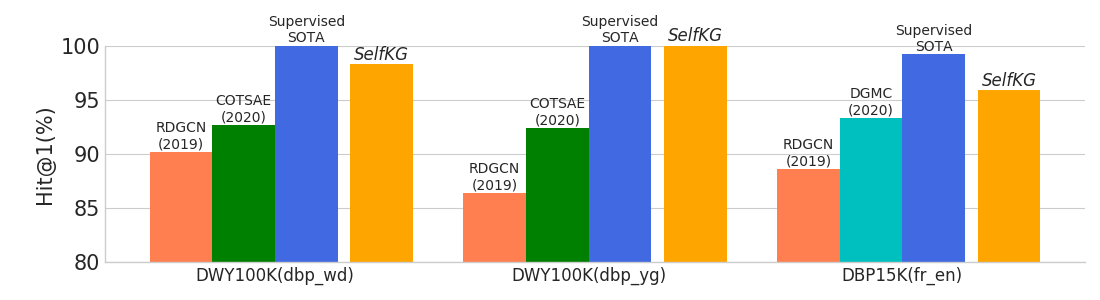

Entity alignment, which aims to identify equivalent entities across different knowledge graphs (KGs), is a fundamental problem in constructing large-scale KGs. Throughout the development of the field, supervision has been considered necessary for accurate alignment. Inspired by recent progress in self-supervised learning, we explore the extent to which supervision can be removed from entity alignment. Existing supervised methods for this task focus on pulling each pair of positive (labeled) entities close to each other. However, our analysis suggests that learning entity alignment can actually benefit more from pushing sampled (unlabeled) negatives far away than from pulling positive aligned pairs close. Building on this finding, we present SelfKG, which uses a contrastive learning strategy across two KGs. Extensive experiments on benchmark datasets demonstrate that SelfKG, without supervision, can match or come close to state-of-the-art supervised baselines. The performance of SelfKG demonstrates that self-supervised learning offers great potential for entity alignment in KGs.
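
The pull/push distinction in the abstract can be illustrated with a generic InfoNCE-style contrastive loss, which splits into a term that pulls the positive pair together and a term that pushes negatives away. The following is only a minimal sketch under stated assumptions, not SelfKG's actual objective: the function names, the temperature value, and the use of plain NumPy vectors as entity embeddings are all hypothetical choices made for illustration.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length so dot products are cosine similarities."""
    norm = np.linalg.norm(x, axis=axis, keepdims=True)
    return x / np.maximum(norm, eps)


def info_nce_terms(anchor, positive, negatives, temperature=0.1):
    """Return (pull, push) for one anchor; pull + push is the InfoNCE loss.

    anchor:    (d,)   embedding of an entity in one KG (hypothetical input)
    positive:  (d,)   embedding of its aligned entity in the other KG
    negatives: (n, d) embeddings of sampled non-matching entities
    temperature is an illustrative value, not one taken from the paper.
    """
    a = l2_normalize(anchor)
    p = l2_normalize(positive)
    n = l2_normalize(negatives)

    pos_logit = (a @ p) / temperature   # scalar similarity to the positive
    neg_logits = (n @ a) / temperature  # (n,) similarities to the negatives

    # Numerically stable log-sum-exp over the positive and all negatives.
    logits = np.concatenate(([pos_logit], neg_logits))
    m = logits.max()
    log_sum_exp = m + np.log(np.exp(logits - m).sum())

    pull = -pos_logit    # shrinks as the positive pair gets closer
    push = log_sum_exp   # shrinks as negatives move away from the anchor
    return pull, push


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    anchor = rng.normal(size=8)
    positive = anchor + 0.1 * rng.normal(size=8)
    negatives = rng.normal(size=(16, 8))
    pull, push = info_nce_terms(anchor, positive, negatives)
    print("pull term:", pull, "push term:", push, "loss:", pull + push)
```

In this decomposition, a supervised method needs labeled pairs to compute the pull term, whereas the push term only requires sampled unlabeled entities, which is the part the abstract argues matters more.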
