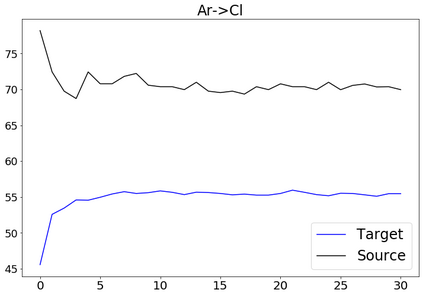

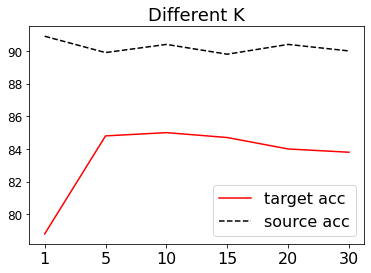

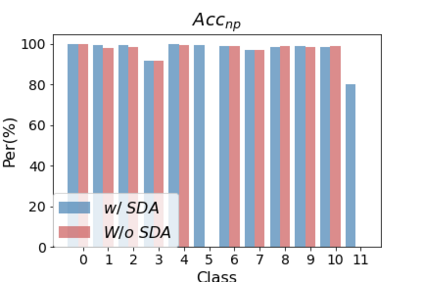

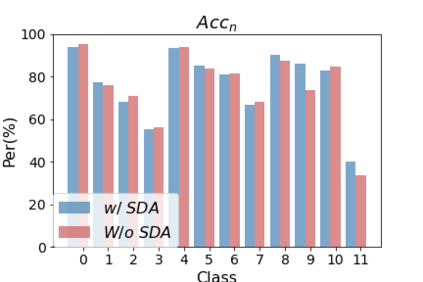

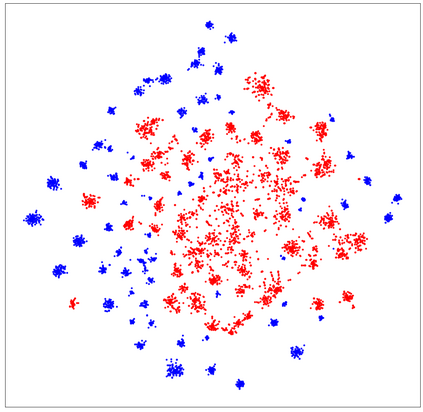

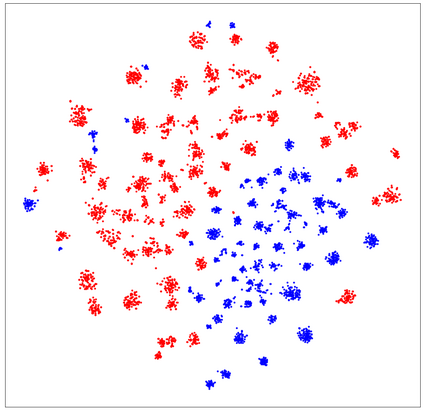

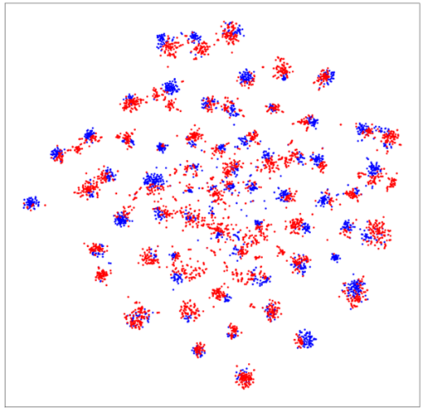

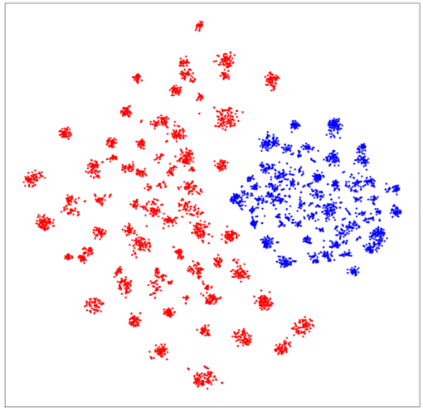

Domain adaptation (DA) aims to transfer the knowledge learned from a source domain to an unlabeled target domain. Some recent works tackle source-free domain adaptation (SFDA), where only a source pre-trained model is available for adaptation to the target domain. However, those methods do not consider preserving source performance, which is of high practical value in real-world applications. In this paper, we propose a new domain adaptation paradigm called Generalized Source-free Domain Adaptation (G-SFDA), where the learned model needs to perform well on both the target and source domains, with access only to the current unlabeled target data during adaptation. First, we propose local structure clustering (LSC), which clusters each target feature with its semantically similar neighbors and thereby adapts the model to the target domain in the absence of source data. Second, we propose sparse domain attention (SDA), which produces a binary domain-specific attention that activates different feature channels for different domains; the domain attention is also used to regularize the gradient during adaptation so that source information is kept. In the experiments, the target performance of our method is on par with or better than existing DA and SFDA methods; in particular, it achieves state-of-the-art performance (85.4%) on VisDA. Our method also works well for all domains after adapting to single or multiple target domains. Code is available at https://github.com/Albert0147/G-SFDA.
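The two ideas named in the abstract can be illustrated with a small, dependency-free sketch. It is not the authors' implementation (see the linked repository for that). The function names, the cosine-similarity neighbor search, the `-log` agreement loss, and the rule of zeroing gradients on source-active channels are all assumptions chosen to match the abstract's wording, not details confirmed by it.

```python
import math

def cosine(u, v):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def nearest_neighbors(features, i, k):
    """Indices of the k features most similar to features[i], excluding i itself."""
    others = [j for j in range(len(features)) if j != i]
    others.sort(key=lambda j: cosine(features[i], features[j]), reverse=True)
    return others[:k]

def neighbor_consistency_loss(features, probs, k=1):
    """Assumed LSC-style objective: mean of -log(p_i . p_j) over each sample i
    and its k nearest neighbors j. It is small when neighbors predict alike."""
    total, count = 0.0, 0
    for i in range(len(features)):
        for j in nearest_neighbors(features, i, k):
            agreement = sum(a * b for a, b in zip(probs[i], probs[j]))
            total += -math.log(agreement + 1e-8)
            count += 1
    return total / count

def apply_mask(feature, mask):
    """Assumed SDA-style gating: a binary mask keeps or silences each channel."""
    return [f * m for f, m in zip(feature, mask)]

def protect_source_channels(grad, source_mask):
    """Assumed gradient regularization: drop gradient entries on channels that
    the source mask activates, so target updates leave them untouched."""
    return [g * (1 - m) for g, m in zip(grad, source_mask)]

# Toy data: two tight groups of target features.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
consistent = [[0.9, 0.1], [0.9, 0.1], [0.1, 0.9], [0.1, 0.9]]
inconsistent = [[0.9, 0.1], [0.1, 0.9], [0.9, 0.1], [0.1, 0.9]]

# Hypothetical 4-channel masks; channel 1 is shared by both domains.
source_mask = [1, 1, 0, 0]
target_mask = [0, 1, 1, 0]

if __name__ == "__main__":
    print(neighbor_consistency_loss(features, consistent))
    print(neighbor_consistency_loss(features, inconsistent))
    print(apply_mask([0.5, -1.0, 2.0, 3.0], target_mask))
    print(protect_source_channels([0.3, -0.2, 0.5, 0.1], source_mask))
```

In this sketch the consistency loss is lower when neighboring features receive the same prediction, which is the clustering pressure the abstract attributes to LSC. The gradient filter shows one simple way a binary mask could shield source-relevant channels while the model adapts to target data.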
