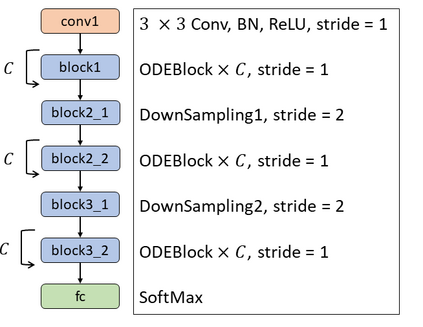

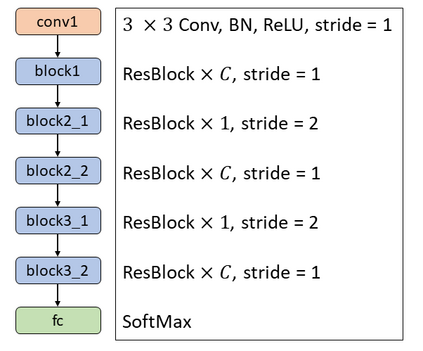

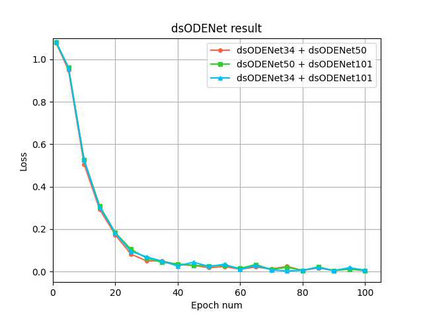

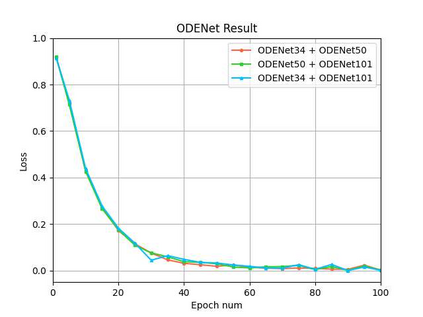

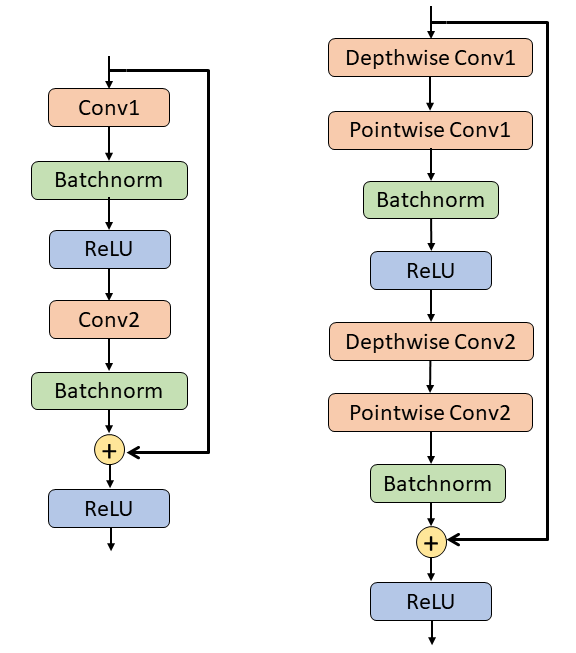

Federated learning is a machine learning method in which, for security and privacy reasons, training data is not aggregated on a server but remains distributed across edge devices. ResNet is a classic yet representative neural network that made very deep networks trainable by having each block learn a residual function whose output is added to the block's input. In federated learning, the server and edge devices exchange weight parameters, but because ResNet has many layers and a large number of parameters, the communication size becomes large. In this paper, we use Neural ODE as a lightweight alternative to ResNet to reduce the communication size in federated learning. In addition, we introduce a flexible federated learning scheme that uses Neural ODE models with different numbers of iterations, which correspond to ResNets of different depths. In an evaluation on the CIFAR-10 dataset, Neural ODE reduces the communication size by approximately 90% compared to ResNet. We also show that the proposed flexible federated learning can merge models with different iteration counts.
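To illustrate why models with different iteration counts can be merged, the following is a minimal sketch under our own assumptions, not the implementation evaluated in this paper. It models a Neural ODE block as a single weight-shared residual update applied repeatedly with Euler steps, so its parameter count does not depend on the number of iterations. The class `ODEBlock`, the toy per-feature layer, the step size `1 / iterations`, and the sample-count-weighted `federated_average` function are all hypothetical names and choices introduced for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class ODEBlock:
    """Toy weight-shared residual block (hypothetical, for illustration only).

    Applies x <- x + h * relu(w * x + b) `iterations` times with the same
    parameters, so the iteration count plays the role of ResNet depth.
    """
    weight: List[float]  # one weight per feature (toy layer, an assumption)
    bias: List[float]    # one bias per feature
    iterations: int      # number of Euler steps

    def forward(self, x: Sequence[float]) -> List[float]:
        h = 1.0 / self.iterations  # assumed step size over a unit interval
        out = list(x)
        for _ in range(self.iterations):
            out = [
                xi + h * max(0.0, w * xi + b)
                for xi, w, b in zip(out, self.weight, self.bias)
            ]
        return out

    def num_params(self) -> int:
        # Independent of `iterations`: the same parameters are reused.
        return len(self.weight) + len(self.bias)


def federated_average(
    clients: Sequence[ODEBlock], sample_counts: Sequence[int]
) -> Tuple[List[float], List[float]]:
    """Sample-count-weighted average of client parameters (FedAvg-style).

    Clients may use different iteration counts; their parameter shapes
    still match, so element-wise averaging is well defined.
    """
    total = sum(sample_counts)
    dim = len(clients[0].weight)
    weight = [
        sum(c.weight[i] * n for c, n in zip(clients, sample_counts)) / total
        for i in range(dim)
    ]
    bias = [
        sum(c.bias[i] * n for c, n in zip(clients, sample_counts)) / total
        for i in range(dim)
    ]
    return weight, bias


# Two clients with different iteration counts but identical parameter shapes.
shallow = ODEBlock(weight=[1.0, 2.0], bias=[0.0, 1.0], iterations=2)
deep = ODEBlock(weight=[3.0, 4.0], bias=[4.0, 5.0], iterations=4)
merged_weight, merged_bias = federated_average([shallow, deep], [1, 3])
```

In this sketch, a client exchanges `num_params()` values regardless of its iteration count, whereas an unshared stack of the same depth would exchange roughly `iterations` times as many. The actual saving depends on the full architecture, which the sketch does not model.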
