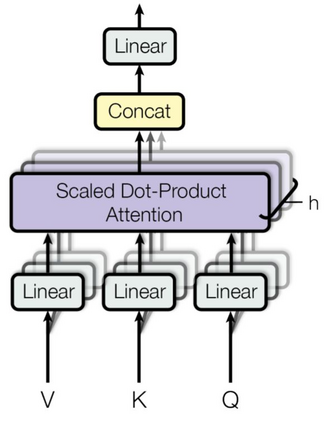

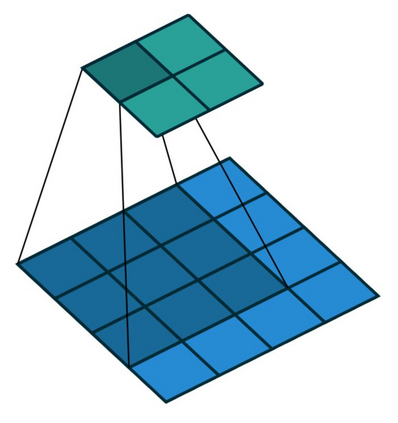

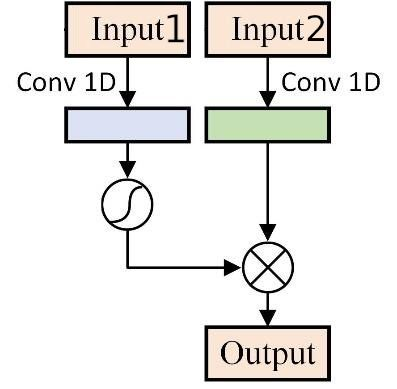

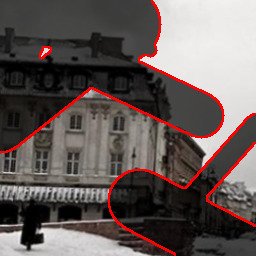

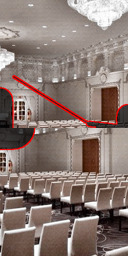

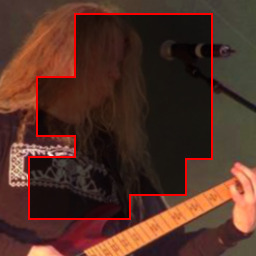

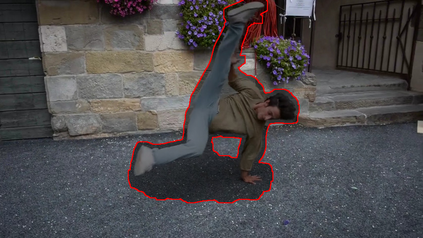

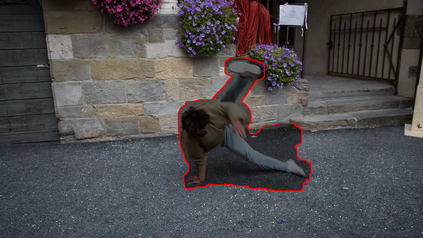

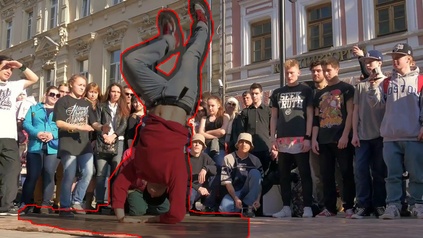

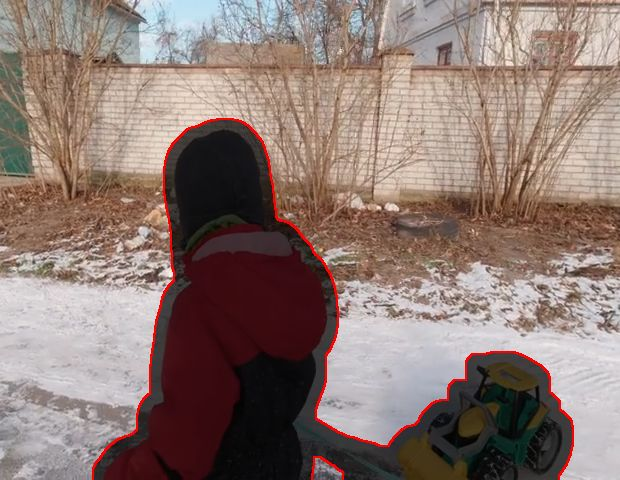

Many video editing tasks, such as rotoscoping or object removal, require propagating context across frames. While transformers and other attention-based approaches that aggregate features globally have demonstrated great success at propagating object masks from keyframes to the whole video, they struggle to faithfully propagate high-frequency details such as textures. We hypothesize that this is due to an inherent bias of global attention towards low-frequency features. To overcome this limitation, we present a two-stream approach in which high-frequency features interact locally and low-frequency features interact globally. The global interaction stream remains robust in difficult situations, such as large camera motions, where explicit alignment fails. The local interaction stream propagates high-frequency details through deformable feature aggregation and, informed by the global interaction stream, learns to detect and correct errors in the deformation field. We evaluate our two-stream approach on inpainting tasks, where experiments show that it improves both the propagation of features within a single frame, as required for image inpainting, and their propagation from keyframes to target frames. Applied to video inpainting, our approach yields improvements of 44% in FID and 26% in LPIPS. Code is available at https://github.com/runwayml/guided-inpainting
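
The two-stream idea can be illustrated with a minimal NumPy sketch. This is not the authors' implementation (see the linked repository for that), and several assumptions are made for illustration only: the frequency split is a simple box blur plus residual; the global stream is single-head dot-product attention without learned projections; the local stream is bilinear warping with a given flow field, standing in for learned deformable aggregation; and the learned error detection is replaced by a hand-crafted gate that compares flow-warped low frequencies with the global stream's output. All function names (`split_frequencies`, `two_stream_propagate`, etc.) and the parameter `tau` are hypothetical.

```python
import numpy as np

def box_blur(x, k=5):
    """Low-pass filter each channel of a (C, H, W) map with a k x k box kernel (edge padding)."""
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)), mode="edge")
    H, W = x.shape[1:]
    out = np.zeros(x.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += xp[:, dy:dy + H, dx:dx + W]
    return out / (k * k)

def split_frequencies(x, k=5):
    """Hypothetical split: low = blurred map, high = residual, so low + high == x."""
    low = box_blur(x, k)
    return low, x - low

def global_attention(query_map, source_map):
    """Single-head dot-product attention: each target position attends to ALL source positions."""
    C, H, W = query_map.shape
    q = query_map.reshape(C, -1).T              # (H*W, C)
    kv = source_map.reshape(C, -1).T            # (Hs*Ws, C); keys and values share features here
    logits = q @ kv.T / np.sqrt(C)
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=1, keepdims=True)
    return (weights @ kv).T.reshape(C, H, W)

def bilinear_warp(src, flow):
    """Sample src (C, H, W) at (y + flow[0], x + flow[1]) with bilinear interpolation."""
    C, H, W = src.shape
    ys, xs = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    sy = np.clip(ys + flow[0], 0, H - 1)
    sx = np.clip(xs + flow[1], 0, W - 1)
    y0, x0 = np.floor(sy).astype(int), np.floor(sx).astype(int)
    y1, x1 = np.minimum(y0 + 1, H - 1), np.minimum(x0 + 1, W - 1)
    wy, wx = sy - y0, sx - x0
    top = src[:, y0, x0] * (1 - wx) + src[:, y0, x1] * wx
    bottom = src[:, y1, x0] * (1 - wx) + src[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy

def two_stream_propagate(target, keyframe, flow, tau=0.5):
    """Propagate keyframe features to the target: global stream for low, local stream for high frequencies."""
    low_t, _ = split_frequencies(target)
    low_k, high_k = split_frequencies(keyframe)
    low_out = global_attention(low_t, low_k)   # robust to large motion, but carries little detail
    high_out = bilinear_warp(high_k, flow)     # sharp detail, but only as good as the flow
    # Hand-crafted stand-in for a learned error detector: where the flow-warped
    # low frequencies disagree with the global stream, trust the warped detail less.
    err = np.abs(bilinear_warp(low_k, flow) - low_out).mean(axis=0)
    gate = np.exp(-err / tau)                  # between 0 and 1, shape (H, W)
    return low_out + gate * high_out, gate
```

Because the low-frequency map carries little detail, it could in principle be downsampled before the attention step, whose cost grows quadratically with the number of positions; the sketch keeps full resolution for simplicity.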
