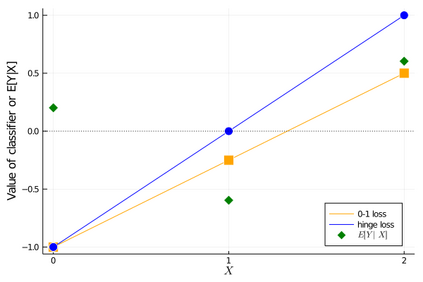

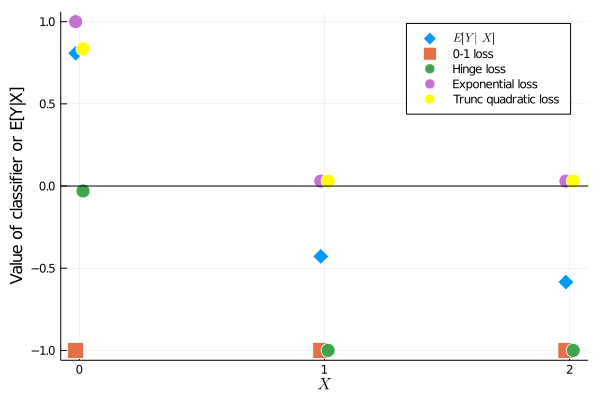

Modern machine learning approaches to classification, including AdaBoost, support vector machines, and deep neural networks, utilize surrogate loss techniques to circumvent the computational complexity of minimizing empirical classification risk. These techniques are also useful for causal policy learning problems, since estimation of individualized treatment rules can be cast as a weighted (cost-sensitive) classification problem. Consistency of the surrogate loss approaches studied in Zhang (2004) and Bartlett et al. (2006) crucially relies on the assumption of correct specification, meaning that the specified set of classifiers is rich enough to contain a first-best classifier. This assumption is, however, less credible when the set of classifiers is constrained by interpretability or fairness, leaving the applicability of surrogate loss based algorithms unknown in such second-best scenarios. This paper studies consistency of surrogate loss procedures under a constrained set of classifiers without assuming correct specification. We show that in the setting where the constraint restricts the classifier's prediction set only, hinge losses (i.e., $\ell_1$-support vector machines) are the only surrogate losses that preserve consistency in second-best scenarios. If the constraint additionally restricts the functional form of the classifier, consistency of a surrogate loss approach is not guaranteed even with hinge loss. We therefore characterize conditions for the constrained set of classifiers that can guarantee consistency of hinge risk minimizing classifiers. Exploiting our theoretical results, we develop robust and computationally attractive hinge loss based procedures for a monotone classification problem.
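The surrogate loss idea in the abstract can be made concrete with a small sketch. It compares the weighted (cost-sensitive) empirical classification risk with two convex surrogates: the hinge loss used by support vector machines and the exponential loss associated with AdaBoost. The function names, the toy scores, labels, and weights are all illustrative assumptions and are not taken from the paper; in particular, the weights are arbitrary positive numbers standing in for whatever cost-sensitive weights a policy learning problem would supply.

```python
import math

def zero_one_loss(margin):
    """Classification loss on the margin y * f(x); a margin of 0 counts as an error."""
    return 1.0 if margin <= 0 else 0.0

def hinge_loss(margin):
    """Hinge surrogate: max(0, 1 - margin). Convex upper bound on the 0-1 loss."""
    return max(0.0, 1.0 - margin)

def exponential_loss(margin):
    """Exponential surrogate: exp(-margin). Also a convex upper bound on the 0-1 loss."""
    return math.exp(-margin)

def weighted_empirical_risk(loss, scores, labels, weights):
    """Average of w_i * loss(y_i * f(x_i)) over the sample."""
    total = sum(w * loss(y * s) for s, y, w in zip(scores, labels, weights))
    return total / len(scores)

# Toy data (assumed for illustration): scores f(x_i), labels in {-1, +1},
# and non-negative cost-sensitive weights.
scores = [2.0, 0.5, -0.3, -1.5]
labels = [1, 1, 1, -1]
weights = [1.0, 2.0, 0.5, 1.0]

risk_01 = weighted_empirical_risk(zero_one_loss, scores, labels, weights)
risk_hinge = weighted_empirical_risk(hinge_loss, scores, labels, weights)
risk_exp = weighted_empirical_risk(exponential_loss, scores, labels, weights)

print(f"0-1 risk:         {risk_01:.4f}")
print(f"hinge risk:       {risk_hinge:.4f}")
print(f"exponential risk: {risk_exp:.4f}")
```

Both surrogate risks upper-bound the 0-1 risk on this sample, and both are convex in the scores, which is what makes them computationally attractive. The sketch only evaluates the risks at fixed scores; it does not illustrate the paper's consistency results, which concern whether minimizing a surrogate risk over a constrained class recovers the best classifier within that class.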
