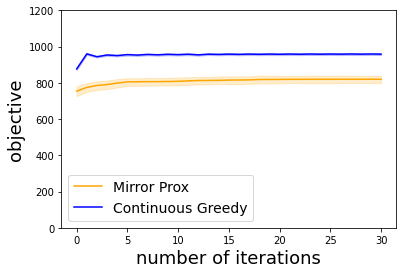

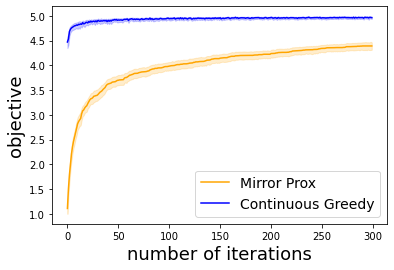

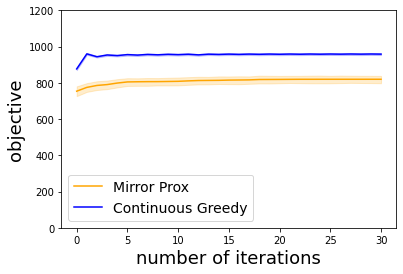

We study the problem of maximizing a continuous DR-submodular function that is not necessarily smooth. We prove that the continuous greedy algorithm achieves an $[(1-1/e)\text{OPT}-\epsilon]$ guarantee when the function is monotone and Hölder-smooth, meaning that it admits a Hölder-continuous gradient. For functions that are non-differentiable or non-smooth, we propose a variant of the mirror-prox algorithm that attains an $[(1/2)\text{OPT}-\epsilon]$ guarantee. We apply our algorithmic frameworks to robust submodular maximization and to distributionally robust submodular maximization under Wasserstein ambiguity. In particular, the mirror-prox method, applied to robust submodular maximization, yields a single feasible solution whose value is at least $(1/2)\text{OPT}-\epsilon$. For distributionally robust maximization under Wasserstein ambiguity, we derive a submodular-convex maximin reformulation whose objective function is Hölder-smooth, so both the continuous greedy method and the mirror-prox method apply to it.
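For readers unfamiliar with continuous greedy, the following is a minimal sketch of the classical method on a toy instance. It is not the analysis or implementation from this work: the probabilistic-coverage objective (which is smooth, monotone, and DR-submodular on $[0,1]^n$), the cardinality-type constraint $\{v \in [0,1]^n : \sum_i v_i \le k\}$, the step count, and all function names are assumptions chosen for illustration. The Hölder-smooth and mirror-prox settings described above are not covered here.

```python
import numpy as np


def coverage_value(x, P):
    """Toy objective (assumed): F(x) = sum_j [1 - prod_i (1 - P[i, j] * x[i])]."""
    return float(np.sum(1.0 - np.prod(1.0 - P * x[:, None], axis=0)))


def coverage_grad(x, P):
    """Gradient of the toy objective: dF/dx_i = sum_j P[i, j] * prod_{k != i} (1 - P[k, j] * x[k])."""
    one_minus = 1.0 - P * x[:, None]  # shape (n, m)
    grad = np.empty_like(x)
    for i in range(len(x)):
        # Product over all rows except row i, one value per column j.
        others = np.prod(np.delete(one_minus, i, axis=0), axis=0)
        grad[i] = np.sum(P[i] * others)
    return grad


def lmo_cardinality(g, k):
    """Linear maximization over {v in [0,1]^n : sum(v) <= k}: pick the k largest positive entries of g."""
    v = np.zeros_like(g)
    top = np.argsort(-g)[:k]
    v[top] = (g[top] > 0).astype(float)
    return v


def continuous_greedy(grad, n, k, steps=100):
    """Start at 0 and take `steps` moves of size 1/steps along the best feasible direction."""
    x = np.zeros(n)
    for _ in range(steps):
        v = lmo_cardinality(grad(x), k)
        x = x + v / steps  # x remains a convex combination of feasible points
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    P = rng.uniform(0.1, 0.9, size=(6, 5))  # hypothetical coverage probabilities
    x = continuous_greedy(lambda z: coverage_grad(z, P), n=6, k=2, steps=200)
    print("solution:", np.round(x, 3), "value:", coverage_value(x, P))
```

The final iterate is an average of `steps` feasible directions, so it stays inside the constraint set without any projection; this is the design feature that distinguishes continuous greedy from projected gradient ascent.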
