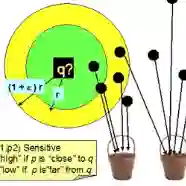

We study the problem of classifier derandomization in machine learning: given a stochastic binary classifier $f: X \to [0,1]$, sample a deterministic classifier $\hat{f}: X \to \{0,1\}$ that approximates the output of $f$ in aggregate over any data distribution. Recent work showed how to derandomize a stochastic classifier efficiently with strong output approximation guarantees, but at the cost of individual fairness: if $f$ treated similar inputs similarly, $\hat{f}$ did not. In this paper, we initiate a systematic study of classifier derandomization with metric fairness guarantees. We show that the prior derandomization approach is almost maximally metric-unfair, and that a simple "random threshold" derandomization achieves optimal fairness preservation but with weaker output approximation. We then devise a derandomization procedure that provides an appealing tradeoff between the two: if $f$ is $\alpha$-metric fair with respect to a metric $d$ that admits a locality-sensitive hash (LSH) family, then our derandomized $\hat{f}$ is, with high probability, $O(\alpha)$-metric fair and a close approximation of $f$. We also prove generic results that apply to all classifier derandomization procedures, fair and unfair, including a bias-variance decomposition and reductions between various notions of metric fairness.
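As a rough illustration of the "random threshold" idea mentioned above, the following minimal sketch assumes the derandomization draws a single threshold $t$ uniformly from $[0,1)$ and sets $\hat{f}(x) = 1$ exactly when $f(x) > t$. The classifier `f`, the helper names, and the sample inputs are hypothetical and chosen only for illustration; this is not the paper's own implementation. Under this assumption, two inputs receive different labels only when $t$ falls between $f(x)$ and $f(x')$, so the disagreement probability is $|f(x) - f(x')|$.

```python
import random


def random_threshold_derandomize(f, rng):
    """Draw one threshold t ~ Uniform[0, 1) and return x -> 1[f(x) > t].

    The same t is shared by every input (an assumption of this sketch).
    """
    t = rng.random()
    return lambda x: 1 if f(x) > t else 0


def disagreement_rate(f, x, x_prime, trials=20000, seed=0):
    """Estimate P[f_hat(x) != f_hat(x_prime)] over the random threshold."""
    rng = random.Random(seed)
    disagreements = 0
    for _ in range(trials):
        f_hat = random_threshold_derandomize(f, rng)
        disagreements += f_hat(x) != f_hat(x_prime)
    return disagreements / trials


def f(x):
    """Hypothetical stochastic classifier: probability of label 1, clipped to [0, 1]."""
    return min(1.0, max(0.0, x))


# Two nearby inputs with |f(x) - f(x')| = 0.05.
rate = disagreement_rate(f, 0.50, 0.55)
print(f"estimated disagreement probability: {rate:.3f}")
```

Because every input shares the same threshold in this sketch, the deterministic labels are strongly correlated across inputs, which is in line with the weaker aggregate output approximation noted in the abstract.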
