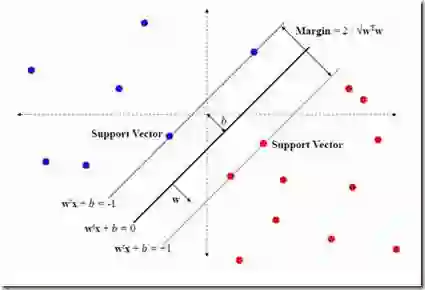

We provide a thorough treatment of one-class classification with hyperparameter optimisation for five data descriptors: Support Vector Machine (SVM), Nearest Neighbour Distance (NND), Localised Nearest Neighbour Distance (LNND), Local Outlier Factor (LOF) and Average Localised Proximity (ALP). The hyperparameters of SVM and LOF have to be optimised through cross-validation, while NND, LNND and ALP allow an efficient form of leave-one-out validation and the reuse of a single nearest-neighbour query. We experimentally evaluate the effect of hyperparameter optimisation on 246 classification problems drawn from 50 datasets. From a selection of optimisation algorithms, the recent Malherbe-Powell proposal optimises the hyperparameters of all data descriptors most efficiently. We calculate the increase in test AUROC and the amount of overfitting as a function of the number of hyperparameter evaluations. After 50 evaluations, ALP and SVM significantly outperform LOF, NND and LNND, and LOF and NND outperform LNND. The performance of ALP and SVM is comparable, but ALP can be optimised more efficiently and therefore constitutes a good default choice. Alternatively, using validation AUROC as a selection criterion between ALP and SVM gives the best overall result, while NND is the least computationally demanding option. We thus end up with a clear trade-off between three choices, allowing practitioners to make an informed decision.
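To illustrate what reusing a single nearest-neighbour query can look like, here is a minimal sketch for NND only. It rests on assumptions that the abstract does not confirm: the score of an instance is taken to be the Euclidean distance to its k-th nearest target instance, k is the only hyperparameter, and validation AUROC is computed against a few labelled outliers. The function names (`sorted_distances`, `auroc`, `loo_auroc_per_k`) and the toy data are hypothetical. This is not the authors' implementation.

```python
import math


def sorted_distances(point, reference, k_max, skip_index=None):
    """Ascending distances from `point` to its k_max nearest instances in `reference`.

    If `skip_index` is given, that reference instance is left out, which is
    how a target instance is scored without seeing itself (leave-one-out).
    """
    dists = sorted(
        math.dist(point, ref)
        for i, ref in enumerate(reference)
        if i != skip_index
    )
    return dists[:k_max]


def auroc(target_scores, outlier_scores):
    """Fraction of (target, outlier) pairs where the outlier scores higher; ties count half."""
    wins = 0.0
    for t in target_scores:
        for o in outlier_scores:
            if o > t:
                wins += 1.0
            elif o == t:
                wins += 0.5
    return wins / (len(target_scores) * len(outlier_scores))


def loo_auroc_per_k(targets, outliers, k_max):
    """Validation AUROC of NND for every k in 1..k_max.

    Each instance is queried once for its k_max nearest target neighbours;
    the k-th entry of that one result serves as the NND score for each k.
    Requires len(targets) > k_max.
    """
    target_d = [
        sorted_distances(x, targets, k_max, skip_index=i)
        for i, x in enumerate(targets)
    ]
    outlier_d = [sorted_distances(x, targets, k_max) for x in outliers]
    return {
        k: auroc([d[k - 1] for d in target_d], [d[k - 1] for d in outlier_d])
        for k in range(1, k_max + 1)
    }


# Toy data (hypothetical): four target instances and two obvious outliers.
targets = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
outliers = [(5.0, 5.0), (6.0, 6.0)]
scores = loo_auroc_per_k(targets, outliers, k_max=2)
best_k = max(scores, key=scores.get)
```

The point of the sketch is that evaluating another value of k costs only a list lookup, whereas a descriptor that must be refitted for every hyperparameter value needs a full cross-validation run per evaluation. A practical version would replace the brute-force search with a spatial index.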
