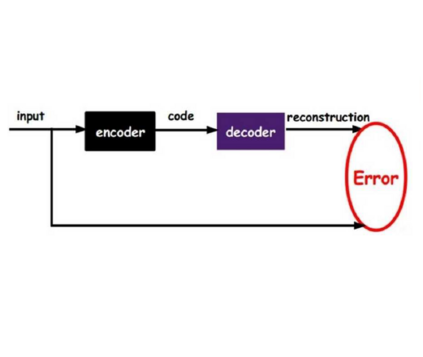

Autoencoders and their variants are among the most widely used models in representation learning and generative modeling. However, autoencoder-based models usually assume that the learned representations are i.i.d. and fail to capture the correlations between the data samples. To address this issue, we propose a novel Sparse Gaussian Process Bayesian Autoencoder (SGPBAE) model in which we impose fully Bayesian sparse Gaussian Process priors on the latent space of a Bayesian Autoencoder. We perform posterior estimation for this model via stochastic gradient Hamiltonian Monte Carlo. We evaluate our approach qualitatively and quantitatively on a wide range of representation learning and generative modeling tasks and show that our approach consistently outperforms multiple alternatives relying on Variational Autoencoders.
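The abstract names stochastic gradient Hamiltonian Monte Carlo (SGHMC) as the posterior-estimation method but gives no details of the update. As a rough illustration only, the sketch below implements the generic SGHMC update from Chen et al. (2014) in its (θ, v) parametrization and applies it to a toy one-dimensional conjugate Gaussian model whose exact posterior is known, so the samples can be checked. This is not the SGPBAE implementation. The function name `sghmc`, the step size `eta`, the friction `alpha`, the noise estimate `beta_hat = 0`, the batch size, and the toy model are all hypothetical choices made for the example.

```python
import math
import random
from statistics import fmean, pvariance


def sghmc(grad_u, theta0, n_steps, eta=1e-5, alpha=0.1, beta_hat=0.0, seed=0):
    """Generic SGHMC for a scalar parameter (illustrative sketch).

    Uses the (theta, v) parametrization of Chen et al. (2014):
        theta <- theta + v
        v     <- (1 - alpha) * v - eta * grad_u(theta) + N(0, 2 * (alpha - beta_hat) * eta)
    grad_u(theta, rng) must return a noisy but unbiased estimate of dU/dtheta,
    where U is the negative log posterior (up to a constant).
    """
    rng = random.Random(seed)
    noise_sd = math.sqrt(2.0 * (alpha - beta_hat) * eta)
    theta, v = theta0, 0.0
    samples = []
    for _ in range(n_steps):
        theta += v  # position update with the current momentum
        # Momentum update: friction, stochastic gradient, injected Gaussian noise.
        v = (1.0 - alpha) * v - eta * grad_u(theta, rng) + rng.gauss(0.0, noise_sd)
        samples.append(theta)
    return samples


# Hypothetical toy model (not the SGPBAE model):
#   prior      mu  ~ N(0, 1)
#   likelihood x_i ~ N(mu, 1), i = 1..n
# Potential U(mu) = mu^2 / 2 + sum_i (x_i - mu)^2 / 2.
data_rng = random.Random(42)
n, batch = 100, 20
data = [data_rng.gauss(1.5, 1.0) for _ in range(n)]


def minibatch_grad_u(mu, rng):
    # Unbiased estimate of dU/dmu from a minibatch drawn with replacement:
    # the (n / batch) factor rescales the minibatch sum to the full data set.
    batch_x = rng.choices(data, k=batch)
    return mu - (n / batch) * sum(x - mu for x in batch_x)


# Run the sampler and discard the first 5,000 draws as burn-in.
samples = sghmc(minibatch_grad_u, theta0=0.0, n_steps=50_000)[5_000:]

# Exact conjugate posterior for comparison: N(sum(x) / (n + 1), 1 / (n + 1)).
post_mean = sum(data) / (n + 1)
post_var = 1.0 / (n + 1)
print(f"SGHMC mean {fmean(samples):.3f} vs exact {post_mean:.3f}")
print(f"SGHMC var  {pvariance(samples):.4f} vs exact {post_var:.4f}")
```

Setting `beta_hat = 0` ignores the minibatch gradient noise, which slightly inflates the sampled variance; keeping `eta` small relative to the curvature of the potential (here `n + 1`) should keep that bias small in this toy setting. In a model like the one described above, the same update would be applied to every sampled quantity (for example network weights and latent variables), with `grad_u` computed from a minibatch of data.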

翻译:自动编码器及其变体是代表学习和基因模型中最广泛使用的模型之一,然而,基于自动编码器的模型通常假定,所学的表示方式是i.d.d.,未能捕捉数据样品之间的相互关系。为解决这一问题,我们提议了一个新型的Sparse Gaussian process Bayssian Autoencoder(SGPBAE)模型,我们在这个模型中将巴伊西亚稀疏高斯进程前科完全强加给巴伊西亚自动编码器的潜在空间。我们通过随机梯度梯度的汉密尔顿蒙特·蒙特卡洛(Hamilton Monte Carlo)对这一模型进行后科估计。我们从质量和数量上评价了我们的做法,评估了广泛的代表学习和基因模型任务,并表明我们的方法始终比多种替代方法要优于多功能自动编码器。