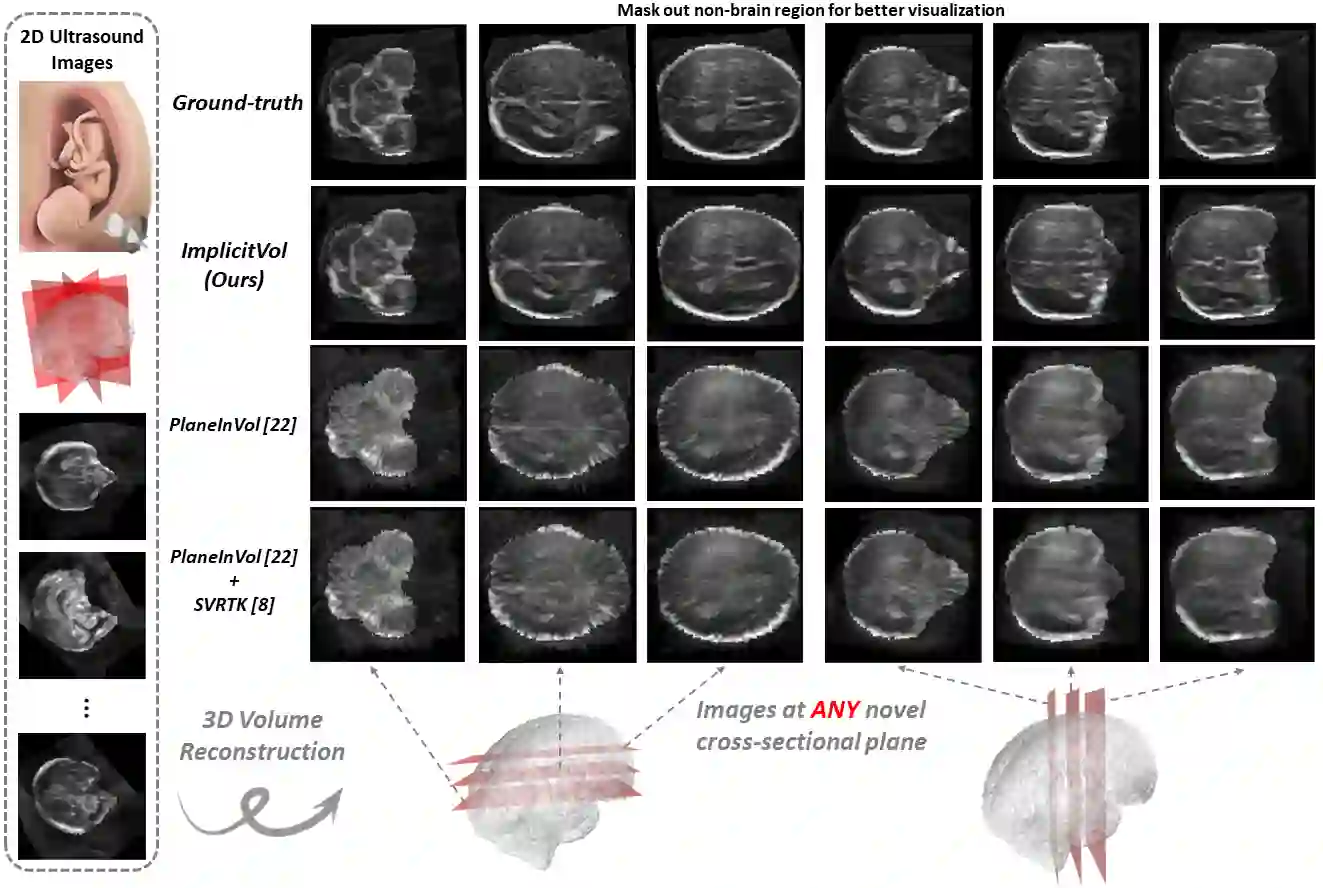

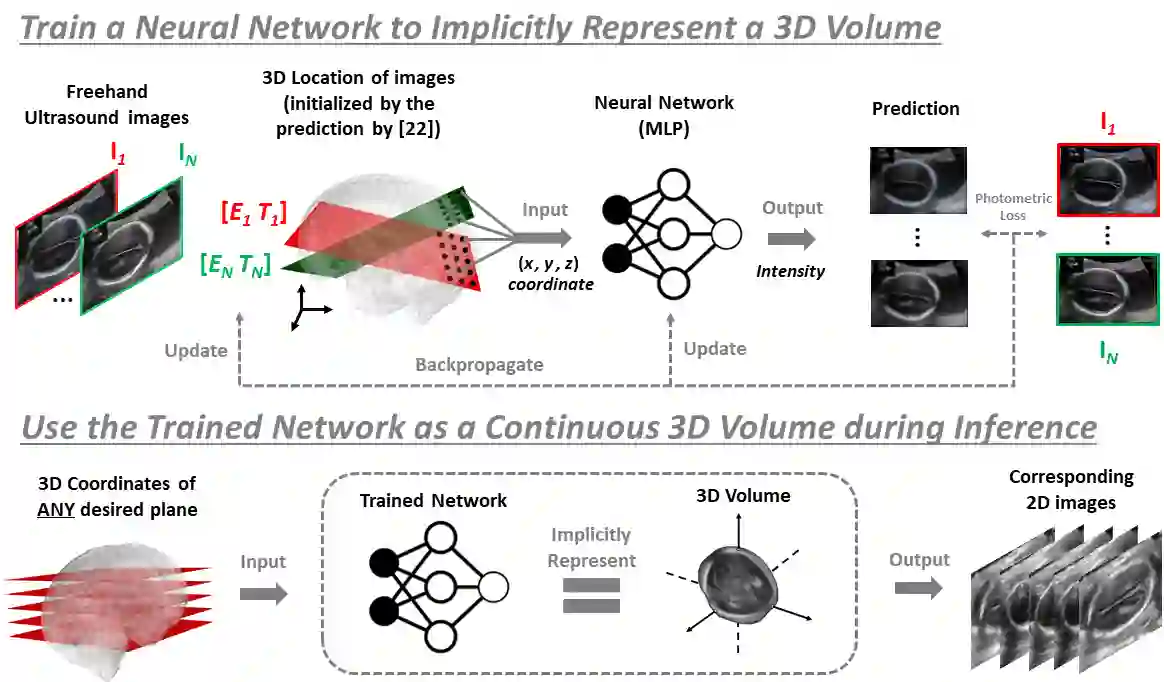

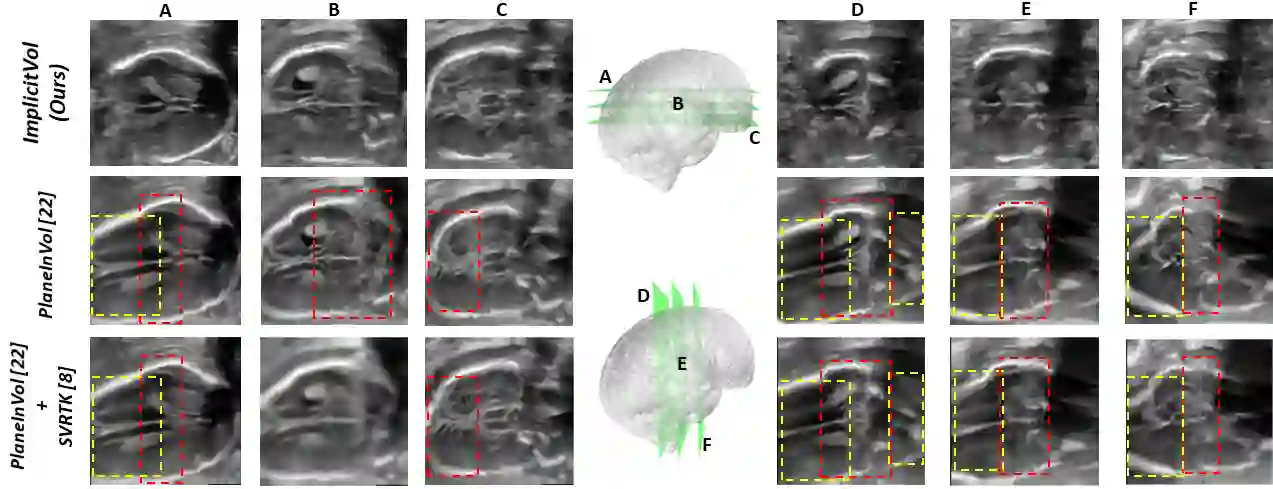

The objective of this work is to achieve sensorless reconstruction of a 3D volume from a set of 2D freehand ultrasound images using a deep implicit representation. In contrast to the conventional approach of representing a 3D volume as a discrete voxel grid, we parameterize it as the zero level-set of a continuous function, i.e. we implicitly represent the 3D volume as a mapping from spatial coordinates to the corresponding intensity values. Our proposed model, termed ImplicitVol, takes a set of 2D scans and their estimated locations in 3D as input, jointly refining the estimated 3D locations and learning a full reconstruction of the 3D volume. When tested on real 2D ultrasound images, novel cross-sectional views sampled from ImplicitVol show significantly better visual quality than those sampled from existing reconstruction approaches, outperforming them by over 30% in NCC and SSIM between the output and the ground truth on the 3D volume test data. The code will be made publicly available.
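To make the idea of an implicit volume concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes a small coordinate MLP with random Fourier features as the continuous function f(x, y, z) → intensity (the paper's actual architecture may differ), uses untrained random weights in place of learned ones, and shows how a novel cross-sectional view is rendered by querying f on a posed 2D plane. The helper names `make_implicit_volume`, `rotation_matrix`, `sample_plane` and `ncc` are hypothetical. Joint refinement of the plane poses and network weights would require gradients from an autodiff framework and is not shown.

```python
import numpy as np

def make_implicit_volume(seed=0, n_freq=16, hidden=32):
    """Hypothetical, untrained coordinate MLP f(xyz) -> intensity.

    Weights are random here; in a real model they would be fitted to the
    2D scans. Random Fourier features help an MLP represent fine detail.
    """
    rng = np.random.default_rng(seed)
    B = rng.normal(scale=3.0, size=(3, n_freq))        # Fourier feature matrix
    W1 = rng.normal(scale=0.5, size=(2 * n_freq, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(scale=0.5, size=(hidden, 1))

    def f(xyz):
        # xyz: (N, 3) spatial coordinates -> (N,) intensities in [0, 1]
        proj = 2.0 * np.pi * xyz @ B
        feats = np.concatenate([np.sin(proj), np.cos(proj)], axis=-1)
        h = np.maximum(feats @ W1 + b1, 0.0)           # ReLU hidden layer
        return 1.0 / (1.0 + np.exp(-(h @ W2)[:, 0]))   # sigmoid output
    return f

def rotation_matrix(rx, ry, rz):
    """Rotation from Euler angles in radians, composed as Rz @ Ry @ Rx."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    Rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    Ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    Rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return Rz @ Ry @ Rx

def sample_plane(f, angles, translation, size=64, extent=1.0):
    """Render a 2D cross-section: a size x size grid on the plane z = 0 is
    rotated and translated into the volume, then f is queried pointwise."""
    u = np.linspace(-extent / 2, extent / 2, size)
    uu, vv = np.meshgrid(u, u, indexing="ij")
    plane = np.stack([uu, vv, np.zeros_like(uu)], axis=-1).reshape(-1, 3)
    R = rotation_matrix(*angles)
    xyz = plane @ R.T + np.asarray(translation)        # R p + t for each point p
    return f(xyz).reshape(size, size)

def ncc(a, b, eps=1e-8):
    """Normalized cross-correlation between two images, in [-1, 1]."""
    a = a - a.mean()
    b = b - b.mean()
    return float((a * b).sum() / (np.sqrt((a * a).sum() * (b * b).sum()) + eps))

f = make_implicit_volume(seed=0)
view = sample_plane(f, angles=(0.3, 0.0, 0.1), translation=(0.0, 0.0, 0.2))
print(view.shape)  # (64, 64)
```

Because the volume is a continuous function rather than a voxel grid, any plane pose can be sampled at any resolution without interpolating between stored voxels. NCC is invariant to affine intensity changes, which makes it a reasonable similarity score between a sampled view and a ground-truth slice; for SSIM, scikit-image provides `skimage.metrics.structural_similarity`.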