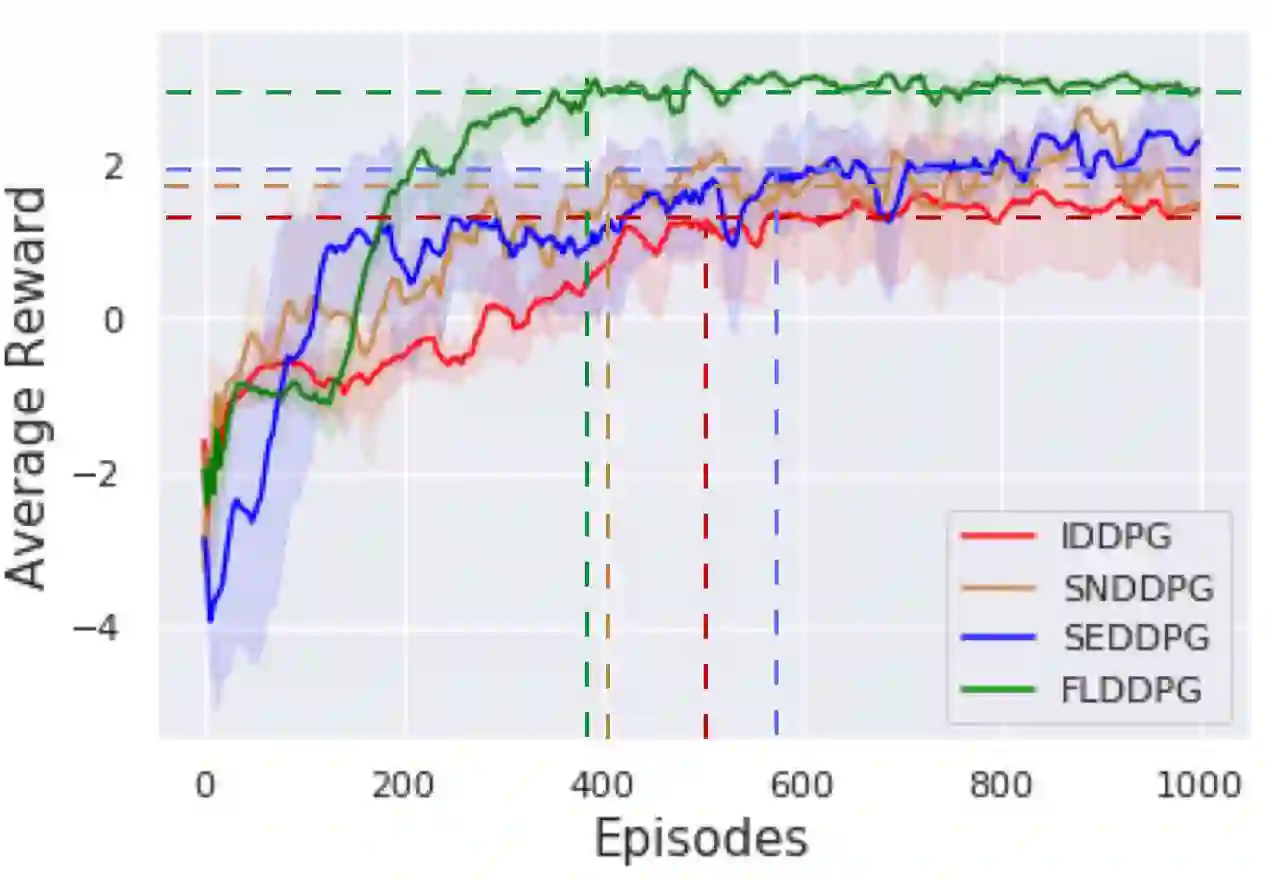

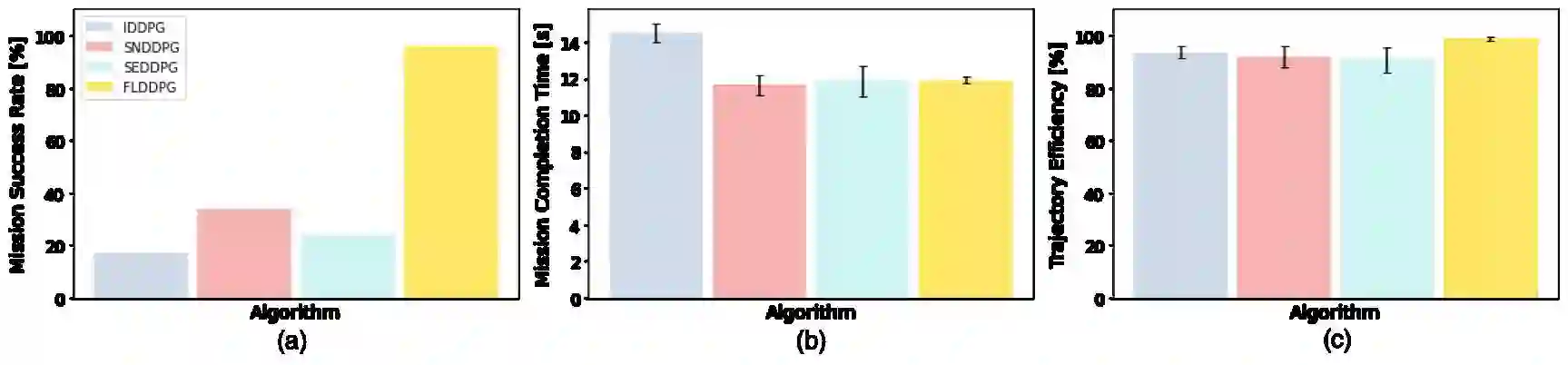

Recent advances in Deep Reinforcement Learning (DRL) have contributed to robotics by enabling automatic controller design. Automatic controller design is a crucial approach for swarm robotic systems, which require a more complex controller than a single-robot system to produce a desired collective behaviour. Although DRL-based controller design has proven effective, its reliance on a central training server is a critical problem in real-world environments where robot-server communication is unstable or limited. We propose a novel Federated Learning (FL) based DRL training strategy for swarm robotic applications. Because FL shares only neural network model weights, not local data samples, it reduces the number of robot-server communications, and the proposed strategy therefore reduces the reliance on the central server during DRL controller training. Experimental results from a collective learning scenario showed that the proposed FL-based strategy reduced the number of communications by a factor of at least 1600 and even increased the navigation success rate of the trained controller by 2.8 times, compared with baseline strategies that share a central server. The results suggest that the proposed strategy can efficiently train swarm robotic systems in real-world environments with limited robot-server communication, e.g. agri-robotics, underwater settings, and damaged nuclear facilities.
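To make the weight-sharing idea concrete, the following is a minimal sketch of plain, unweighted federated averaging. It is an assumption that the aggregation step resembles this; the abstract does not state which aggregation rule the proposed strategy uses. The names `federated_average`, `robots`, and the layer keys are hypothetical and chosen only for illustration.

```python
from typing import Dict, List

# Hypothetical representation: each model is a dict of layer name -> flat weights.
Weights = Dict[str, List[float]]


def federated_average(local_weights: List[Weights]) -> Weights:
    """Return the element-wise mean of the robots' model weights."""
    if not local_weights:
        raise ValueError("need at least one local model")
    n = len(local_weights)
    averaged: Weights = {}
    for name in local_weights[0]:
        # Collect the same layer from every robot and average position by position.
        layers = [w[name] for w in local_weights]
        averaged[name] = [sum(vals) / n for vals in zip(*layers)]
    return averaged


# Three robots train locally and upload only their weights, never their samples.
robots = [
    {"policy": [0.0, 1.0], "bias": [0.5]},
    {"policy": [2.0, 3.0], "bias": [1.5]},
    {"policy": [4.0, 5.0], "bias": [1.0]},
]
global_weights = federated_average(robots)
print(global_weights)  # {'policy': [2.0, 3.0], 'bias': [1.0]}
```

In a scheme like this, each robot contacts the server once per aggregation round rather than once per collected sample, which is the general mechanism by which sharing weights lowers the communication count.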
