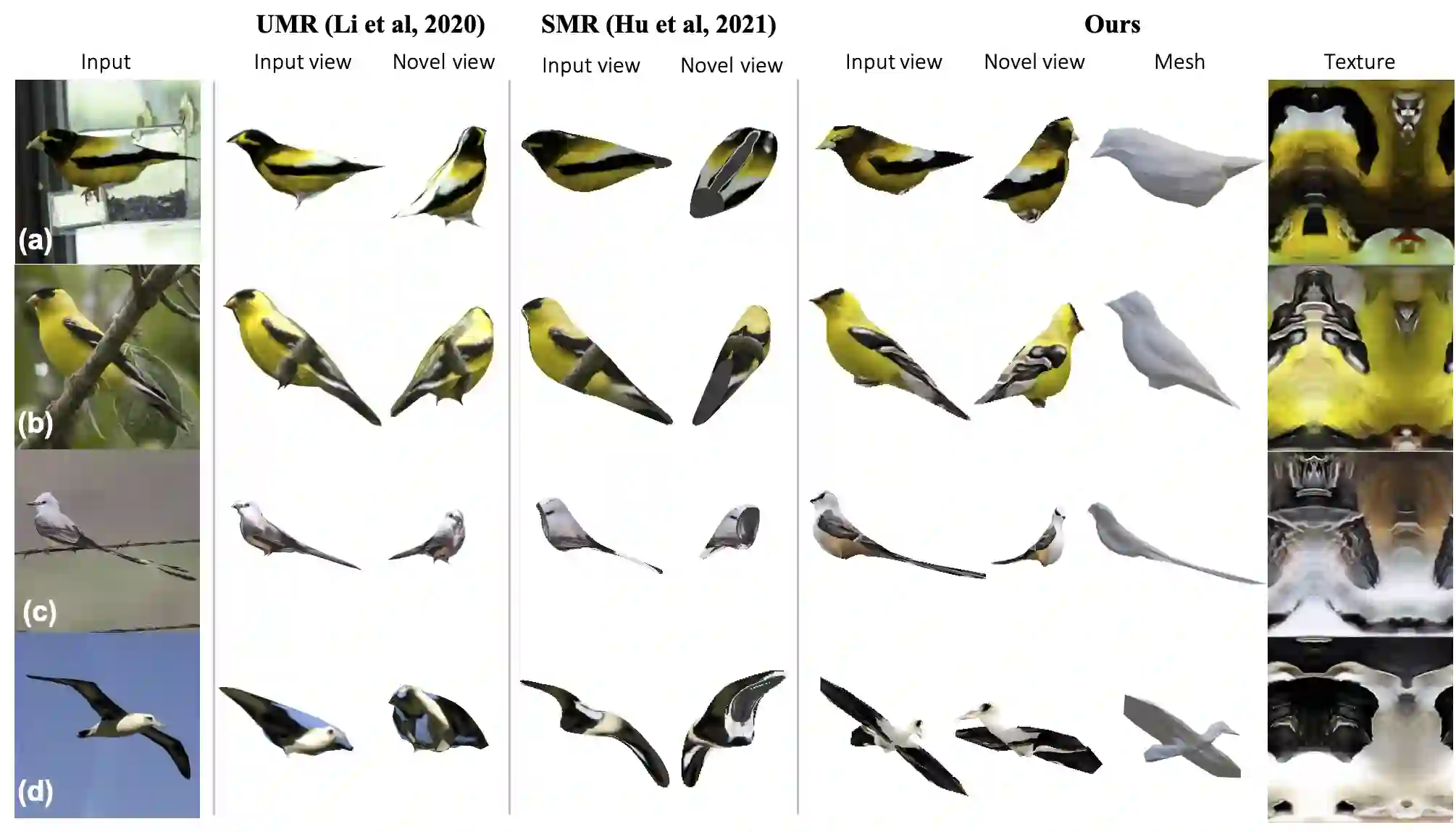

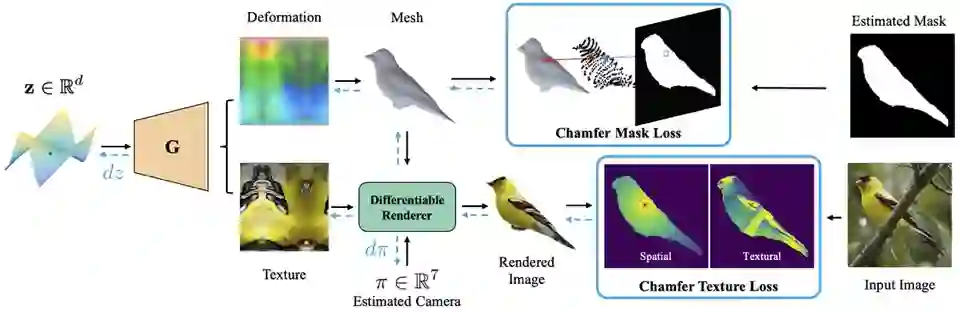

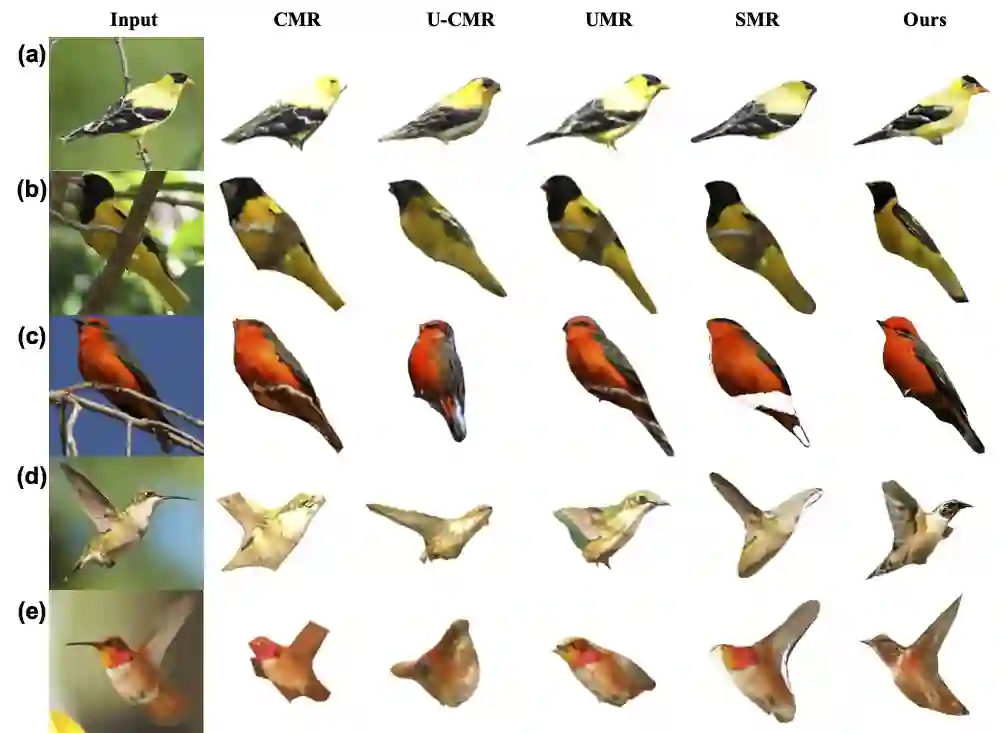

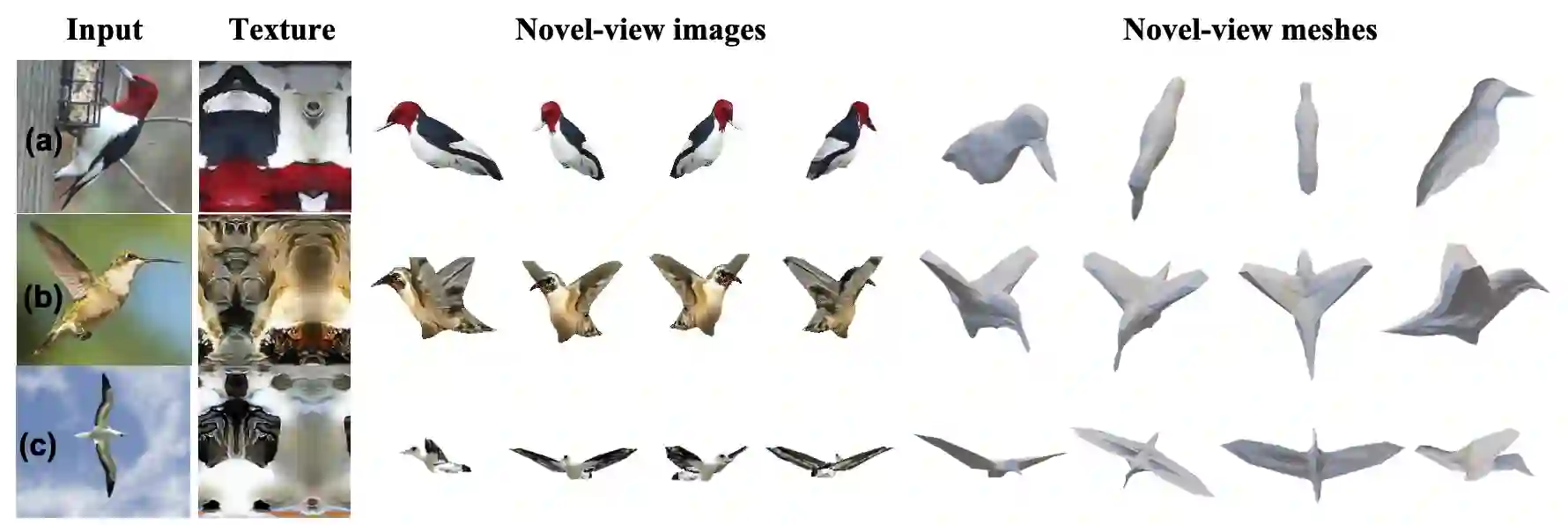

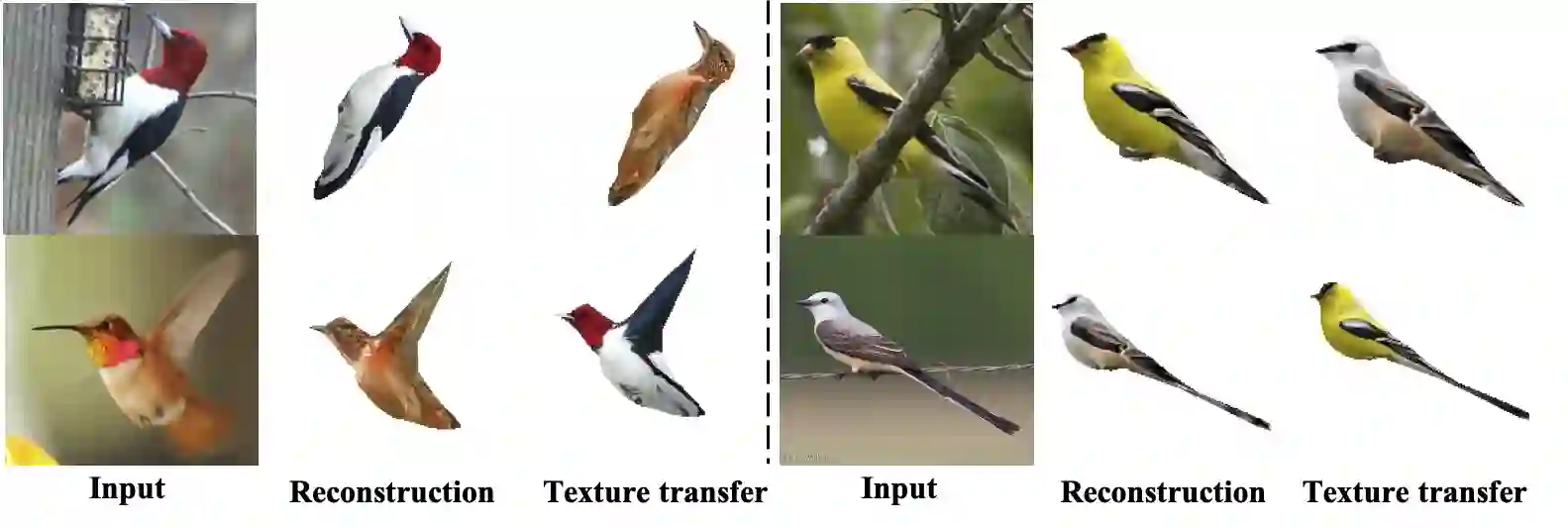

Recovering a textured 3D mesh from a monocular image is highly challenging, particularly for in-the-wild objects that lack 3D ground truth. In this work, we present MeshInversion, a novel framework that improves reconstruction by exploiting the generative prior of a 3D GAN pre-trained for textured 3D mesh synthesis. Reconstruction is achieved by searching the latent space of the 3D GAN for the code whose generated mesh best resembles the target mesh, in accordance with the single-view observation. Since the pre-trained GAN encapsulates rich 3D semantics in terms of mesh geometry and texture, searching within the GAN manifold naturally regularizes the realness and fidelity of the reconstruction. Importantly, this regularization is applied directly in 3D space, providing crucial guidance for mesh parts that are unobserved in the 2D space. Experiments on standard benchmarks show that our framework obtains faithful 3D reconstructions with consistent geometry and texture across both observed and unobserved parts. Moreover, it generalizes well to meshes that are less commonly seen, such as the extended articulation of deformable objects. Code is released at https://github.com/junzhezhang/mesh-inversion
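The idea of reconstructing by searching a frozen generator's latent space under a partial observation can be illustrated with a toy sketch. This is not the MeshInversion implementation: the linear map `W`, the functions `generate` and `invert`, the binary `mask`, and all numeric values are hypothetical stand-ins for the pre-trained 3D GAN, the latent search, and the single-view observation. The sketch only shows why a generator prior can fill in entries that the loss never sees.

```python
# Toy illustration of inversion under a partial observation.
# Assumption: a fixed linear "generator" stands in for the pre-trained 3D GAN,
# and a binary mask stands in for what a single view can observe.

# Hypothetical frozen generator weights: 2-D latent code -> 4-D "shape" vector.
W = [
    [1.0, 0.0],
    [0.0, 1.0],
    [1.0, 1.0],
    [1.0, -1.0],
]


def generate(z):
    """Frozen generator G(z) = W z."""
    return [sum(w * zi for w, zi in zip(row, z)) for row in W]


def invert(target, mask, steps=500, lr=0.1):
    """Gradient descent on z, minimizing squared error on observed entries only."""
    z = [0.0, 0.0]
    for _ in range(steps):
        out = generate(z)
        # Residual is zeroed wherever the entry is unobserved (mask == 0).
        residual = [m * (o - t) for m, o, t in zip(mask, out, target)]
        # Gradient of sum(residual**2) with respect to z is 2 * W^T residual.
        grad = [
            2.0 * sum(W[i][j] * residual[i] for i in range(len(W)))
            for j in range(len(z))
        ]
        z = [zj - lr * gj for zj, gj in zip(z, grad)]
    return z


z_true = [0.5, -1.5]
target = generate(z_true)      # the full "shape"; only part of it is observed
mask = [1, 1, 0, 0]            # the last two entries play the unobserved parts

z_hat = invert(target, mask)
recon = generate(z_hat)        # the generator also produces the unobserved entries
```

In this toy setting the loss touches only the first two entries, yet `recon` also matches the last two, because every output of the generator lies on the same low-dimensional manifold. In the setting the abstract describes, the generator is a nonlinear GAN and the observation is an image, so the search is harder and this guarantee does not carry over directly.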
