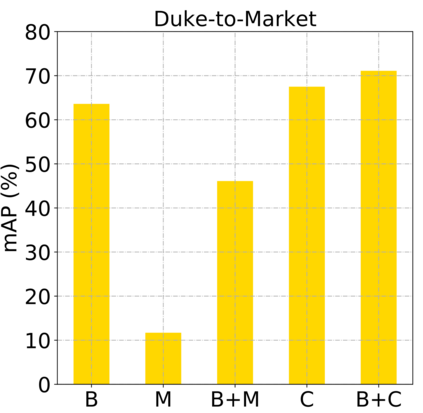

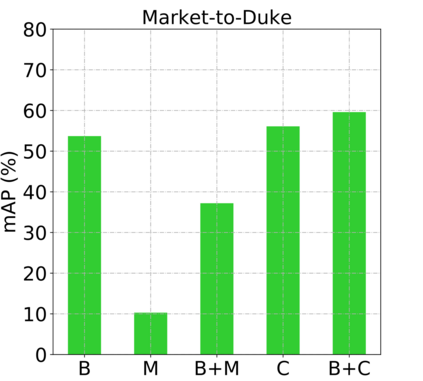

In this work, we address unsupervised domain adaptation for person re-ID, where annotations are available for the source domain but not for the target domain. Previous methods typically follow a two-stage optimization pipeline, in which the network is first pre-trained on the source domain and then fine-tuned on the target domain with pseudo labels created by feature clustering. Such methods suffer from two main limitations: (1) label noise may hinder the learning of discriminative features for recognizing target classes, and (2) the domain gap may hinder knowledge transfer from source to target. We propose three technical schemes to alleviate these issues. First, we propose a cluster-wise contrastive learning algorithm (CCL) that iteratively optimizes feature learning and cluster refinement to learn noise-tolerant representations in an unsupervised manner. Second, we adopt a progressive domain adaptation (PDA) strategy to gradually mitigate the domain gap between source and target data. Third, we propose Fourier augmentation (FA), which further maximizes the class separability of re-ID models by imposing extra constraints in the Fourier space. We observe that these schemes facilitate the learning of discriminative feature representations. Experiments demonstrate that our method consistently achieves notable improvements over state-of-the-art unsupervised re-ID methods on multiple benchmarks, e.g., surpassing MMT by 8.1\%, 9.9\%, 11.4\% and 11.1\% mAP on the Market-to-Duke, Duke-to-Market, Market-to-MSMT and Duke-to-MSMT tasks, respectively.
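To make the idea of a cluster-wise contrastive objective concrete, the following is a minimal sketch, not the formulation used in this work. It assumes that pseudo labels have already been produced by some clustering step, that each cluster is summarized by the normalized mean of its features, and that the loss is an InfoNCE-style softmax over cluster centroids. The function names (`cluster_centroids`, `cluster_contrastive_loss`) and the temperature value are illustrative assumptions.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cluster_centroids(features, pseudo_labels):
    """Return one unit-length centroid per pseudo label.

    Assumption: a centroid is the mean of the L2-normalized features
    assigned to that cluster, re-normalized to unit length.
    """
    sums, counts = {}, {}
    for feat, label in zip(features, pseudo_labels):
        feat = l2_normalize(feat)
        if label not in sums:
            sums[label] = [0.0] * len(feat)
            counts[label] = 0
        sums[label] = [s + x for s, x in zip(sums[label], feat)]
        counts[label] += 1
    return {
        label: l2_normalize([s / counts[label] for s in total])
        for label, total in sums.items()
    }


def cluster_contrastive_loss(feature, pseudo_label, centroids, temperature=0.05):
    """InfoNCE-style loss over centroids (hypothetical formulation).

    The feature is pulled toward the centroid of its own cluster and
    pushed away from all other centroids.
    """
    feat = l2_normalize(feature)
    logits = {
        label: sum(a * b for a, b in zip(feat, centroid)) / temperature
        for label, centroid in centroids.items()
    }
    # Numerically stable log-sum-exp over all cluster logits.
    max_logit = max(logits.values())
    log_denominator = max_logit + math.log(
        sum(math.exp(v - max_logit) for v in logits.values())
    )
    return log_denominator - logits[pseudo_label]


if __name__ == "__main__":
    # Toy 2-D features with two pseudo-labeled clusters.
    feats = [[1.0, 0.1], [0.9, 0.0], [0.0, 1.0], [0.1, 0.9]]
    labels = [0, 0, 1, 1]
    cents = cluster_centroids(feats, labels)
    print(cluster_contrastive_loss(feats[0], 0, cents))
```

In an iterative scheme of the kind the abstract describes, the clustering step and a loss like this would alternate: features are re-clustered to refresh the pseudo labels, and the refreshed centroids are then used for the next round of feature learning.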
