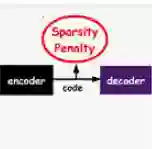

Real-world applications often require learning continuously from a stream of data under ever-changing conditions. When trying to learn from such non-stationary data, deep neural networks (DNNs) suffer catastrophic forgetting of previously learned information. Among the common approaches to mitigating catastrophic forgetting, rehearsal-based methods have proven effective. However, they remain prone to forgetting due to task interference, since all parameters respond to all tasks. To counter this, we take inspiration from sparse coding in the brain and introduce dynamic modularity and sparsity (Dynamos) for rehearsal-based general continual learning. In this setup, the DNN learns to respond to stimuli by activating relevant subsets of neurons. We demonstrate the effectiveness of Dynamos on multiple datasets under challenging continual learning evaluation protocols. Finally, we show that our method learns modular, specialized representations while maintaining reusability: it activates subsets of neurons whose overlaps correspond to the similarity of the stimuli.
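
The abstract does not say how Dynamos decides which neurons to activate. As a rough illustration of what "activating relevant subsets of neurons" and "overlaps corresponding to the similarity of stimuli" can mean, the sketch below swaps in a simple k-winners-take-all rule (an assumption, not the Dynamos mechanism) on a hypothetical random layer, then measures how much the active-neuron sets of two inputs overlap using Jaccard similarity. The helper names `make_layer`, `active_units`, and `overlap` are illustrative only.

```python
import random
from typing import List, Set


def make_layer(n_in: int, n_out: int, seed: int = 0) -> List[List[float]]:
    """Hypothetical stand-in for one trained layer: random Gaussian weights."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def active_units(x: List[float], weights: List[List[float]], k: int) -> Set[int]:
    """k-winners-take-all (assumed stand-in): keep the k units with the
    largest pre-activation. Every other unit is gated off for this input,
    so which units fire depends on the stimulus rather than being fixed."""
    pre = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    ranked = sorted(range(len(pre)), key=lambda i: pre[i], reverse=True)
    return set(ranked[:k])


def overlap(a: Set[int], b: Set[int]) -> float:
    """Jaccard overlap of two active-unit sets (1.0 = same units, 0.0 = disjoint)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


rng = random.Random(1)
W = make_layer(n_in=16, n_out=64, seed=0)
K = 8  # 8 of 64 units active per input, i.e. 12.5% activation density

base = [rng.gauss(0.0, 1.0) for _ in range(16)]
similar = [v + 0.1 * rng.gauss(0.0, 1.0) for v in base]  # slightly perturbed stimulus
unrelated = [rng.gauss(0.0, 1.0) for _ in range(16)]     # independent stimulus

a = active_units(base, W, K)
print("similar  :", overlap(a, active_units(similar, W, K)))
print("unrelated:", overlap(a, active_units(unrelated, W, K)))
```

With a small perturbation, the overlap will typically be much higher than for an unrelated input, loosely mirroring the behavior the abstract describes; the exact values depend on the random seeds. In a trained network, the learned weights (and whatever gating Dynamos actually uses) rather than a fixed random layer would determine which neurons are shared.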
