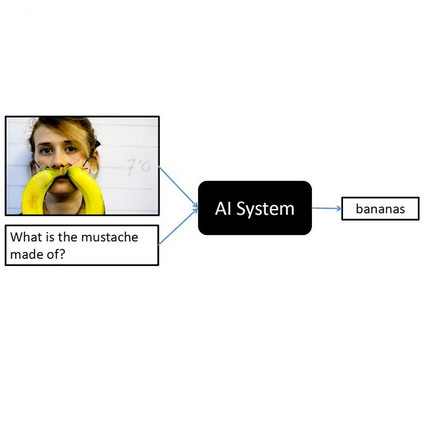

Urdu scene text detection, recognition, and Visual Question Answering (VQA) technologies are crucial for advancing accessibility, information retrieval, and linguistic diversity in digital content, since they enable better understanding of and interaction with Urdu-language visual data. This work seeks to bridge the gap between textual and visual comprehension. We propose a new multi-task Urdu scene text dataset comprising over 1000 natural scene images, which can be used for text detection, recognition, and VQA tasks. We provide fine-grained annotations for text instances, addressing the limitations of previous datasets in handling arbitrary-shaped text. By incorporating additional annotation points, the dataset supports the development and assessment of methods that can handle the diverse text layouts, intricate shapes, and non-standard orientations commonly encountered in real-world scenes. In addition, the VQA annotations make it the first benchmark for Urdu text VQA methods, which can promote the development of Urdu scene text understanding. The proposed dataset is available at: https://github.com/Hiba-MeiRuan/Urdu-VQA-Dataset-/tree/main
