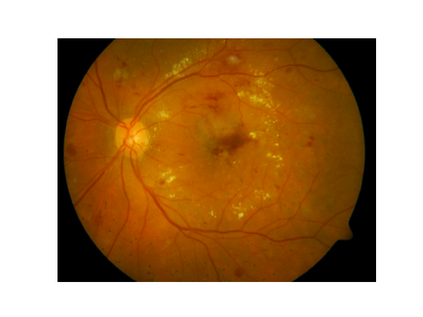

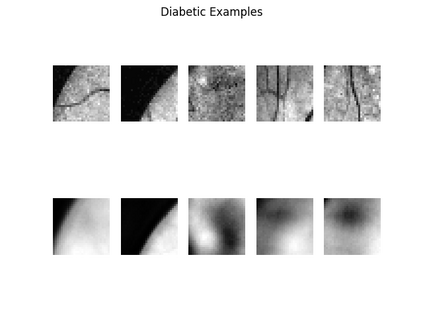

A key component of the success of deep learning is the availability of massive amounts of training data. Building and annotating large datasets for medical image classification is currently a bottleneck for many applications. Capsule networks were recently proposed to address some shortcomings of Convolutional Neural Networks (ConvNets). In this work, we compare the behavior of capsule networks and ConvNets under two dataset constraints typical of medical image analysis: small amounts of annotated data and class imbalance. We run our experiments on MNIST, Fashion-MNIST, and publicly available medical datasets (histological and retinal images). Our results suggest that capsule networks can be trained with less data for the same or better performance and are more robust to imbalanced class distributions, which makes our approach very promising for the medical imaging community.
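The two constraints studied here, a small annotated training set and class imbalance, can be emulated on a balanced benchmark such as MNIST by subsampling each class. The following is a minimal sketch under stated assumptions, not the authors' experimental protocol: the function name `make_constrained_subset` and its parameters are hypothetical, and labels are assumed to be integer class IDs for the full training set.

```python
import random
from collections import Counter


def make_constrained_subset(labels, per_class, minority_classes=(),
                            minority_fraction=1.0, seed=0):
    """Return sorted indices of a reduced, optionally imbalanced subset.

    Hypothetical helper for illustration only.
    labels: sequence of integer class labels for the full training set.
    per_class: number of examples kept for each non-minority class
        (small values emulate scarce annotations).
    minority_classes: classes to under-represent (emulates imbalance).
    minority_fraction: fraction of `per_class` kept for each minority class.
    seed: RNG seed so that the subset is reproducible.
    """
    rng = random.Random(seed)

    # Group example indices by their class label.
    by_class = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)

    selected = []
    for y, idxs in sorted(by_class.items()):
        n = per_class
        if y in minority_classes:
            # Keep at least one example so the class does not vanish.
            n = max(1, int(per_class * minority_fraction))
        n = min(n, len(idxs))  # cannot sample more than the class contains
        selected.extend(rng.sample(idxs, n))

    selected.sort()
    return selected


# Usage: 10 balanced classes of 100 examples each; keep 50 per class,
# but only 20% of that (10 examples) for class 3.
labels = [i % 10 for i in range(1000)]
subset = make_constrained_subset(labels, per_class=50,
                                 minority_classes={3}, minority_fraction=0.2)
print(Counter(labels[i] for i in subset))
```

Sweeping `per_class` downward probes the small-data regime, while sweeping `minority_fraction` for one or more classes probes sensitivity to imbalance; the same subset indices can then be fed to both a capsule network and a ConvNet so the comparison uses identical training data.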

翻译:深层学习成功的一个关键要素是提供大量培训数据。为了解决医学图像分类问题而建立和批注大型数据集,如今已成为许多应用的瓶颈。最近,有人提议建立胶囊网络,以应对进化神经网络(ConvNets)的缺陷。在这项工作中,我们比较了在典型的医学图像分析数据集限制下胶囊网络与ConvNet的行为,即少量附加说明的数据和阶级平衡。我们评估了我们在MNIST、时装-MNIST和医学(神学和视网膜图像)公开提供的数据集方面的实验。我们的结果表明,可以用较少的数据对胶囊网络进行培训,使其具有相同或更好的性能,并且更能适应阶级分布不平衡的现象,这使得我们的方法对医学成像界很有希望。