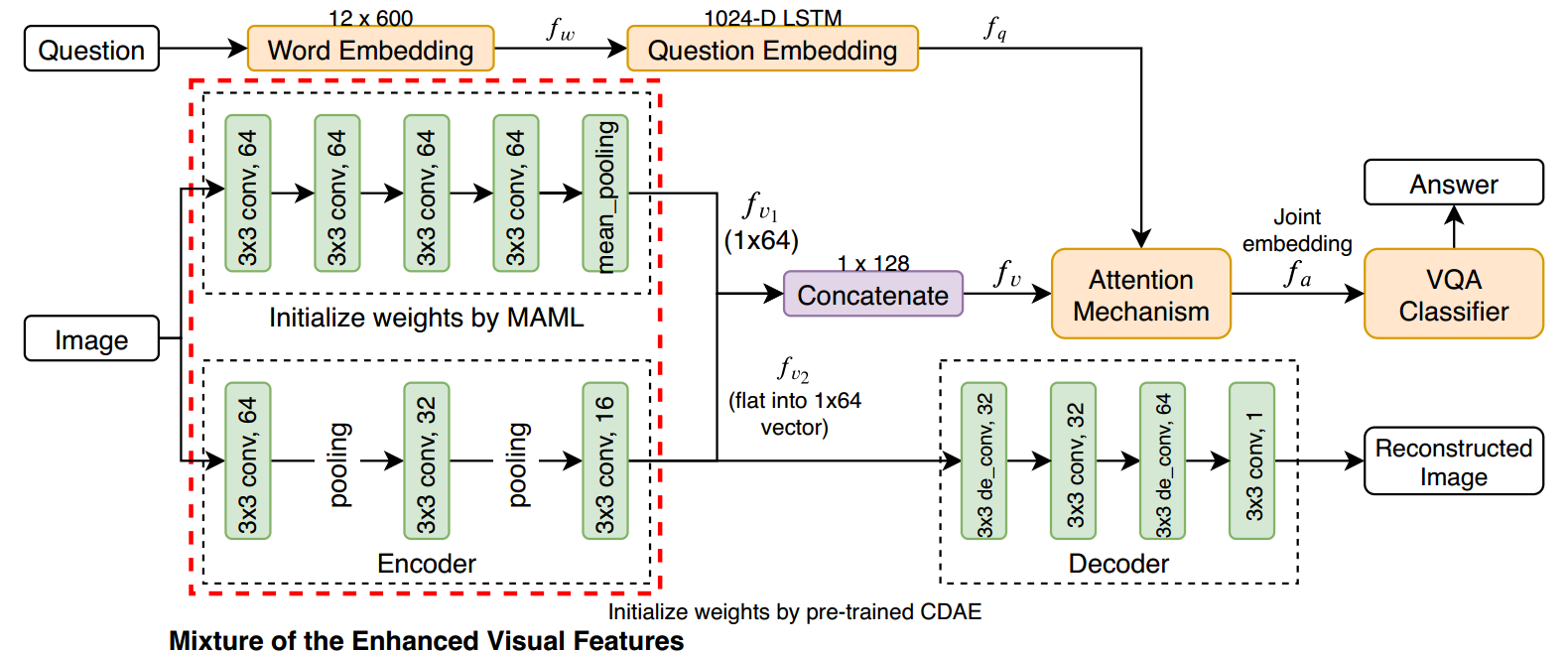

Traditional approaches to Visual Question Answering (VQA) require large amounts of labeled data for training. Unfortunately, such large-scale data is usually not available in the medical domain. In this paper, we propose a novel medical VQA framework that overcomes the labeled-data limitation. The proposed framework explores the use of an unsupervised Denoising Auto-Encoder (DAE) and supervised Meta-Learning. The DAE leverages large amounts of unlabeled images, while Meta-Learning learns meta-weights that adapt quickly to the VQA problem with limited labeled data. Together, these techniques allow the proposed framework to be trained efficiently on a small labeled training set. Experimental results show that the proposed method significantly outperforms state-of-the-art medical VQA methods.
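To make the unsupervised component concrete, the following is a minimal sketch of the denoising auto-encoder idea: corrupt an unlabeled input, then train an encoder and decoder to reconstruct the clean version. It is not the implementation used in this work. The linear layers, the Gaussian corruption, the toy 4-dimensional "images", and all names (`add_noise`, `dae_loss`, `train_dae`) are assumptions chosen for illustration, and the meta-learning component is not shown.

```python
import random


def add_noise(x, sigma, rng):
    """Corrupt an input vector with additive Gaussian noise (assumed noise model)."""
    return [v + rng.gauss(0.0, sigma) for v in x]


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def dae_loss(enc, dec, clean, noisy):
    """Mean squared error between the reconstruction of `noisy` and `clean`."""
    recon = matvec(dec, matvec(enc, noisy))
    return sum((r - c) ** 2 for r, c in zip(recon, clean)) / len(clean)


def train_dae(images, hidden=2, sigma=0.1, lr=0.05, epochs=300, seed=0):
    """Train a tiny linear denoising auto-encoder with per-sample gradient descent.

    No labels are used: the training target is the clean input itself.
    """
    rng = random.Random(seed)
    d = len(images[0])
    enc = [[rng.uniform(-0.1, 0.1) for _ in range(d)] for _ in range(hidden)]
    dec = [[rng.uniform(-0.1, 0.1) for _ in range(hidden)] for _ in range(d)]
    for _ in range(epochs):
        for clean in images:
            noisy = add_noise(clean, sigma, rng)
            code = matvec(enc, noisy)   # encoder output (latent feature)
            recon = matvec(dec, code)   # decoder output (reconstruction)
            # Gradient of the MSE loss with respect to the reconstruction.
            err = [2.0 * (r - c) / d for r, c in zip(recon, clean)]
            # Backpropagate to the latent code before updating the decoder.
            g_code = [sum(dec[i][j] * err[i] for i in range(d))
                      for j in range(hidden)]
            for i in range(d):
                for j in range(hidden):
                    dec[i][j] -= lr * err[i] * code[j]
            for j in range(hidden):
                for k in range(d):
                    enc[j][k] -= lr * g_code[j] * noisy[k]
    return enc, dec


if __name__ == "__main__":
    # Toy "unlabeled images": 4-dimensional vectors lying in a 2-D subspace.
    images = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 1.0, 1.0]]
    enc, dec = train_dae(images)
    print(sum(dae_loss(enc, dec, x, x) for x in images) / len(images))
```

In a pipeline of the kind described above, the trained encoder would serve as an image feature extractor learned without labels; how those features are combined with the meta-learned weights is not specified here and is left out of the sketch.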
