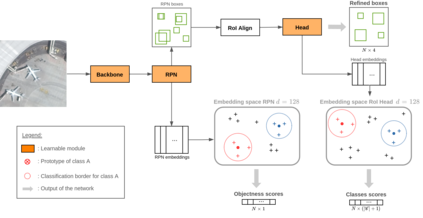

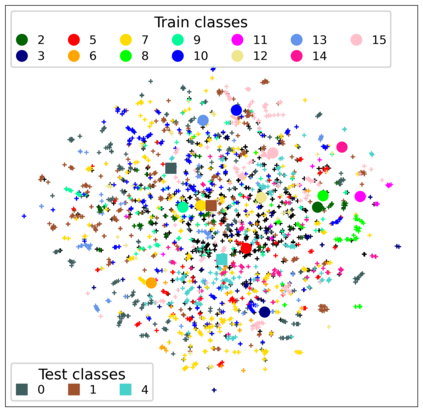

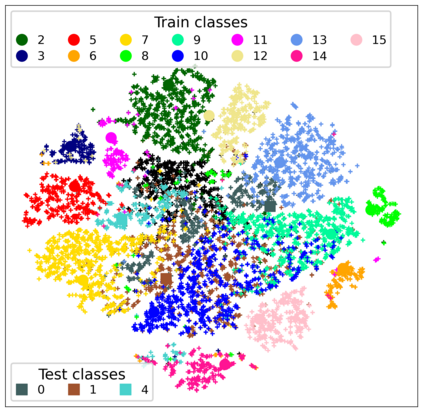

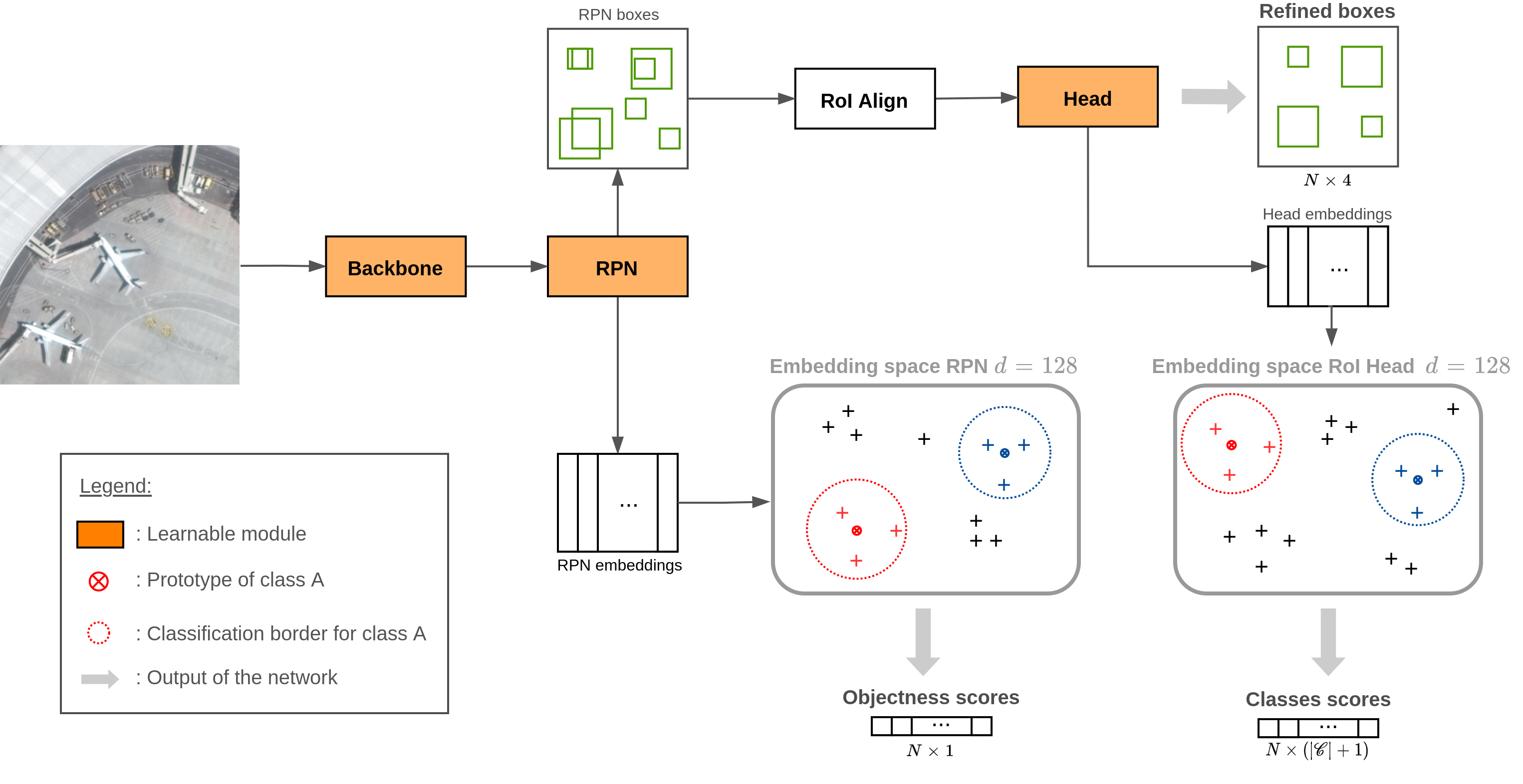

This paper proposes a few-shot object detection method for aerial images based on Faster R-CNN and representation learning. The two classification branches of Faster R-CNN are replaced by prototypical networks for online adaptation to new classes. These networks produce embedding vectors for each generated box, which are then compared with class prototypes. The distance between an embedding and a prototype determines the corresponding classification score. The resulting networks are trained in an episodic manner: a new detection task is randomly sampled at each epoch, consisting of detecting only a subset of the classes annotated in the dataset. This training strategy encourages the network to adapt to new classes as it would at test time. In addition, several ideas are explored to improve the proposed method, such as a hard negative example mining strategy and self-supervised clustering for background objects. The performance of our method is assessed on DOTA, a large-scale remote sensing image dataset. The experiments provide a broader understanding of the capabilities of representation learning and, in particular, highlight some intrinsic weaknesses of this approach for few-shot object detection. Finally, some suggestions and perspectives are formulated based on these insights.
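The classification step and the episodic sampling described above can be illustrated with a minimal sketch. It assumes the standard prototypical-network formulation, which the abstract does not spell out: a class prototype is the mean of that class's support embeddings, and a box's scores are a softmax over negative squared Euclidean distances to the prototypes. The function names (`prototype`, `classify_box`, `sample_episode`), the 2-D embeddings and the class list are hypothetical and for illustration only; the sketch omits the backbone, box regression, background handling and the hard negative mining mentioned in the abstract.

```python
import math
import random
from typing import Dict, List, Sequence

Vector = List[float]


def prototype(support: Sequence[Vector]) -> Vector:
    """Assumed prototype: element-wise mean of the support embeddings of one class."""
    dim = len(support[0])
    return [sum(v[i] for v in support) / len(support) for i in range(dim)]


def squared_euclidean(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance (assumed metric) between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify_box(embedding: Vector, prototypes: Dict[str, Vector]) -> Dict[str, float]:
    """Score one box embedding: softmax over negative squared distances to each prototype.

    The closer a prototype is to the embedding, the higher that class's score.
    """
    logits = {c: -squared_euclidean(embedding, p) for c, p in prototypes.items()}
    m = max(logits.values())  # subtract the max logit for numerical stability
    exps = {c: math.exp(l - m) for c, l in logits.items()}
    total = sum(exps.values())
    return {c: e / total for c, e in exps.items()}


def sample_episode(all_classes: Sequence[str], n_way: int, rng: random.Random) -> List[str]:
    """Draw the subset of annotated classes the detector must handle in this episode."""
    return rng.sample(list(all_classes), n_way)


if __name__ == "__main__":
    rng = random.Random(0)
    # Illustrative subset of DOTA class names.
    classes = ["plane", "ship", "storage-tank", "harbor", "bridge"]
    episode = sample_episode(classes, n_way=2, rng=rng)

    # Hypothetical 2-D support embeddings for the two sampled classes.
    support = {
        episode[0]: [[0.0, 0.0], [0.2, 0.0]],
        episode[1]: [[1.0, 1.0], [1.2, 0.8]],
    }
    protos = {c: prototype(v) for c, v in support.items()}

    # A box embedding lying near the first prototype gets the highest score.
    scores = classify_box([0.1, 0.1], protos)
    print(max(scores, key=scores.get) == episode[0])  # True
```

Because the prototypes are recomputed from whatever support examples are available, new classes can be added at test time without retraining a fixed-size classifier layer; sampling a different class subset each episode exposes the network to that situation during training.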

翻译:本文根据更快的 R-CNN 和在空中图像中进行物体探测的演示性学习,提出了几分方法。 更快的 R- CNN 的两个分类分支被用于在线适应新类别的原型网络所取代。 这些网络为每个生成的框生成嵌入矢量,然后与分类原型进行比较。 嵌入和原型之间的距离决定了相应的分类分数。 由此形成的网络以偶发方式培训。 在每个小区随机抽样进行新的探测任务, 包括只发现数据集中附加说明的分类的子组。 这一培训战略鼓励网络在测试时适应新分类。 此外, 探索了几个想法来改进拟议方法, 如硬性的负面采掘策略和背景物体的自我监督组合。 我们的方法的性能在DOTA上评估, 大型遥感图像数据集。 所进行的实验为代表学习能力提供了更广泛的理解。 它特别突出了少数点对象探测任务的一些内在弱点。 最后, 根据这些洞察结果, 提出了一些建议和观点。