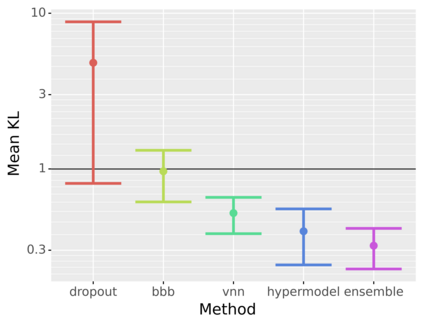

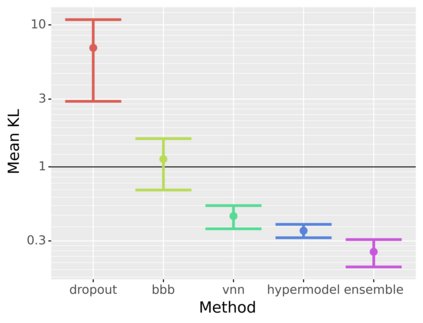

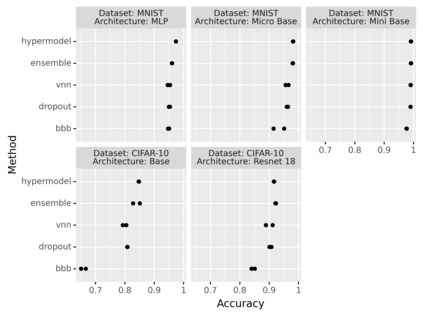

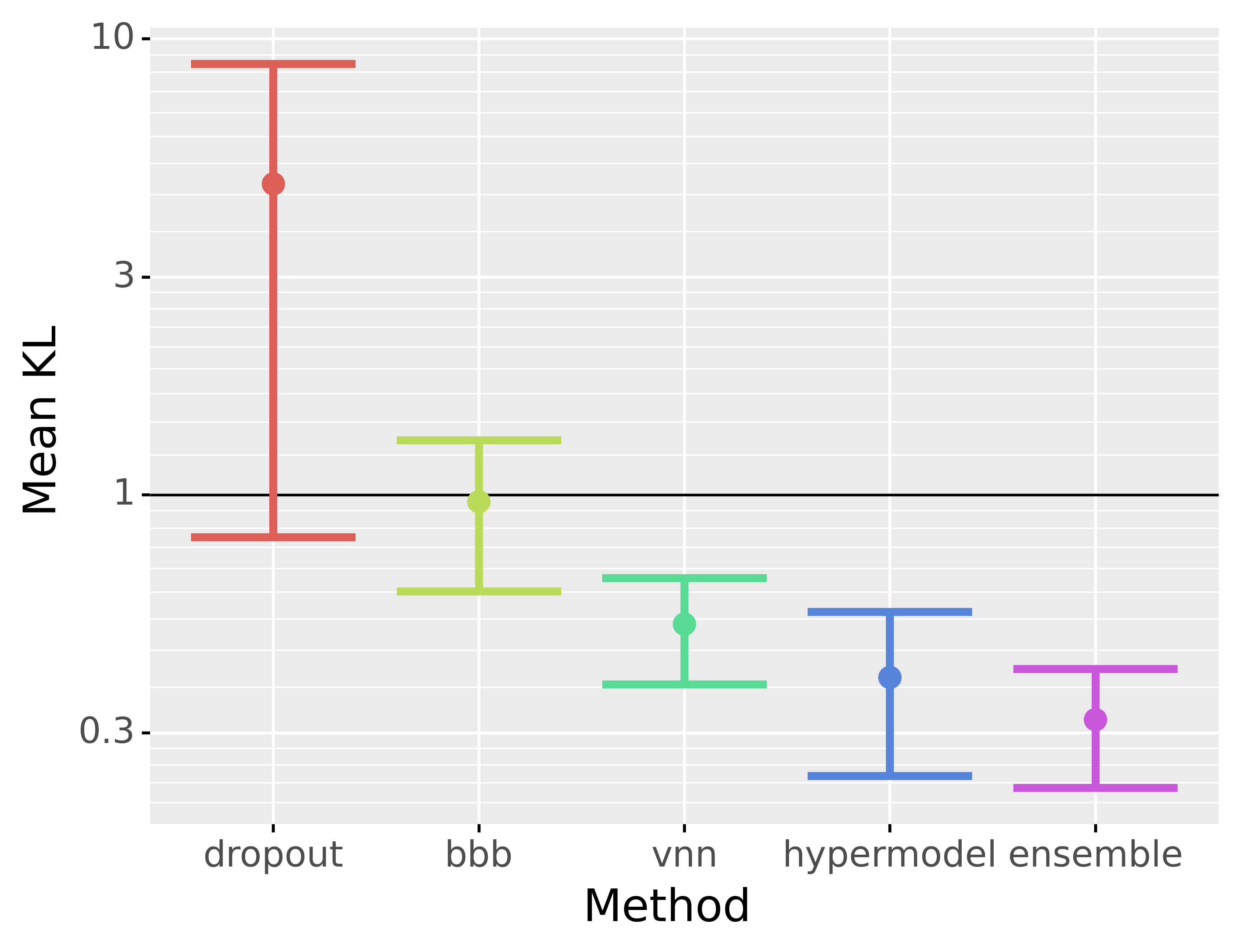

Bayesian Neural Networks (BNNs) estimate the uncertainty of a neural network by placing a distribution over its weights and sampling different models for each input. In this paper, we propose Variational Neural Networks (VNNs), a method for uncertainty estimation in neural networks. Instead of placing a distribution over weights, a VNN generates the parameters of each layer's output distribution by transforming the layer's inputs with learnable sub-layers. In experiments that evaluate uncertainty quality, we show that VNNs achieve better uncertainty quality than the Monte Carlo Dropout and Bayes by Backprop methods.
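
The layer-level idea above can be sketched in code. This is a minimal, hypothetical illustration, not the authors' implementation. It assumes a Gaussian output distribution, one affine sub-layer each for the mean and the standard deviation, and a softplus to keep the standard deviation positive. The names `VariationalLayer`, `affine`, `softplus`, and `predict_with_uncertainty` are invented for this sketch, and training of the sub-layer weights is omitted. The weights are plain point estimates, unlike a BNN. Randomness enters only when sampling the layer's output, and uncertainty is read off the spread of several sampled outputs.

```python
import math
import random


def affine(x, weights, bias):
    """Learnable affine sub-layer (hypothetical): returns W @ x + b for list-based vectors."""
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weights, bias)]


def softplus(z):
    """Numerically stable softplus, log(1 + exp(z)); always > 0."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


class VariationalLayer:
    """Hypothetical VNN-style layer (illustrative only, not the paper's code).

    Weights are ordinary point estimates. The randomness comes from a Gaussian
    over the layer's output, whose mean and standard deviation are produced by
    two learnable sub-layers applied to the input (an assumed design).
    """

    def __init__(self, in_dim, out_dim, rng):
        def init_weights():
            return [[rng.gauss(0.0, 0.5) for _ in range(in_dim)] for _ in range(out_dim)]

        self.rng = rng
        self.w_mean, self.b_mean = init_weights(), [0.0] * out_dim
        self.w_std, self.b_std = init_weights(), [0.0] * out_dim

    def distribution(self, x):
        """Return (mean, std) of the output distribution for input x."""
        mean = affine(x, self.w_mean, self.b_mean)
        std = [softplus(z) for z in affine(x, self.w_std, self.b_std)]
        return mean, std

    def sample(self, x):
        """Draw one output as mean + std * eps, with eps ~ N(0, 1)."""
        mean, std = self.distribution(x)
        return [m + s * self.rng.gauss(0.0, 1.0) for m, s in zip(mean, std)]


def predict_with_uncertainty(layer, x, num_samples=100):
    """Monte Carlo estimate: average sampled outputs and report their variance."""
    samples = [layer.sample(x) for _ in range(num_samples)]
    dims = range(len(samples[0]))
    means = [sum(s[i] for s in samples) / num_samples for i in dims]
    variances = [sum((s[i] - means[i]) ** 2 for s in samples) / num_samples for i in dims]
    return means, variances


if __name__ == "__main__":
    layer = VariationalLayer(in_dim=3, out_dim=2, rng=random.Random(0))
    mean, var = predict_with_uncertainty(layer, [0.5, -1.0, 2.0], num_samples=200)
    print("predictive mean:", mean)
    print("predictive variance:", var)
```

In a full network, several such layers would be stacked and each forward pass would draw fresh samples. The softplus is one common choice for enforcing a positive standard deviation. Other parameterizations, such as predicting a log-variance, would work equally well for this sketch.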