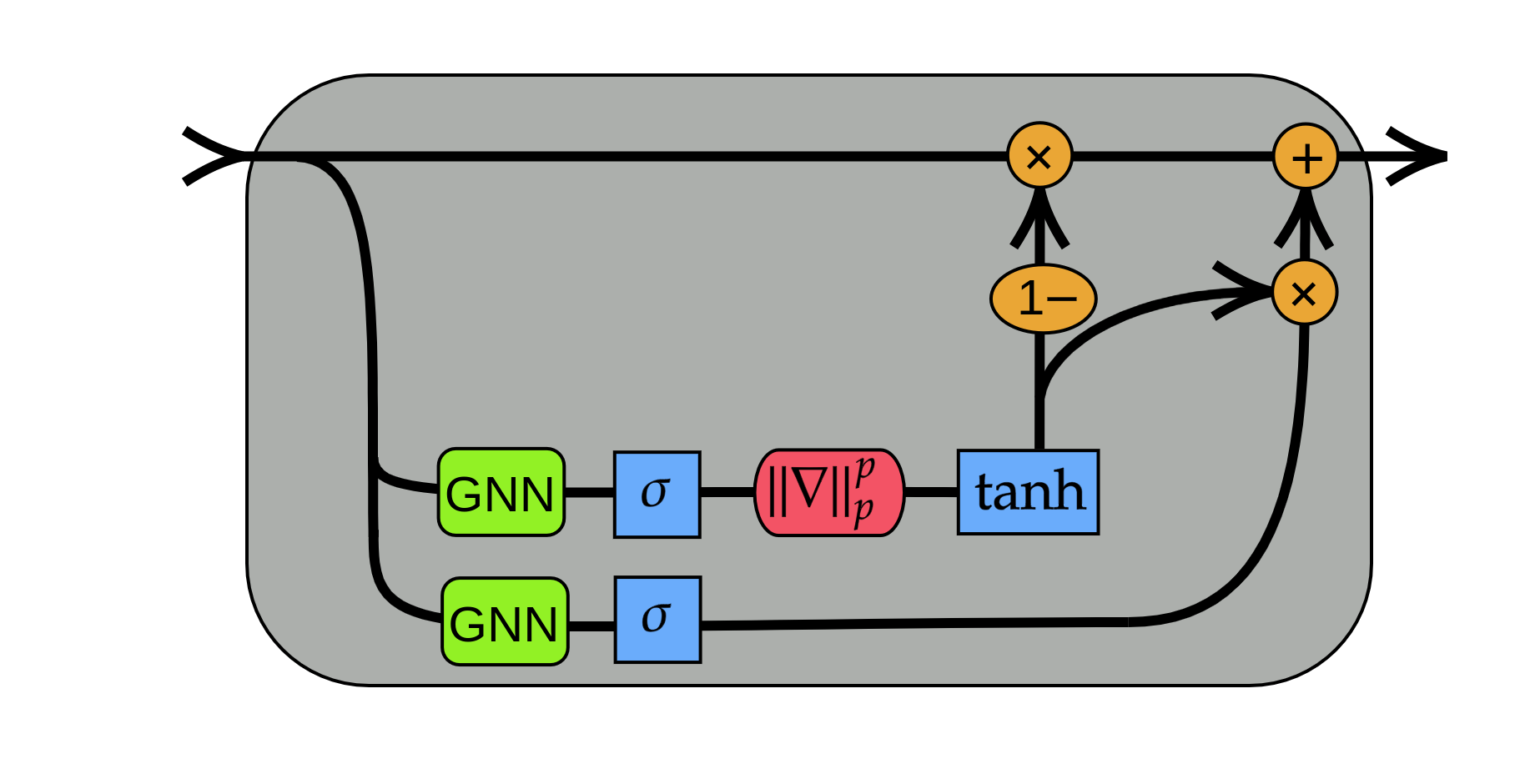

We present Gradient Gating (G$^2$), a novel framework for improving the performance of Graph Neural Networks (GNNs). Our framework is based on gating the output of GNN layers with a mechanism for multi-rate flow of message passing information across nodes of the underlying graph. Local gradients are harnessed to further modulate message passing updates. Our framework flexibly allows one to use any basic GNN layer as a wrapper around which the multi-rate gradient gating mechanism is built. We rigorously prove that G$^2$ alleviates the oversmoothing problem and allows the design of deep GNNs. Empirical results are presented to demonstrate that the proposed framework achieves state-of-the-art performance on a variety of graph learning tasks, including on large-scale heterophilic graphs.
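The abstract describes the mechanism only in words, so the following is a minimal NumPy sketch of one way a gradient-gated layer could look, not the authors' implementation. Several pieces are assumptions made for illustration: the stand-in layer `mean_aggregate`, the `tanh` nonlinearities, the exponent `p`, and the specific gate form (a `tanh` of summed absolute feature differences across neighbors). The idea it tries to capture is that each node and channel gets its own update rate, and that rate is driven by local graph gradients.

```python
import numpy as np

def mean_aggregate(x, adj, weight):
    """Hypothetical stand-in for 'any basic GNN layer': mean over neighbors, then a linear map."""
    deg = np.maximum(adj.sum(axis=1, keepdims=True), 1.0)  # avoid division by zero
    return (adj @ x / deg) @ weight

def gradient_gate(tau_hat, adj, p=2.0):
    """Per-node, per-channel rates from local graph gradients (assumed form).

    rate[i, k] = tanh( sum_{j in N(i)} |tau_hat[j, k] - tau_hat[i, k]|**p )
    """
    # diff[i, j, k] = |tau_hat[j, k] - tau_hat[i, k]|**p, shape (N, N, C)
    diff = np.abs(tau_hat[None, :, :] - tau_hat[:, None, :]) ** p
    # Sum only over edges (i, j) present in the adjacency matrix.
    return np.tanh(np.einsum("ij,ijk->ik", adj, diff))

def gated_layer(x, adj, w_update, w_gate, p=2.0):
    """One gated update: interpolate between the old features and a candidate update."""
    candidate = np.tanh(mean_aggregate(x, adj, w_update))  # wrapped GNN layer output
    tau_hat = np.tanh(mean_aggregate(x, adj, w_gate))      # auxiliary features for the gate
    tau = gradient_gate(tau_hat, adj, p)                   # multi-rate gate in [0, 1)
    return (1.0 - tau) * x + tau * candidate

# Small usage example on a 4-node path graph with 3 feature channels.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
rng = np.random.default_rng(0)
w_update = rng.normal(size=(3, 3))
w_gate = rng.normal(size=(3, 3))
x = rng.normal(size=(4, 3))
out = gated_layer(x, adj, w_update, w_gate)
```

In this sketch the gate is zero wherever a node's auxiliary features already match those of its neighbors, so such nodes keep their current features instead of being averaged further. This is one plausible reading of how gating on local gradients could counteract oversmoothing. The dense `(N, N, C)` difference tensor is chosen for readability and would need a sparse, edge-based formulation on large graphs.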
