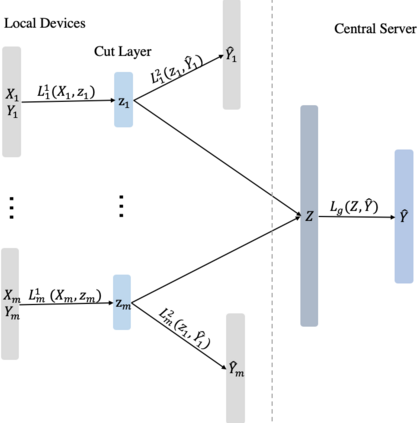

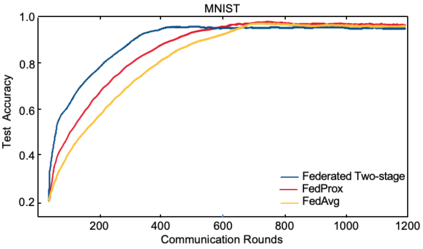

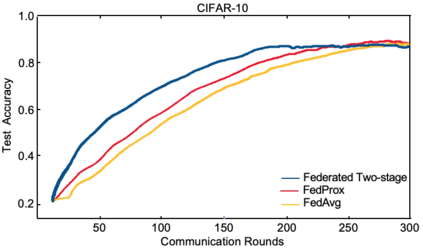

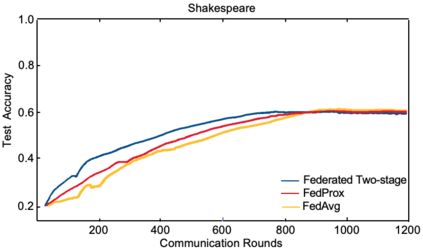

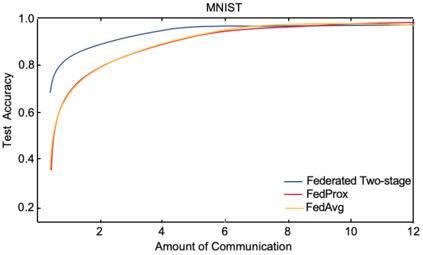

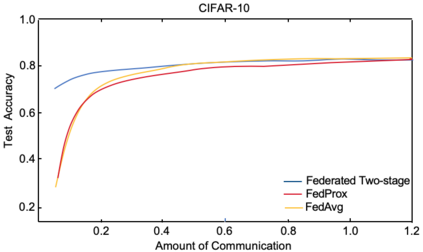

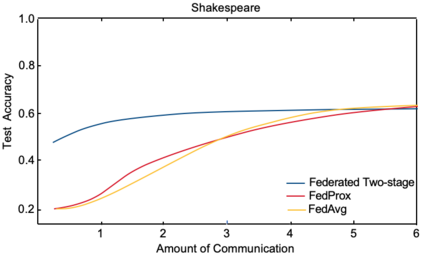

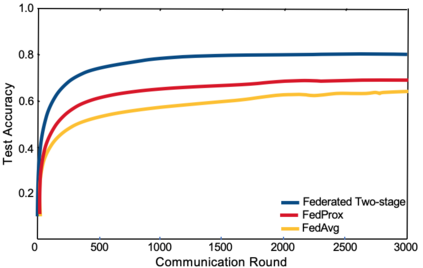

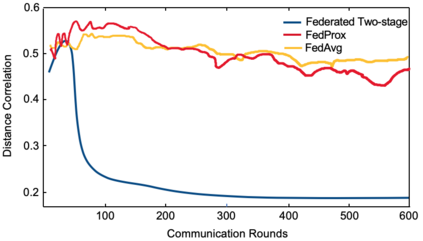

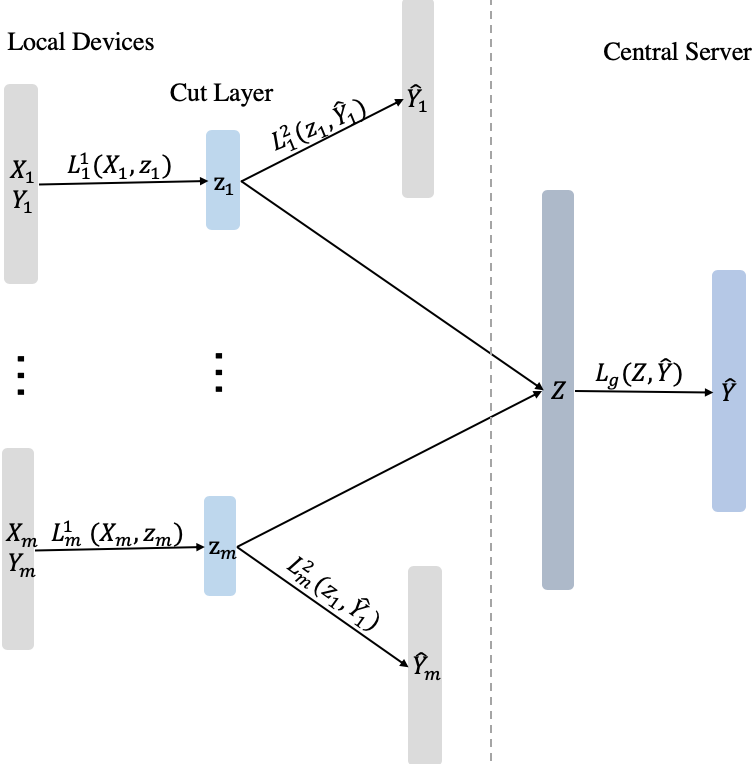

Federated learning is a distributed machine learning mechanism in which local devices collaboratively train a shared global model under the orchestration of a central server while keeping all private data decentralized. Because such a system transmits model parameters and their updates instead of raw data, the communication bottleneck has become a key challenge. In addition, recent larger and deeper machine learning models make deployment in a federated environment even more difficult. In this paper, we design a federated two-stage learning framework that augments prototypical federated learning with a cut layer on devices and applies sign-based stochastic gradient descent (SGD) with the majority vote method to model updates. The cut layer on devices learns informative, low-dimensional representations of raw data locally, which helps reduce the number of global model parameters and prevents data leakage. Sign-based SGD with majority vote for model updates further alleviates communication limitations. Empirically, we show that our system is an efficient, privacy-preserving federated learning scheme that is suitable for general application scenarios.
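The sign-based update with majority vote can be illustrated with a minimal, pure-Python sketch in the style of signSGD with majority vote. This is an illustration under stated assumptions, not the authors' implementation: the function names `compress`, `majority_vote`, and `apply_update` are hypothetical, the per-device gradients are given directly instead of being computed by a model with a cut layer, and an exactly-zero coordinate is assumed to map to a sign of 0. Each device uploads only the sign of every gradient coordinate, and the server takes the element-wise sign of the sum of those votes and broadcasts it back as the update direction.

```python
from typing import List


def sign(x: float) -> int:
    """Return +1, -1, or 0 according to the sign of x."""
    return (x > 0) - (x < 0)


def compress(gradient: List[float]) -> List[int]:
    """Device side (hypothetical helper): keep only the sign of each coordinate.

    Assumption: an exact zero is sent as 0 rather than being forced to +1 or -1.
    """
    return [sign(g) for g in gradient]


def majority_vote(sign_vectors: List[List[int]]) -> List[int]:
    """Server side (hypothetical helper): element-wise majority vote.

    The majority of {+1, -1} votes equals the sign of their sum; a tie yields 0.
    """
    return [sign(sum(column)) for column in zip(*sign_vectors)]


def apply_update(params: List[float], vote: List[int], lr: float) -> List[float]:
    """Every participant takes a signed SGD step with learning rate lr."""
    return [p - lr * v for p, v in zip(params, vote)]


if __name__ == "__main__":
    # Illustrative gradients from three devices (made-up numbers, not results).
    device_grads = [
        [0.5, -0.2, 0.1],
        [0.3, 0.4, -0.7],
        [-0.1, -0.6, -0.2],
    ]
    votes = [compress(g) for g in device_grads]
    direction = majority_vote(votes)
    print(direction)  # → [1, -1, -1]
    print(apply_update([1.0, 1.0, 1.0], direction, lr=0.1))
```

With an even number of devices a coordinate can tie (its vote sum is 0), in which case this sketch leaves that parameter unchanged for the round. Because only signs travel between the devices and the server, each coordinate costs one bit (two if zeros must be encoded) instead of a 32-bit float, which is where the communication savings come from.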