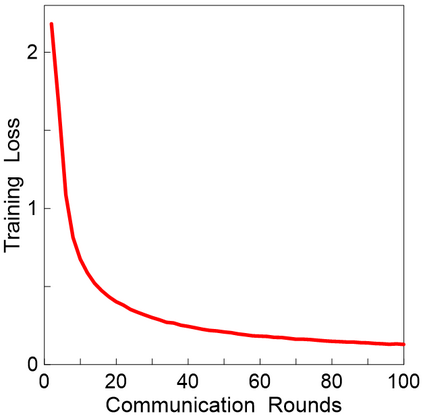

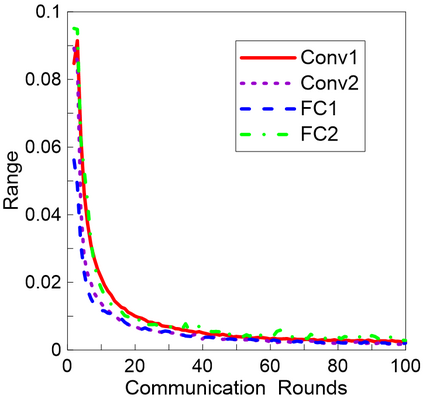

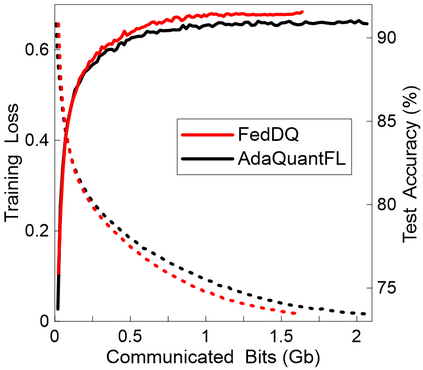

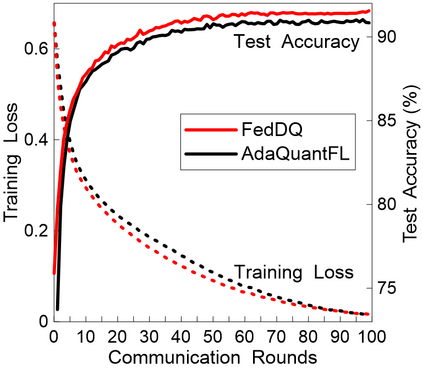

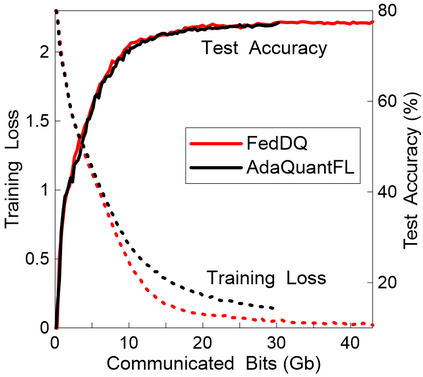

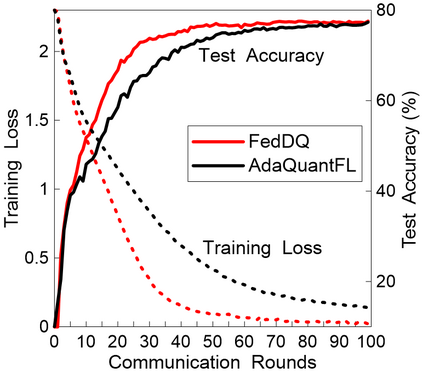

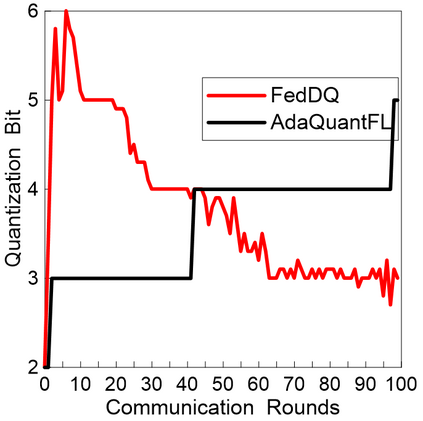

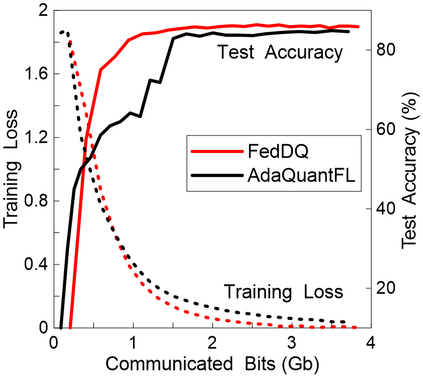

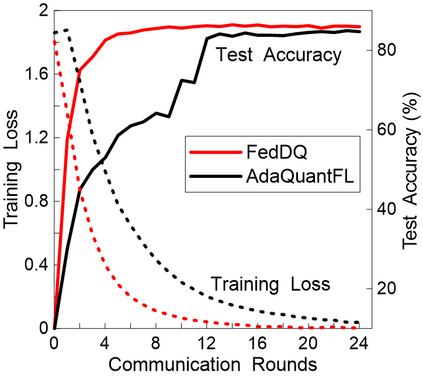

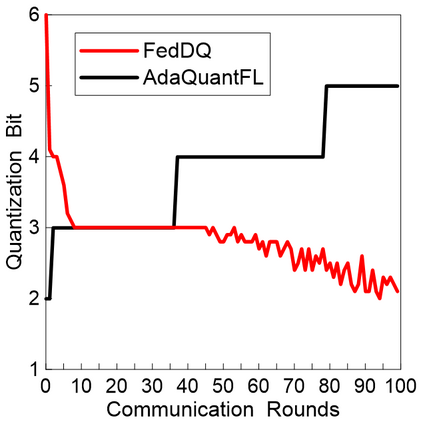

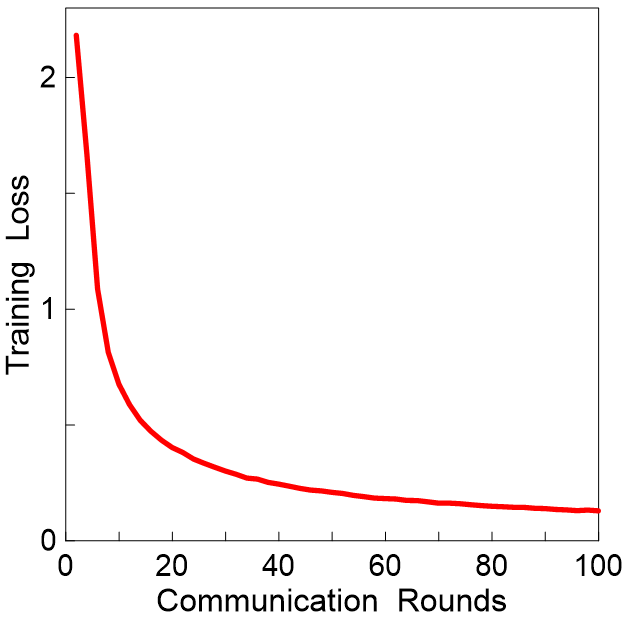

Federated learning (FL) is an emerging privacy-preserving distributed learning scheme. Large model sizes and frequent model aggregation create a serious communication bottleneck for FL. Many techniques, such as model compression and quantization, have been proposed to reduce the communication volume. Existing adaptive quantization schemes use ascending-trend quantization, in which the quantization level increases as training progresses. In this paper, we investigate adaptive quantization by maximizing the convergence rate. We show that the optimal quantization level is directly related to the range of the model updates. Because the model is expected to converge as training proceeds, the range of the model updates shrinks, which indicates that the quantization level should decrease over the training stages. Based on this theoretical analysis, we propose a descending quantization scheme. Experimental results show that, compared with current ascending quantization schemes, the proposed descending quantization scheme not only reduces the communication volume further but also helps FL converge faster.
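To make the idea concrete, the following is a minimal sketch of range-driven quantization, not the paper's actual algorithm. The abstract states only that the optimal level is directly related to the range of the model updates, so the proportional rule in `level_for_range`, its `scale` constant, the function names, and the synthetic halving of the update spread are all assumptions made for illustration.

```python
import math
import random


def stochastic_quantize(update, levels, rng):
    """Unbiased uniform quantization of a list of floats onto `levels` intervals."""
    lo, hi = min(update), max(update)
    if hi == lo:
        return list(update)  # nothing to quantize: all values are identical
    step = (hi - lo) / levels
    out = []
    for v in update:
        pos = (v - lo) / step  # position measured in quantization steps
        base = math.floor(pos)
        # Round up with probability equal to the fractional part, so E[q] = v.
        idx = base + (1 if rng.random() < pos - base else 0)
        out.append(lo + min(idx, levels) * step)
    return out


def level_for_range(update_range, scale=64.0, min_levels=1, max_levels=255):
    """Hypothetical rule (assumption): level proportional to the update range."""
    return max(min_levels, min(max_levels, math.ceil(scale * update_range)))


rng = random.Random(0)
schedule = []
for rnd in range(5):
    # Synthetic stand-in for convergence: the update spread halves each round.
    spread = 0.5 ** rnd
    update = [rng.uniform(-spread, spread) for _ in range(1000)]
    levels = level_for_range(max(update) - min(update))
    bits = math.ceil(math.log2(levels + 1))  # bits needed per quantized value
    quantized = stochastic_quantize(update, levels, rng)
    schedule.append((rnd, levels, bits))

for rnd, levels, bits in schedule:
    print(f"round {rnd}: levels={levels}, bits per value={bits}")
```

Under these assumptions the number of levels, and therefore the bits sent per parameter, falls as the update range shrinks, which is the descending trend the abstract argues for. An ascending scheme would instead raise `levels` with the round index regardless of the range.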
