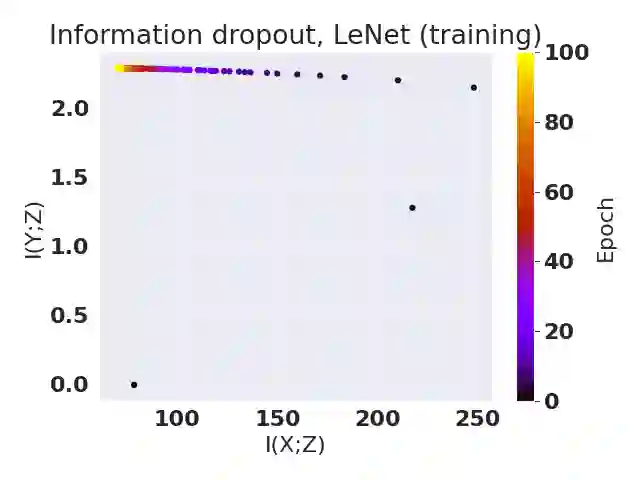

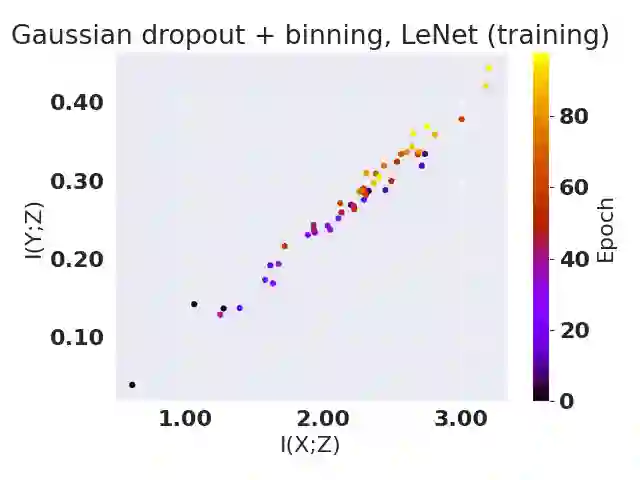

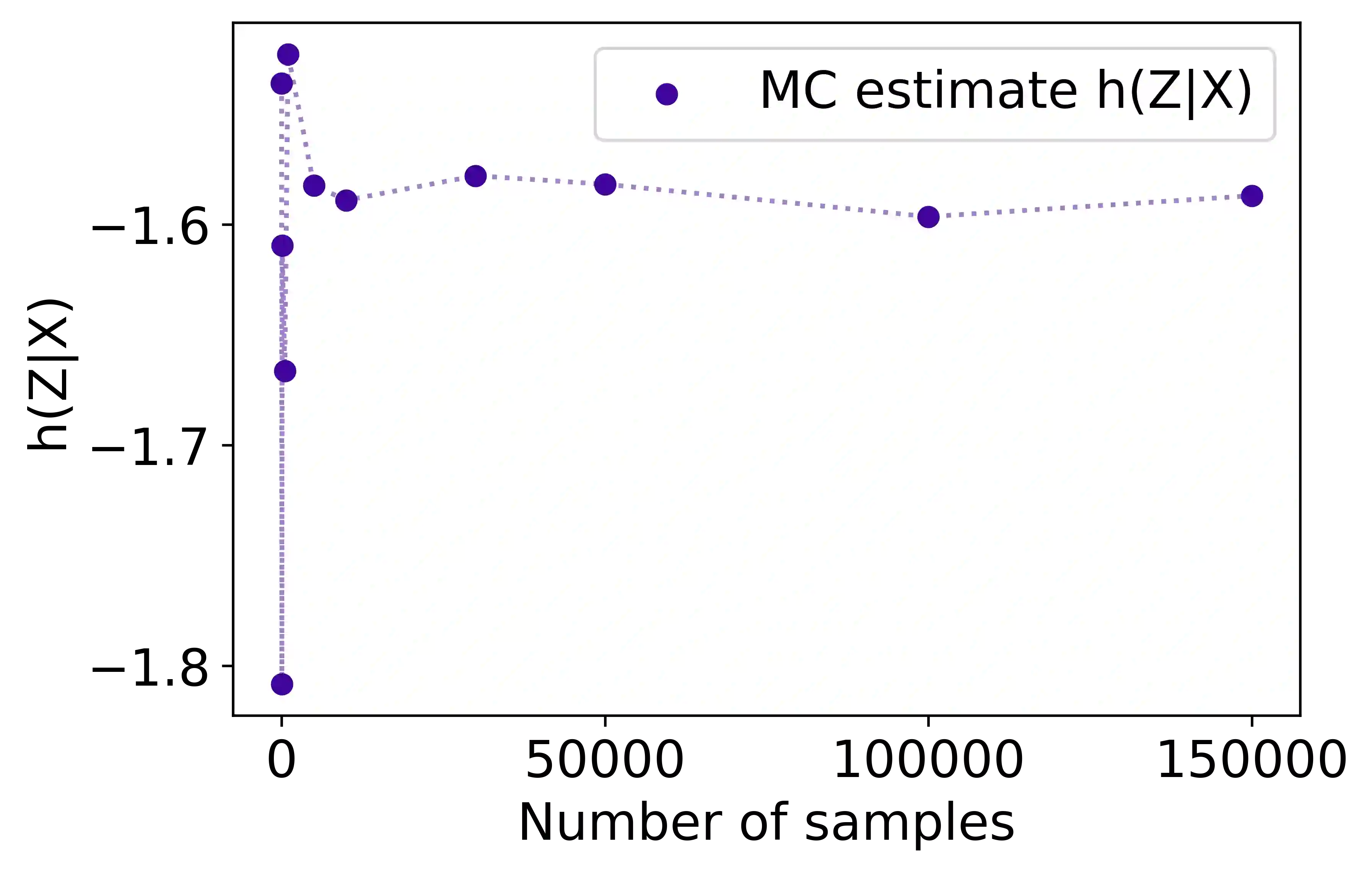

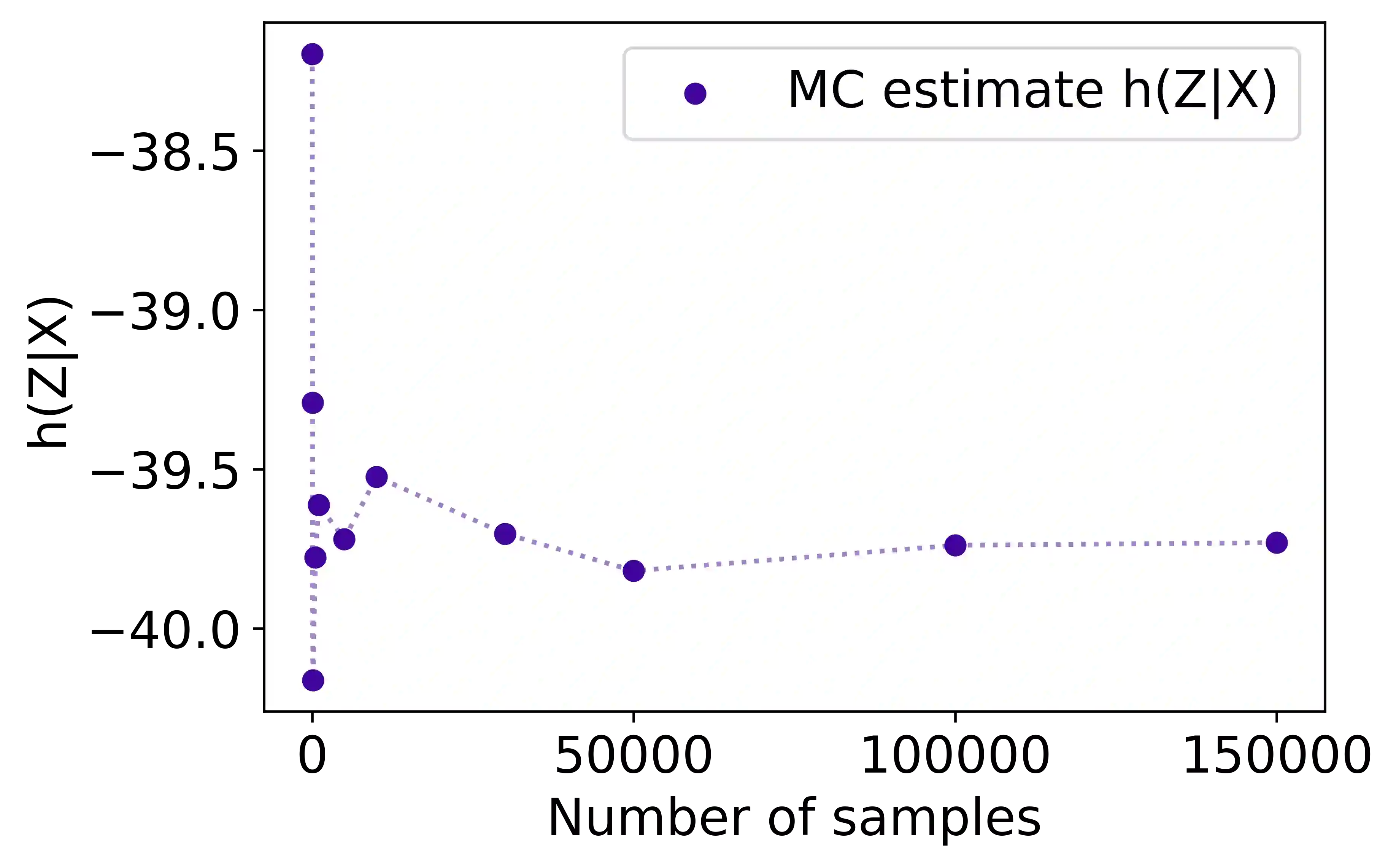

The information-theoretic framework promises to explain the predictive power of neural networks. In particular, the information plane analysis, which measures the mutual information (MI) between input and representation as well as between representation and output, should give rich insights into the training process. This approach, however, has been shown to depend strongly on the choice of MI estimator. The problem is amplified for deterministic networks if the MI between input and representation is infinite: the estimated values are then determined by the chosen estimation approach and do not adequately represent the training process from an information-theoretic perspective. In this work, we show that dropout with continuously distributed noise ensures that the MI is finite. We demonstrate in a range of experiments that this enables a meaningful information plane analysis for a class of dropout neural networks that is widely used in practice.
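The finiteness argument can be made concrete with a minimal sketch. It relies on simplifying assumptions that do not come from the paper: a scalar Gaussian input X ~ N(0, σ_X²) and additive Gaussian noise T = X + N, with N ~ N(0, σ_N²). In that case I(X; T) = ½ log₂(1 + σ_X²/σ_N²) bits in closed form. The hypothetical helper `gaussian_channel_mi_bits` shows that the MI stays finite for any positive noise variance but grows without bound as the noise vanishes, which mirrors the deterministic case. Dropout noise in practice is usually multiplicative (Gaussian dropout, for example), and that has no equally simple closed form. The additive channel serves here only as an analogy.

```python
import math


def gaussian_channel_mi_bits(signal_var: float, noise_var: float) -> float:
    """Mutual information I(X; X + N) in bits.

    Hypothetical helper for a simplified analogy (assumption, not the paper's
    dropout model): X ~ N(0, signal_var) and N ~ N(0, noise_var) are
    independent scalars.
    """
    if signal_var <= 0:
        raise ValueError("signal_var must be positive")
    if noise_var < 0:
        raise ValueError("noise_var must be non-negative")
    if noise_var == 0:
        # Deterministic identity map of a continuous input: the MI is infinite.
        return math.inf
    # Closed-form MI of the scalar additive Gaussian channel.
    return 0.5 * math.log2(1.0 + signal_var / noise_var)


if __name__ == "__main__":
    # Shrinking the noise drives the MI upward without bound.
    for noise_var in (1.0, 1e-2, 1e-4, 1e-8, 0.0):
        mi = gaussian_channel_mi_bits(1.0, noise_var)
        print(f"noise_var={noise_var:g}: I(X;T) = {mi:.3f} bits")
```

When run, the loop prints MI values that rise as `noise_var` shrinks and reach `inf` at zero noise. The values depend only on the ratio `signal_var / noise_var`.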
