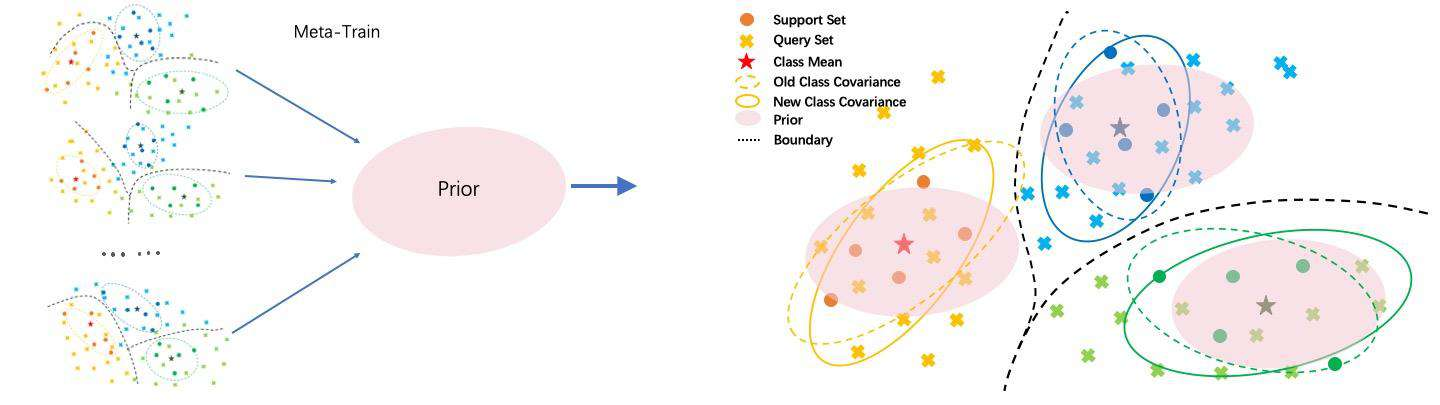

Current state-of-the-art few-shot learners focus on developing effective training procedures for feature representations and then apply simple classifiers, such as nearest centroid. In this paper, we take an orthogonal approach that is agnostic to the features used and focus exclusively on meta-learning the actual classifier layer. Specifically, we introduce MetaQDA, a Bayesian meta-learning generalization of classic quadratic discriminant analysis (QDA). This setup has several benefits of interest to practitioners. Meta-learning is fast and memory-efficient, with no need to fine-tune features. The method is agnostic to the off-the-shelf features chosen and will therefore continue to benefit from advances in feature representations. Empirically, it yields robust performance in cross-domain few-shot learning and, crucially for real-world applications, better uncertainty calibration in predictions.
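To make the two baseline classifiers named above concrete, the following is a minimal sketch of nearest-centroid and classic QDA classification on fixed, pre-extracted features. It is not MetaQDA: the Bayesian meta-learned generalization is not reproduced here. The function names, the `shrinkage` parameter, and the choice of shrinking each class covariance toward the identity are illustrative assumptions, added only so that the covariance stays invertible when there are fewer shots than feature dimensions.

```python
import numpy as np


def fit_class_gaussians(features, labels, shrinkage=0.5):
    """Estimate a mean and a shrunk covariance per class from a support set.

    `shrinkage` is a hand-set, hypothetical regularizer (not from the paper).
    """
    dim = features.shape[1]
    params = {}
    for c in np.unique(labels):
        x = features[labels == c]
        mean = x.mean(axis=0)
        centered = x - mean
        # Maximum-likelihood covariance; rank-deficient when shots < dim.
        cov = centered.T @ centered / len(x)
        # Shrink toward the identity so the matrix is always invertible.
        cov = (1.0 - shrinkage) * cov + shrinkage * np.eye(dim)
        params[c] = (mean, cov)
    return params


def qda_predict(params, queries):
    """Classic QDA rule with equal class priors: maximize Gaussian log-density."""
    classes = list(params)
    scores = []
    for c in classes:
        mean, cov = params[c]
        diff = queries - mean
        _, logdet = np.linalg.slogdet(cov)
        # Squared Mahalanobis distance of each query to the class mean.
        maha = np.einsum("ij,ij->i", diff @ np.linalg.inv(cov), diff)
        scores.append(-0.5 * (maha + logdet))
    best = np.argmax(np.stack(scores, axis=1), axis=1)
    return np.array(classes)[best]


def nearest_centroid_predict(params, queries):
    """Nearest-centroid rule: assign each query to the closest class mean."""
    classes = list(params)
    dists = np.stack(
        [np.linalg.norm(queries - params[c][0], axis=1) for c in classes],
        axis=1,
    )
    return np.array(classes)[np.argmin(dists, axis=1)]
```

Both functions consume the same per-class statistics, so they can be swapped on top of any frozen feature extractor. The difference is that QDA also uses a per-class covariance, which is the quantity that is hard to estimate reliably from only a few shots.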
