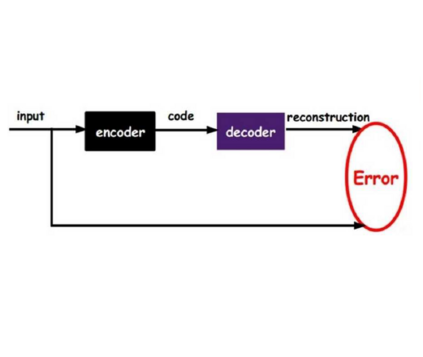

Self-attention-based transformer models have dominated many computer vision tasks in the past few years. Their strong performance depends heavily on very large labeled image datasets. To reduce this reliance, reconstruction-based masked autoencoders, which learn high-quality transferable representations from unlabeled images, are gaining popularity. For the same purpose, recent weakly supervised image pretraining methods explore language supervision from the text captions that accompany images. In this work, we propose masked image pretraining on language-assisted representation, dubbed MILAN. Instead of predicting raw pixels or low-level features, our pretraining objective is to reconstruct image features that carry substantial semantic signals obtained through caption supervision. Moreover, to accommodate this reconstruction target, we propose a more effective prompting decoder architecture and a semantic-aware mask sampling mechanism, which further improve the transfer performance of the pretrained model. Experimental results demonstrate that MILAN delivers higher accuracy than previous works. When the masked autoencoder is pretrained and finetuned on the ImageNet-1K dataset with an input resolution of 224×224, MILAN achieves a top-1 accuracy of 85.4% with ViT-Base, surpassing the previous state of the art by 1%. On the downstream semantic segmentation task, MILAN achieves 52.7 mIoU using ViT-Base on the ADE20K dataset, outperforming previous masked pretraining results by 4 points.
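
The two ingredients named in the abstract, semantic-aware mask sampling and feature-level reconstruction, can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract gives no algorithmic details, so the sketch assumes that each patch has a positive importance score (here just a hand-written list), that higher-scored patches are more likely to stay visible, and that the loss is a mean squared error between L2-normalized feature vectors. The function names `sample_visible_patches` and `feature_reconstruction_loss` are hypothetical.

```python
import math
import random


def sample_visible_patches(scores, keep_ratio, rng):
    """Pick patches to keep visible; higher-scored patches are more likely kept.

    ASSUMPTION: this weighting scheme is illustrative, not taken from the paper.
    Weighted sampling without replacement via random keys u ** (1 / w)
    (Efraimidis-Spirakis). `scores` must be strictly positive.
    """
    num_keep = max(1, int(len(scores) * keep_ratio))
    keys = [(rng.random() ** (1.0 / s), i) for i, s in enumerate(scores)]
    keys.sort(reverse=True)  # largest keys win
    return sorted(i for _, i in keys[:num_keep])


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def feature_reconstruction_loss(predicted, target):
    """Mean squared error between L2-normalized per-patch feature vectors.

    ASSUMPTION: `target` stands in for features from a caption-supervised
    image encoder; here it is just a list of lists of floats.
    """
    total, count = 0.0, 0
    for p, t in zip(predicted, target):
        p, t = l2_normalize(p), l2_normalize(t)
        total += sum((a - b) ** 2 for a, b in zip(p, t))
        count += len(p)
    return total / count


if __name__ == "__main__":
    rng = random.Random(0)
    # 16 patches: the last four are treated as semantically important.
    scores = [0.1] * 12 + [1.0] * 4
    visible = sample_visible_patches(scores, keep_ratio=0.25, rng=rng)
    print("visible patch indices:", visible)

    target = [[1.0, 2.0, 2.0], [0.0, 3.0, 4.0]]
    predicted = [[0.9, 2.1, 1.8], [0.2, 2.9, 4.1]]
    print("loss:", feature_reconstruction_loss(predicted, target))
```

Normalizing both sides makes the loss depend only on the direction of each feature vector, so a prediction that is a positive multiple of its target incurs no penalty; whether the paper normalizes its targets in this way is not stated in the abstract.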
