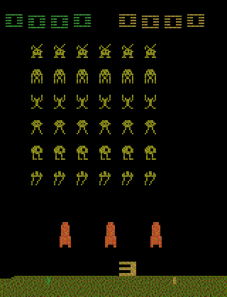

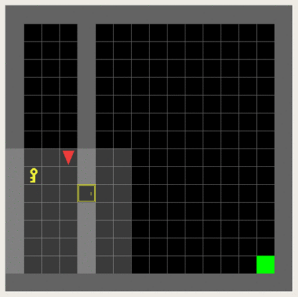

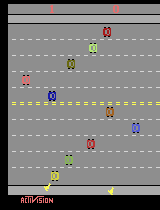

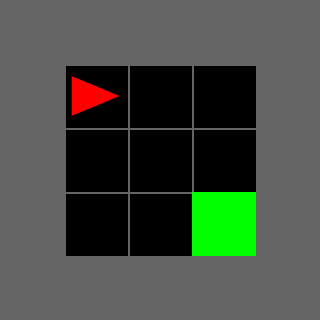

Deep reinforcement learning (RL) works impressively in some environments and fails catastrophically in others. Ideally, RL theory should be able to explain why, i.e., provide bounds that are predictive of practical performance. Unfortunately, current theory does not quite have this ability. We compare standard deep RL algorithms to prior sample complexity bounds by introducing a new dataset, BRIDGE. It consists of 155 MDPs from common deep RL benchmarks, along with their corresponding tabular representations, which enables us to compute instance-dependent bounds exactly. We find that prior bounds do not correlate well with when deep RL succeeds vs. fails, but discover a surprising property that does. When actions with the highest Q-values under the random policy also have the highest Q-values under the optimal policy, deep RL tends to succeed; when they don't, deep RL tends to fail. We generalize this property into a new complexity measure of an MDP that we call the effective horizon, which roughly corresponds to how many steps of lookahead search are needed to identify the next optimal action when leaf nodes are evaluated with random rollouts. Using BRIDGE, we show that bounds based on the effective horizon reflect the empirical performance of PPO and DQN more closely than prior sample complexity bounds across four metrics. We also show that, unlike existing bounds, the effective horizon can predict the effects of using reward shaping or a pre-trained exploration policy.
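
The lookahead intuition above can be illustrated on a small tabular MDP. The following is a simplified sketch under stated assumptions, not the paper's definition or code. It assumes a finite-horizon MDP with deterministic transitions. It starts from the Q-values of the uniformly random policy (depth 1, which corresponds to the property described above) and applies one Bellman optimality backup per extra step of lookahead. It stops once acting greedily at every state and timestep is optimal. The full effective horizon also accounts for how many random rollouts are needed to estimate leaf values, which this sketch ignores. All function names and both toy MDPs are hypothetical.

```python
from typing import Callable, List

# Hypothetical representation (an assumption, not BRIDGE's format):
# next_state[s][a] is the deterministic successor, reward[s][a] the reward,
# and q[t][s][a] a Q-value at timestep t = 0 .. horizon - 1.
Table = List[List[float]]
QFunc = List[Table]


def mean(row: List[float]) -> float:
    return sum(row) / len(row)


def backward_induction(next_state: List[List[int]], reward: Table, horizon: int,
                       aggregate: Callable[[List[float]], float]) -> QFunc:
    """Q-values by backward induction; aggregate=mean gives the uniformly
    random policy, aggregate=max gives the optimal Q*."""
    n_states, n_actions = len(reward), len(reward[0])
    v_next = [0.0] * n_states  # value after the final step is zero
    q: QFunc = [[] for _ in range(horizon)]
    for t in reversed(range(horizon)):
        q[t] = [[reward[s][a] + v_next[next_state[s][a]] for a in range(n_actions)]
                for s in range(n_states)]
        v_next = [aggregate(row) for row in q[t]]
    return q


def bellman_backup(q: QFunc, next_state: List[List[int]], reward: Table) -> QFunc:
    """One Bellman optimality backup: Q'[t](s, a) = r(s, a) + max_a' Q[t+1](s', a')."""
    horizon = len(q)
    return [[[reward[s][a] + (max(q[t + 1][next_state[s][a]]) if t + 1 < horizon else 0.0)
              for a in range(len(reward[s]))]
             for s in range(len(reward))]
            for t in range(horizon)]


def greedy_is_optimal(q: QFunc, q_star: QFunc, tol: float = 1e-9) -> bool:
    """True if every action that is greedy under q is also optimal under q_star
    (checked at all states and timesteps, a simplifying assumption)."""
    for q_t, q_star_t in zip(q, q_star):
        for row, row_star in zip(q_t, q_star_t):
            best, best_star = max(row), max(row_star)
            for val, val_star in zip(row, row_star):
                if val >= best - tol and val_star < best_star - tol:
                    return False
    return True


def min_lookahead_depth(next_state: List[List[int]], reward: Table, horizon: int) -> int:
    """Smallest k such that greedy actions after k - 1 backups of Q^rand are optimal."""
    q_star = backward_induction(next_state, reward, horizon, max)
    q = backward_induction(next_state, reward, horizon, mean)  # depth 1: Q^rand
    for k in range(1, horizon + 1):
        if greedy_is_optimal(q, q_star):
            return k
        q = bellman_backup(q, next_state, reward)
    return horizon  # not reached: after horizon - 1 backups, q equals q_star


# Easy MDP: one state where action 1 always pays 1.
print(min_lookahead_depth([[0, 0]], [[0.0, 1.0]], horizon=3))  # 1

# Trap MDP: in state 0, action 0 pays 0.6 and falls into absorbing state 2;
# action 1 pays 0 but leads to state 1, where action 1 pays 1.
trap_next = [[2, 1], [2, 2], [2, 2]]
trap_reward = [[0.6, 0.0], [0.0, 1.0], [0.0, 0.0]]
print(min_lookahead_depth(trap_next, trap_reward, horizon=2))  # 2
```

In the trap MDP, the random policy's Q-values at the start state are 0.6 for the immediate reward versus 0.5 for the path to state 1. Greedy action selection therefore picks the wrong action, and one extra step of lookahead is needed before the greedy choice matches the optimal one.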
