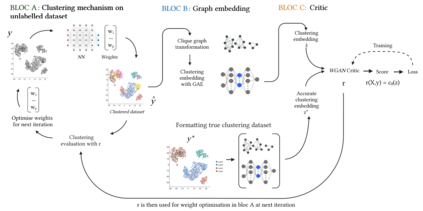

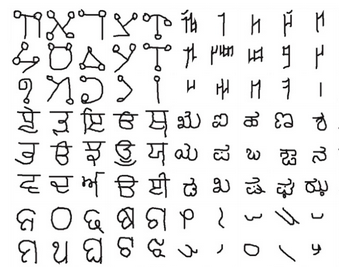

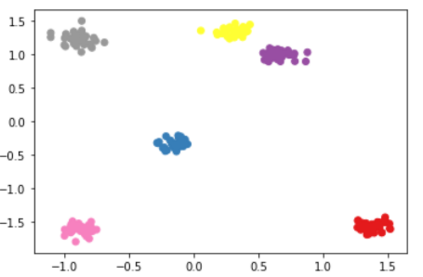

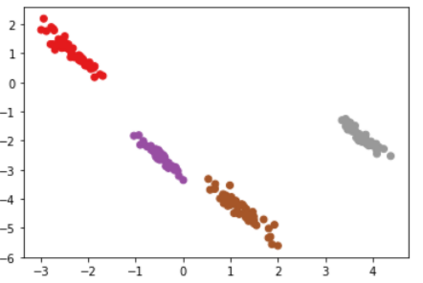

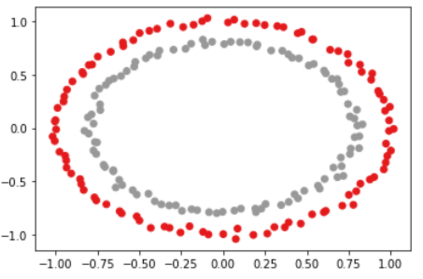

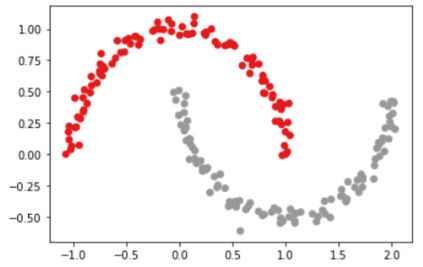

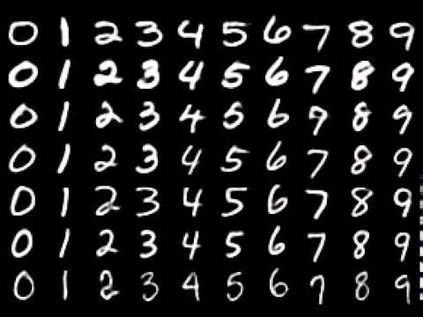

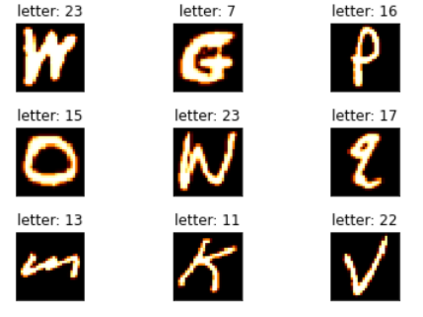

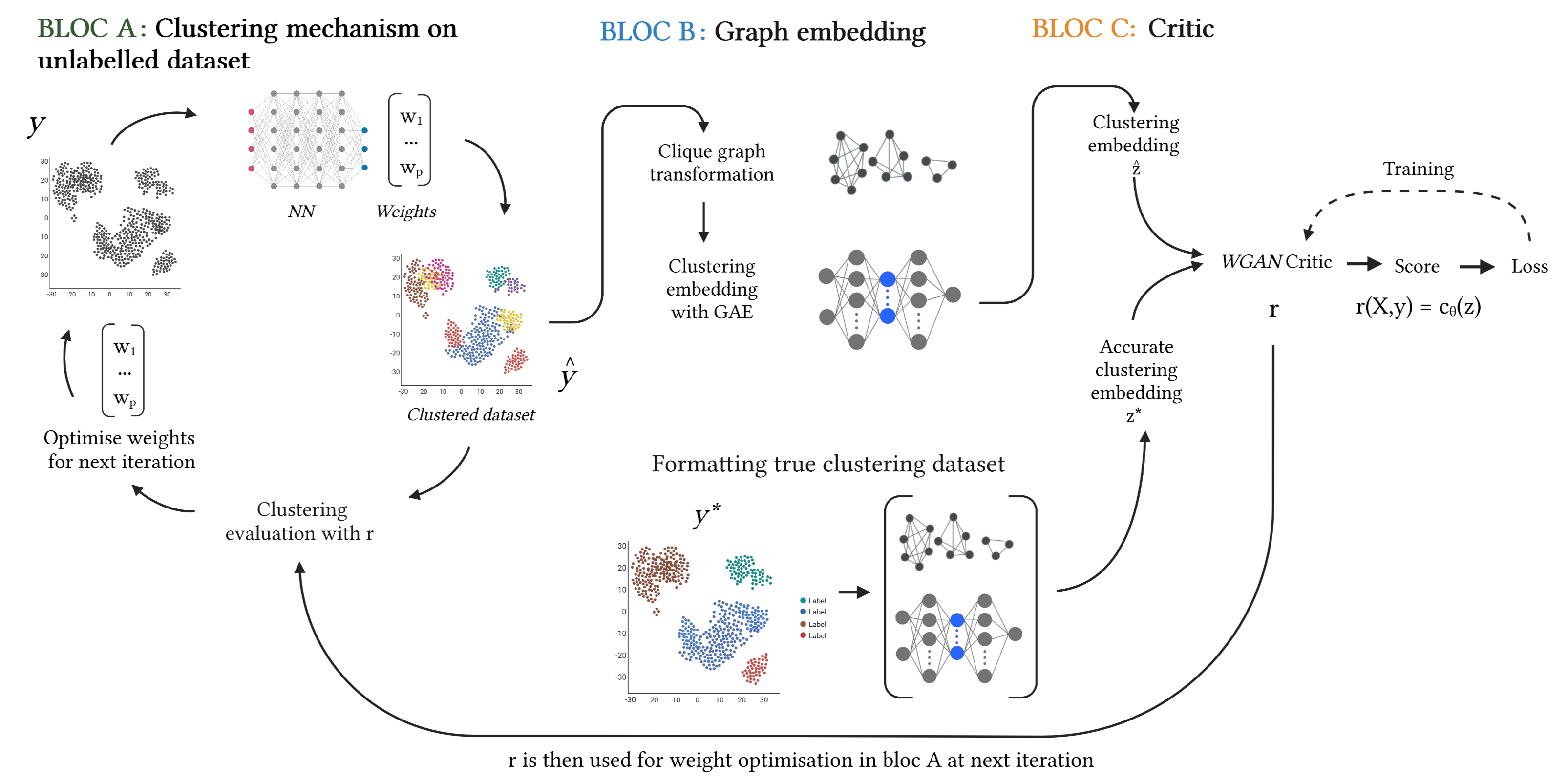

Clustering in high-dimensional spaces is a difficult task: under the curse of dimensionality, the usual distance metrics may no longer be appropriate. Indeed, the choice of metric is crucial and highly dependent on the characteristics of the dataset. However, a single metric could be used to correctly perform clustering on multiple datasets from different domains. We propose to do so by providing a framework for learning a transferable metric. We show that we can learn a metric on a labelled dataset and then apply it to cluster a different dataset, using an embedding space that characterises a desired clustering in the generic sense. We learn and test such metrics on several datasets of varying complexity (synthetic, MNIST, SVHN, Omniglot) and achieve results competitive with the state of the art, while using only a small number of labelled training datasets and shallow networks.
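The learn-on-one-dataset, cluster-another workflow can be illustrated with a minimal sketch. It assumes a much simpler metric than the shallow networks described above: a diagonal (per-feature) weighting computed in closed form from class labels, in the spirit of a Fisher score. The toy datasets, the helper names (`make_dataset`, `learn_diagonal_metric`, `embed`, `kmeans`, `purity`) and all parameter values are hypothetical. The sketch only shows how a metric learned on one labelled dataset can suppress nuisance dimensions when clustering a different, unlabelled one. It is not the method proposed here.

```python
import numpy as np

def make_dataset(centers, n_per_class, n_noise, noise_scale, rng):
    """Hypothetical toy data: clusters differ only in the first few features;
    the remaining n_noise features are high-variance nuisance dimensions."""
    X, y = [], []
    for label, mu in enumerate(centers):
        informative = rng.normal(mu, 0.5, size=(n_per_class, len(mu)))
        noise = rng.normal(0.0, noise_scale, size=(n_per_class, n_noise))
        X.append(np.hstack([informative, noise]))
        y.append(np.full(n_per_class, label))
    return np.vstack(X), np.concatenate(y)

def learn_diagonal_metric(X, y, eps=1e-8):
    """Fisher-score-style per-feature weights: between-class scatter divided by
    within-class scatter, normalised to sum to 1 (a stand-in for a learned network)."""
    overall_mean = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for c in np.unique(y):
        Xc = X[y == c]
        mean_c = Xc.mean(axis=0)
        between += len(Xc) * (mean_c - overall_mean) ** 2
        within += ((Xc - mean_c) ** 2).sum(axis=0)
    w = between / (within + eps)
    return w / w.sum()

def embed(X, w):
    # sqrt(sum_j w_j (x_j - x'_j)^2) is the plain Euclidean distance
    # after scaling feature j by sqrt(w_j).
    return X * np.sqrt(w)

def kmeans(Z, k, n_iter=100, seed=0):
    """Lloyd's algorithm with farthest-point initialisation."""
    rng = np.random.default_rng(seed)
    centers = [Z[rng.integers(len(Z))]]
    for _ in range(1, k):
        # Next center: the point farthest from all centers chosen so far.
        d = np.min([((Z - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(Z[np.argmax(d)])
    centers = np.array(centers)
    for _ in range(n_iter):
        dists = ((Z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        new_centers = np.array([
            Z[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels

def purity(labels, y):
    # Fraction of points belonging to the majority true class of their cluster.
    return sum(np.bincount(y[labels == j]).max() for j in np.unique(labels)) / len(y)

rng = np.random.default_rng(42)
# Labelled source dataset: 3 clusters, 2 informative + 50 nuisance features.
X_src, y_src = make_dataset([(0, 0), (6, 0), (0, 6)], 200, 50, 3.0, rng)
# Unlabelled target dataset: 4 different clusters, same nuisance structure.
X_tgt, y_tgt = make_dataset([(-5, -5), (5, 5), (5, -5), (-5, 5)], 100, 50, 3.0, rng)

w = learn_diagonal_metric(X_src, y_src)     # uses source labels only
labels = kmeans(embed(X_tgt, w), k=4)       # metric transferred to the target
purity_learned = purity(labels, y_tgt)      # y_tgt used only for evaluation
purity_raw = purity(kmeans(X_tgt, k=4), y_tgt)
print(f"weight on informative features: {w[:2].sum():.3f}")
print(f"purity with learned metric: {purity_learned:.3f}, raw Euclidean: {purity_raw:.3f}")
```

The target labels are never used to build the metric or the clusters; they only score the result. The raw-Euclidean purity is printed for comparison, and how much it degrades depends on the seed, the noise scale and the number of nuisance dimensions.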

翻译:在高维度空间群集是一项艰巨的任务;通常的距离指标在维度的诅咒下可能不再适合。 事实上,选择衡量标准至关重要,而且高度依赖数据集特性。 但是,可以使用单一的衡量标准对不同域的多个数据集进行正确分组。 我们提议这样做,为学习可转让的衡量标准提供一个框架。 我们显示,我们可以在标签数据集上学习一个衡量标准,然后将它应用到不同的数据集群集中,使用嵌入空间来描述通用意义上的预期集聚特征。 我们学习并测试不同复杂数据集(合成、MNIST、SVHN、聚niglot)的这类衡量标准,并取得与最新数据相竞的结果,同时只使用少量标签培训数据集和浅端网络。