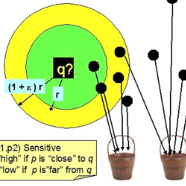

Approximate nearest neighbour (ANN) search is an essential component of search engines, recommendation systems, etc. Many recent works focus on learning-based data-distribution-dependent hashing and achieve good retrieval performance. However, due to increasing demand for users' privacy and security, we often need to remove users' data information from Machine Learning (ML) models to satisfy specific privacy and security requirements. This need requires the ANN search algorithm to support fast online data deletion and insertion. Current learning-based hashing methods need retraining the hash function, which is prohibitable due to the vast time-cost of large-scale data. To address this problem, we propose a novel data-dependent hashing method named unfolded self-reconstruction locality-sensitive hashing (USR-LSH). Our USR-LSH unfolded the optimization update for instance-wise data reconstruction, which is better for preserving data information than data-independent LSH. Moreover, our USR-LSH supports fast online data deletion and insertion without retraining. To the best of our knowledge, we are the first to address the machine unlearning of retrieval problems. Empirically, we demonstrate that USR-LSH outperforms the state-of-the-art data-distribution-independent LSH in ANN tasks in terms of precision and recall. We also show that USR-LSH has significantly faster data deletion and insertion time than learning-based data-dependent hashing.

翻译:近似最近邻(ANN)搜索是搜索引擎、推荐系统等的重要组成部分。许多最近的工作集中在基于学习的数据分布依赖哈希上,并实现了良好的检索性能。然而,由于对用户隐私和安全的需求不断增加,我们经常需要从机器学习(ML)模型中移除用户数据信息以满足特定的隐私和安全要求。这种需求需要 ANN 搜索算法支持快速在线数据删除和插入。当前的学习型哈希方法需要重新训练哈希函数,这是由于大规模数据的训练时间成本巨大而难以实现的。为了解决这个问题,我们提出了一种新的数据依赖哈希方法,名为自我重构展开局部敏感哈希(USR-LSH)。我们的 USR-LSH 展开了实例级数据重构的优化更新,这对于保留数据信息比数据无关 LSH 更好。此外,我们的 USR-LSH 支持快速在线数据删除和插入,无需重新训练。据我们所知,我们是第一个关注检索问题机器遗忘的人。实验证明,USR-LSH 在ANN任务中的精度和召回率方面优于最先进的数据分布无关LSH。我们还证明,USR-LSH的数据删除和插入速度比基于学习的数据依赖哈希明显快。