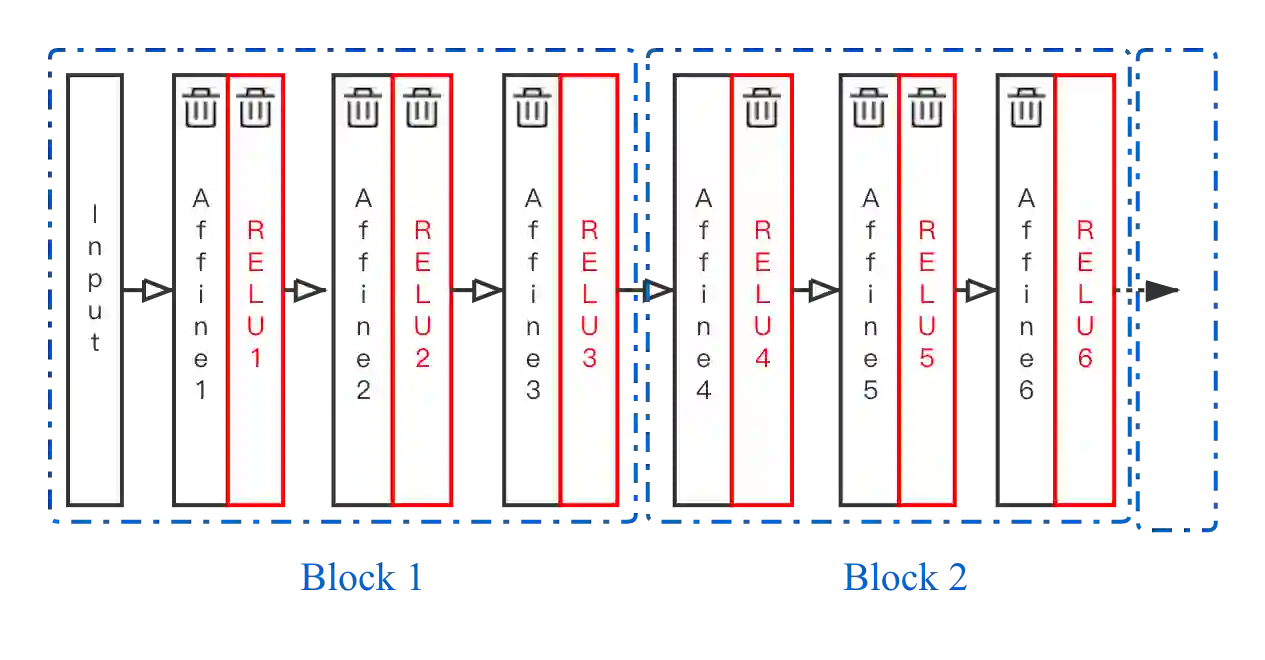

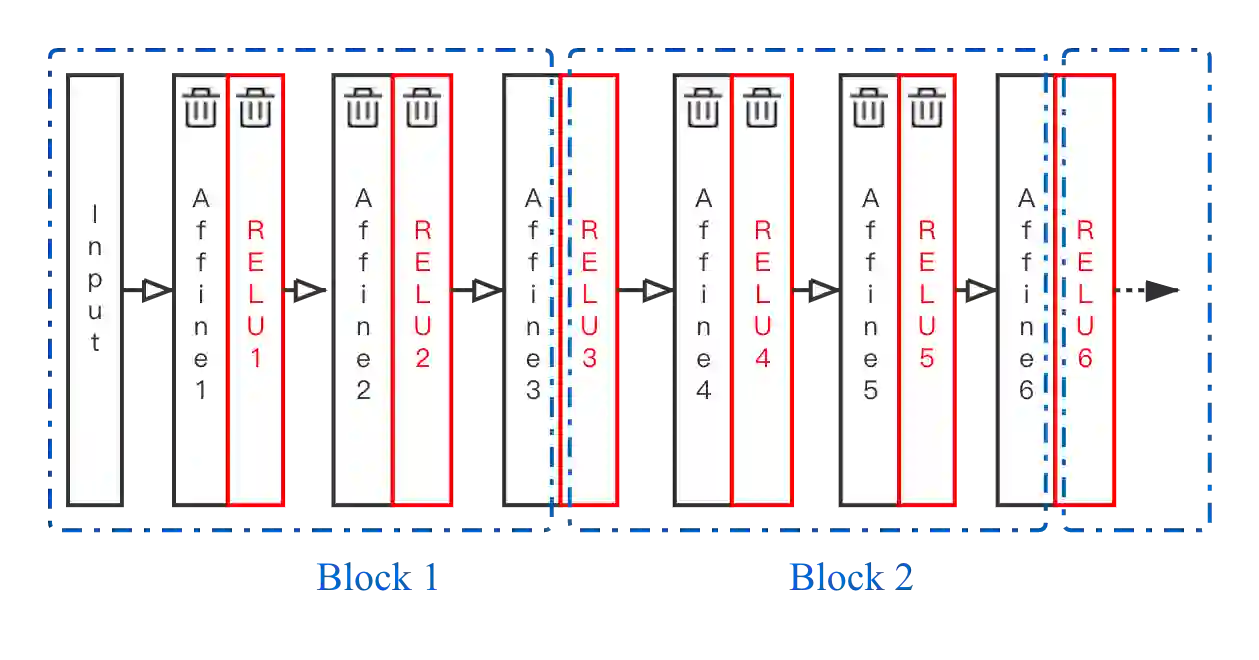

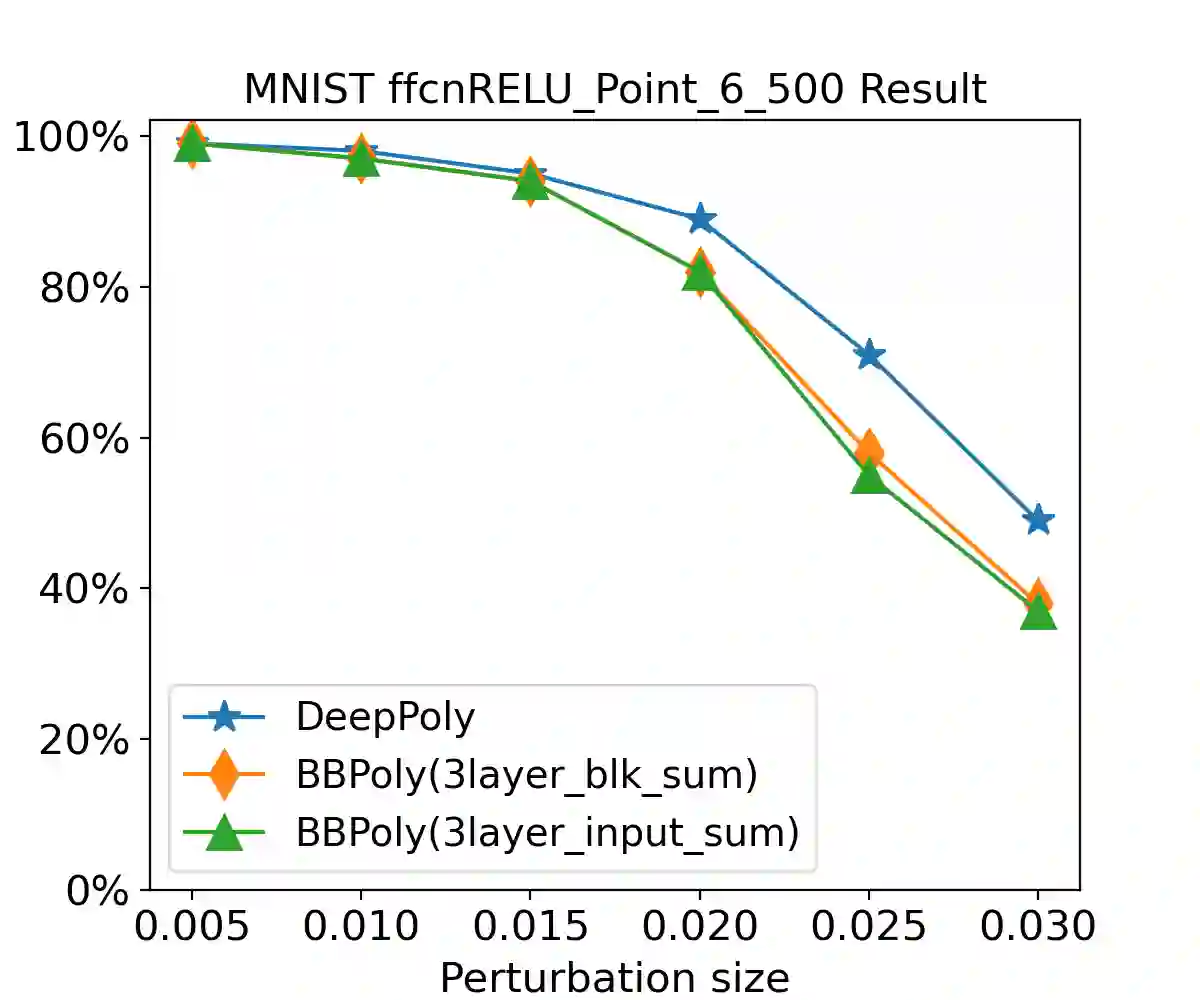

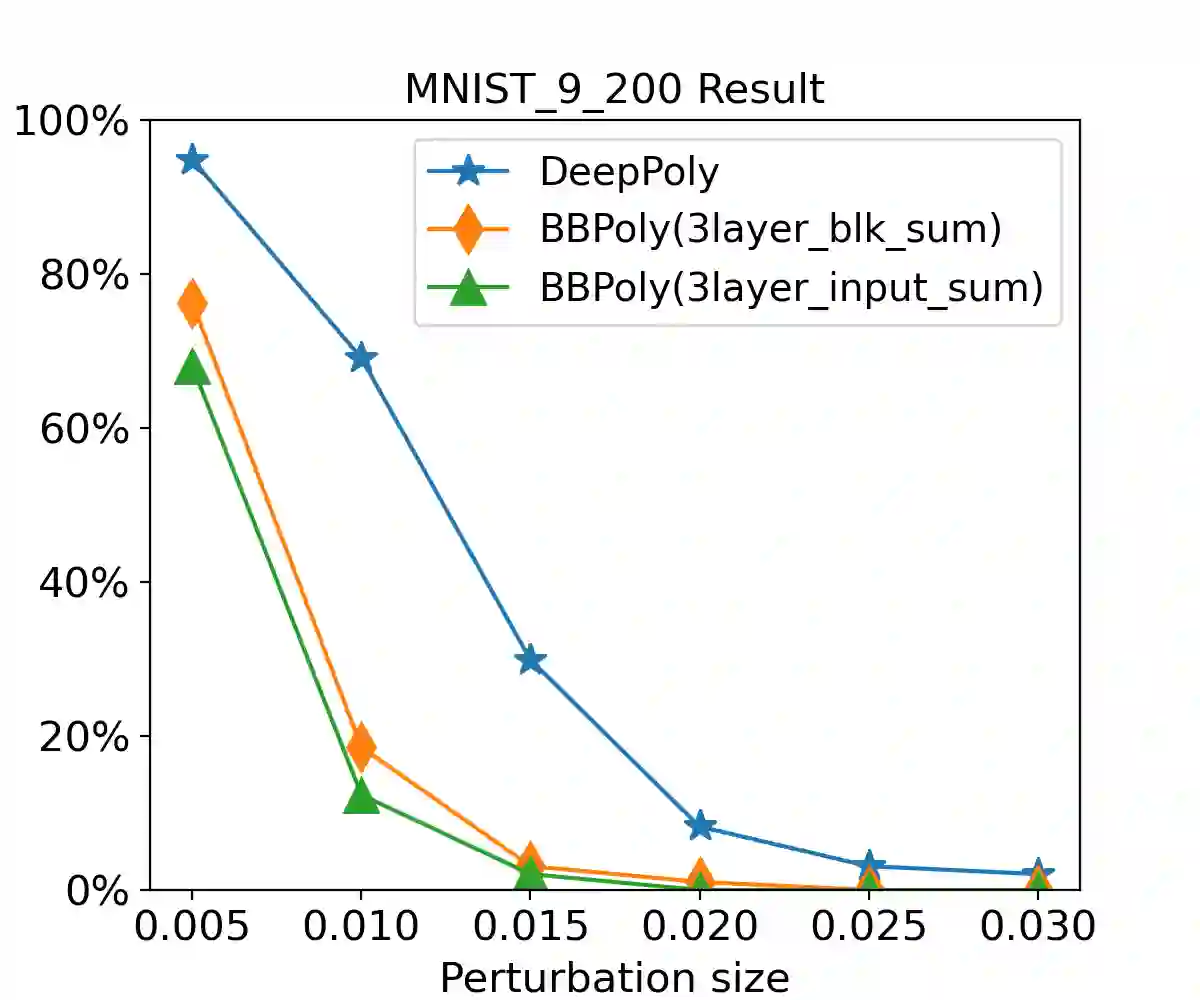

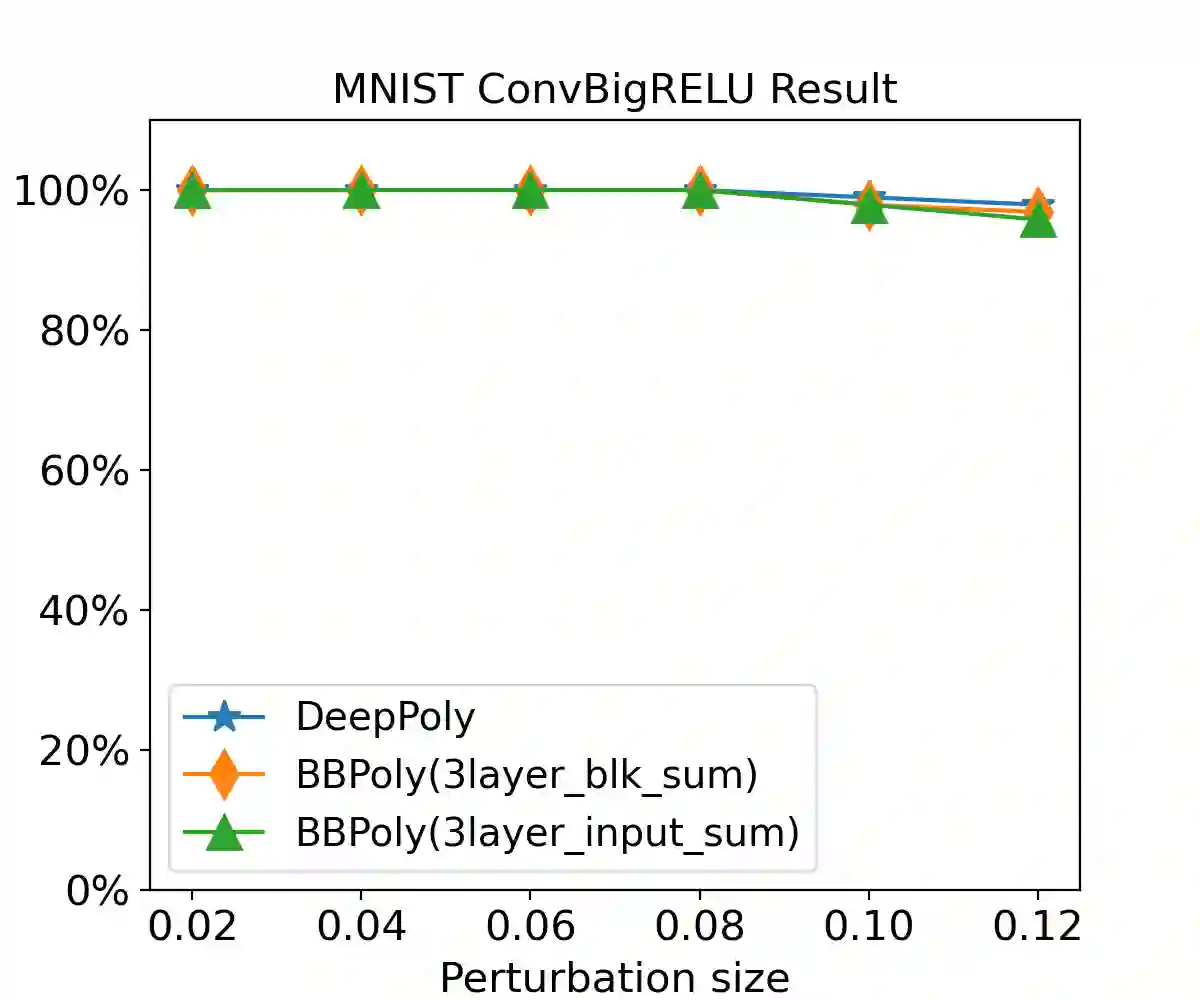

As neural networks grow deeper and larger, neural network analyzers urgently need to scale with them. The main technical insight of our method is to analyze neural networks modularly: we segment a network into blocks and conduct the analysis block by block. In particular, we propose a network block summarization technique that captures the behavior within a network block as a block summary and leverages that summary to speed up the analysis. We instantiate our method in a CPU version of the state-of-the-art analyzer DeepPoly and name the resulting system Bounded-Block Poly (BBPoly). We evaluate BBPoly extensively under various experimental settings. The results indicate that our method achieves precision comparable to DeepPoly while running faster and requiring fewer computational resources. For example, BBPoly can analyze very large neural networks such as SkipNet or ResNet, which contain up to one million neurons, in about one hour or less per input image, whereas DeepPoly can take up to 40 hours to analyze a single image.
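The abstract does not spell out BBPoly's algorithm, so the following is only a rough illustration of the block idea under stated assumptions. It is a simplified DeepPoly-style analysis in which symbolic back-substitution stops at the start of the current block, and each block's input is over-approximated by a concrete box. This "bounded back-substitution" is a stand-in and not BBPoly's actual block summarization. The names `bounded_block_analysis` and `relu_relaxation` and the `block_size` parameter are hypothetical. The network is assumed to be fully connected, with a ReLU after every layer except the last.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (W, b): pre-activation x = W v + b


def relu_relaxation(l: float, u: float) -> Tuple[float, float, float]:
    """DeepPoly-style relaxation of v = relu(x) for x in [l, u].

    Returns (ua, ub, la) such that la * x <= v <= ua * x + ub.
    """
    if l >= 0:  # stably active: v = x
        return 1.0, 0.0, 1.0
    if u <= 0:  # stably inactive: v = 0
        return 0.0, 0.0, 0.0
    ua = u / (u - l)
    ub = -u * l / (u - l)
    la = 1.0 if u > -l else 0.0  # lower slope with the smaller relaxation area
    return ua, ub, la


def bounded_block_analysis(layers: List[Layer], in_lo: Vector, in_hi: Vector,
                           block_size: int) -> Tuple[Vector, Vector]:
    """Bound the outputs of a ReLU network (hypothetical simplified sketch).

    Back-substitution only reaches back to the start of the current block;
    the block input there is over-approximated by a concrete box.
    """
    box: Dict[int, Tuple[Vector, Vector]] = {0: (in_lo, in_hi)}  # v_j bounds at block starts
    relax: Dict[int, List[Tuple[float, float, float]]] = {}      # relaxations of v_j = relu(x_j)
    start = 0

    def backsub(c: Vector, d: float, k: int, upper: bool) -> float:
        # Bound c . v_{k-1} + d by substituting relaxations back to v_start.
        j = k - 1
        while j > start:
            # Replace each v_j[i] by its upper or lower linear relaxation in x_j[i].
            cx = []
            for i, ci in enumerate(c):
                ua, ub, la = relax[j][i]
                if (ci >= 0) == upper:
                    cx.append(ci * ua)
                    d += ci * ub
                else:
                    cx.append(ci * la)
            # Replace x_j = W_j v_{j-1} + b_j.
            W, b = layers[j - 1]
            c = [sum(cx[r] * W[r][col] for r in range(len(W))) for col in range(len(W[0]))]
            d += sum(cx[r] * b[r] for r in range(len(b)))
            j -= 1
        # Concretize the remaining linear form over the block-input box.
        lo, hi = box[start]
        return d + sum(ci * (hi[i] if (ci >= 0) == upper else lo[i]) for i, ci in enumerate(c))

    for k in range(1, len(layers) + 1):
        W, b = layers[k - 1]
        lx = [backsub(list(W[r]), b[r], k, upper=False) for r in range(len(W))]
        ux = [backsub(list(W[r]), b[r], k, upper=True) for r in range(len(W))]
        if k == len(layers):
            return lx, ux
        relax[k] = [relu_relaxation(l, u) for l, u in zip(lx, ux)]
        if k % block_size == 0:
            # Block boundary: summarize v_k by a concrete box, restart back-substitution here.
            start = k
            box[k] = ([max(l, 0.0) for l in lx], [max(u, 0.0) for u in ux])
    raise ValueError("network has no layers")


if __name__ == "__main__":
    net = [([[1.0, 1.0], [1.0, -1.0]], [0.0, 0.0]),
           ([[1.0, 1.0]], [0.0])]
    print(bounded_block_analysis(net, [-1.0, -1.0], [1.0, 1.0], block_size=2))  # ([0.0], [3.0])
    print(bounded_block_analysis(net, [-1.0, -1.0], [1.0, 1.0], block_size=1))  # ([0.0], [4.0])
```

Setting `block_size` to the network depth recovers full back-substitution to the input. Smaller blocks make each back-substitution cheaper but can loosen the bounds. In the toy network, the output bound widens from [0, 3] to [0, 4].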

翻译:随着神经网络的深度和广度的训练,神经网络分析器的可扩缩性是迫切需要的。我们方法的主要技术洞察力是模块分析神经网络,将网络分为各个区块,并对每个区块进行分析。特别是,我们建议使用网络块块总和技术来捕捉网络块内的行为,使用块状摘要并利用摘要来加速分析过程。我们用CPU转换最先进的分析器DeepPolly,并命名我们的系统为Bound-Block Poly(BBBPolly)。我们广泛评估了各种实验设置的BBBBoboly。实验结果表明,我们的方法的精确度与DeepPolly相当,但运行速度更快,需要较少计算资源。例如,BBoboly可以分析真正的大型神经网络,如SppNet或ResNet,它包含100万个神经元,每个输入图像不到1小时,而Deeppoly则需要花费40小时来分析一个图像。