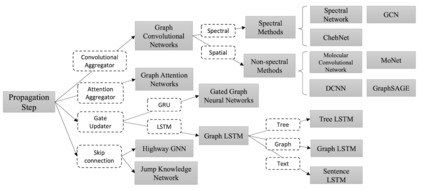

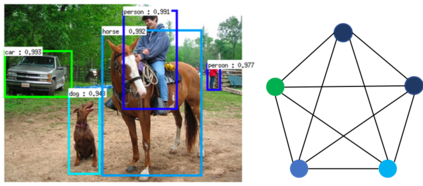

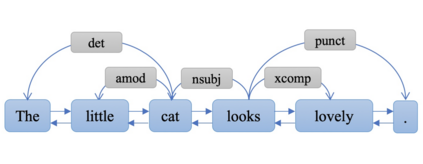

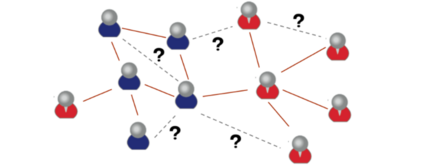

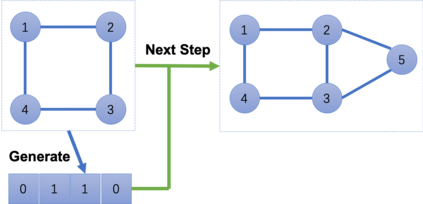

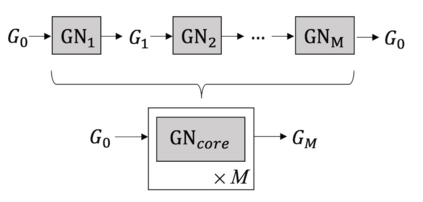

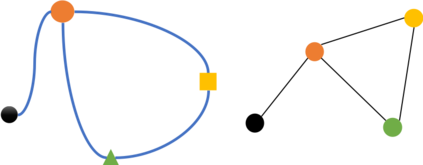

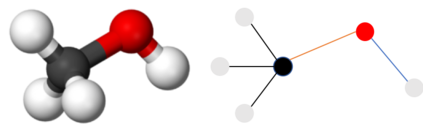

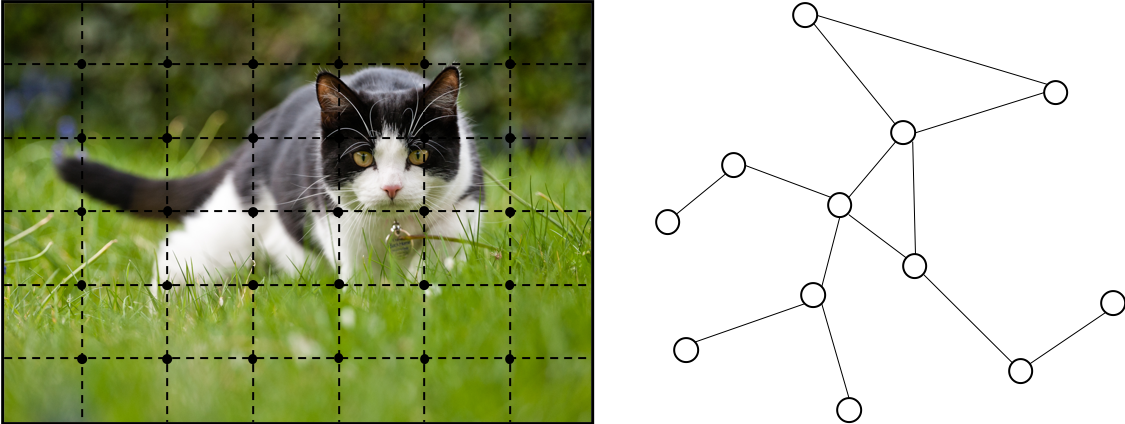

Many learning tasks require dealing with graph data, which contains rich relational information among elements. Modeling physics systems, learning molecular fingerprints, predicting protein interfaces, and classifying diseases all require a model that learns from graph inputs. In other domains, such as learning from non-structural data like text and images, reasoning on extracted structures, such as the dependency trees of sentences and the scene graphs of images, is an important research topic that also needs graph reasoning models. Graph neural networks (GNNs) are connectionist models that capture the dependence structure of graphs via message passing between the nodes of the graph. Unlike standard neural networks, GNNs retain a state that can represent information from a node's neighborhood at arbitrary depth. Although the primitive GNNs were found difficult to train to a fixed point, recent advances in network architectures, optimization techniques, and parallel computation have enabled successful learning with them. In recent years, systems based on graph convolutional networks (GCNs) and gated graph neural networks (GGNNs) have demonstrated ground-breaking performance on many of the tasks mentioned above. In this survey, we provide a detailed review of existing graph neural network models, systematically categorize their applications, and propose four open problems for future research.
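To make the message-passing idea concrete, the following is a minimal sketch rather than any model from the survey. It assumes a toy path graph, scalar node states, and plain mean aggregation with no learned weights; the names `message_passing_step`, `adjacency`, and `states` are hypothetical. It only illustrates how repeated rounds let a node's state reflect progressively more distant neighbors.

```python
from typing import Dict, List


def message_passing_step(
    adjacency: Dict[int, List[int]], states: Dict[int, float]
) -> Dict[int, float]:
    """Run one round: each node averages its own state with its neighbors' states.

    This is an unweighted mean aggregator chosen for illustration only;
    GCN and GGNN use learned transformations instead.
    """
    new_states = {}
    for node, neighbors in adjacency.items():
        messages = [states[n] for n in neighbors]  # messages received from neighbors
        new_states[node] = (states[node] + sum(messages)) / (1 + len(messages))
    return new_states


# Hypothetical toy graph: a path 0 - 1 - 2 - 3, with a signal only at node 0.
adjacency = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
states = {0: 1.0, 1: 0.0, 2: 0.0, 3: 0.0}

# Keep the state after every round so the spread of information is visible.
history = [states]
for _ in range(3):
    history.append(message_passing_step(adjacency, history[-1]))

for round_index, round_states in enumerate(history):
    print(round_index, round_states)
```

In this toy setting node 3 sits three hops from node 0, so its state stays at zero for the first two rounds and becomes nonzero only after the third. The number of rounds therefore bounds how far information travels, which is one simple way to read the "arbitrary depth" claim above.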
