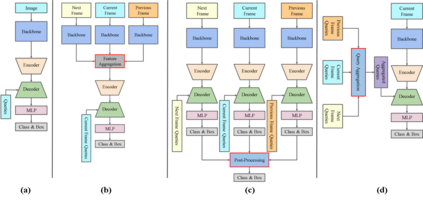

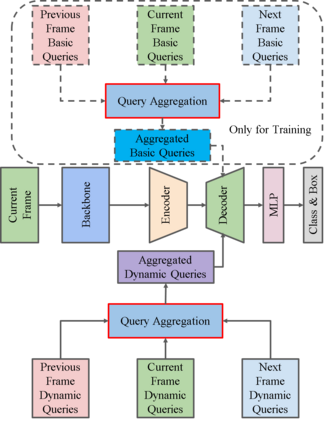

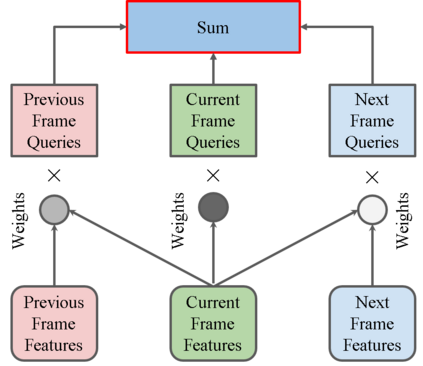

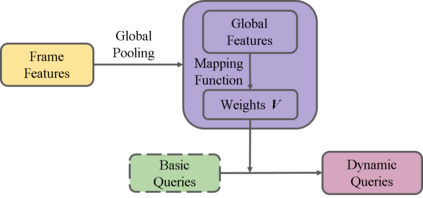

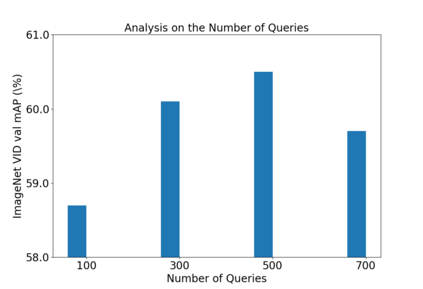

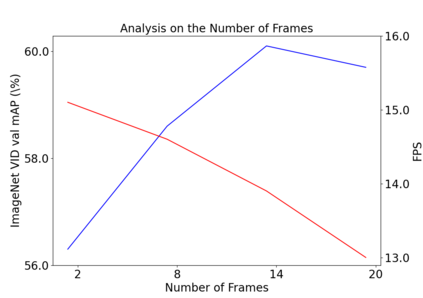

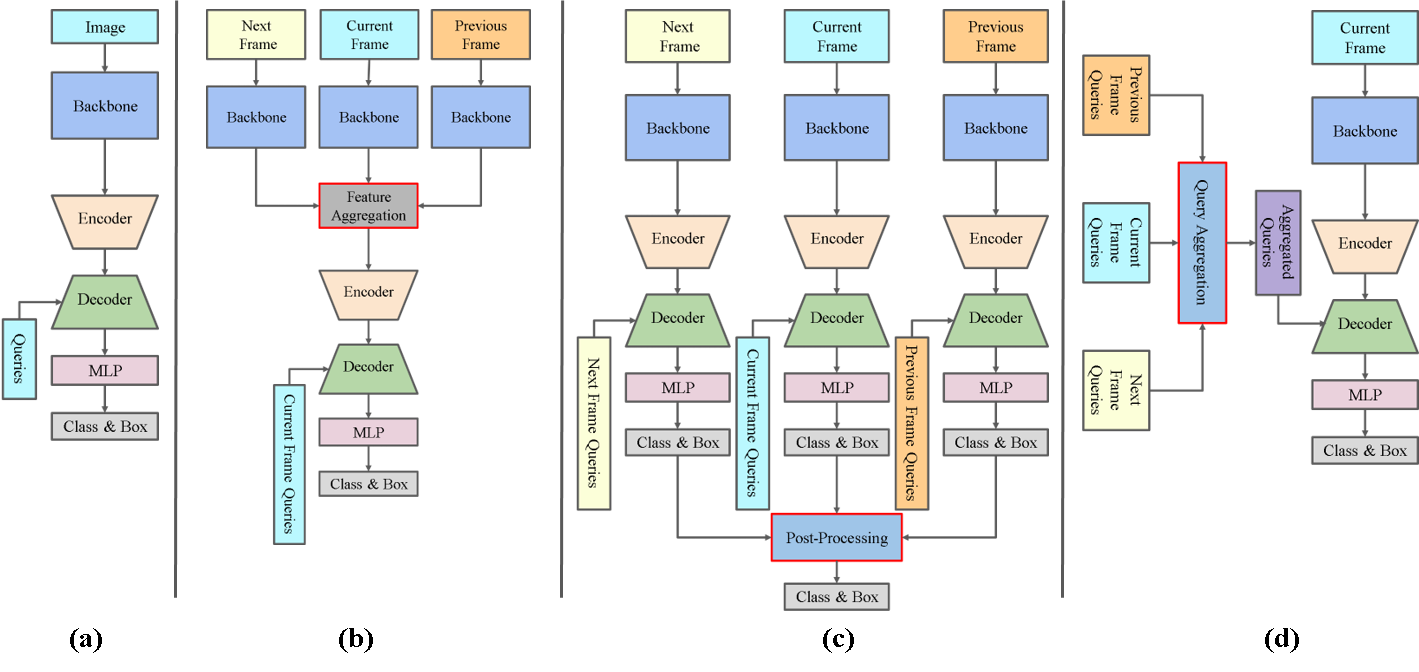

Video object detection must cope with feature degradation that rarely occurs in still images. One solution is to exploit temporal information and fuse features from neighboring frames. As Transformer-based object detectors have achieved better performance on image-domain tasks, recent works have begun to extend them to video object detection. However, existing Transformer-based video object detectors still follow the same pipeline as classical object detectors, such as enhancing object feature representations through aggregation. In this work, we take a different perspective on video object detection: we improve the quality of the queries for Transformer-based models through aggregation. To this end, we first propose a vanilla query aggregation module that computes a weighted average of the queries according to the features of the neighboring frames. We then extend the vanilla module to a more practical version that generates and aggregates queries according to the features of the input frames. Extensive experimental results validate the effectiveness of the proposed methods: on the challenging ImageNet VID benchmark, integrating our modules improves current state-of-the-art Transformer-based object detectors by more than 2.4% on mAP and 4.2% on AP50.
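To make the idea of the vanilla query aggregation module concrete, here is a minimal sketch of a weighted average of per-frame queries. The abstract only states that queries are averaged with weights derived from the features of neighboring frames. Everything else here is an assumption made for illustration: the weighting scheme (cosine similarity to a reference frame followed by a softmax), the use of one pooled feature vector per frame, and the names `frame_weights` and `aggregate_queries`. Plain Python lists are used for readability; a real detector would operate on tensors.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def frame_weights(frame_features, ref_index=0):
    """Assumed weighting: similarity of each frame's pooled feature
    to the reference frame's feature, normalized with a softmax."""
    ref = frame_features[ref_index]
    return softmax([cosine(f, ref) for f in frame_features])


def aggregate_queries(queries_per_frame, frame_features, ref_index=0):
    """Weighted average of queries across frames.

    queries_per_frame: [T][N][D] nested lists (T frames, N queries, D dims)
    frame_features:    [T][C] nested lists (one pooled feature per frame)
    Returns an [N][D] list of aggregated queries.
    """
    weights = frame_weights(frame_features, ref_index)
    num_queries = len(queries_per_frame[0])
    dim = len(queries_per_frame[0][0])
    out = [[0.0] * dim for _ in range(num_queries)]
    for w, frame_queries in zip(weights, queries_per_frame):
        for i, query in enumerate(frame_queries):
            for d, value in enumerate(query):
                out[i][d] += w * value
    return out


# Two frames with identical features get equal weights,
# so the result is the plain mean of the two queries.
features = [[1.0, 0.0], [1.0, 0.0]]
queries = [[[1.0, 0.0]], [[3.0, 2.0]]]
aggregated = aggregate_queries(queries, features)
```

Under this assumed weighting, frames whose features resemble the reference frame contribute more to the aggregated queries, while dissimilar frames (for example, blurred or occluded ones) contribute less.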
