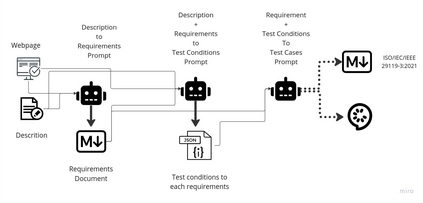

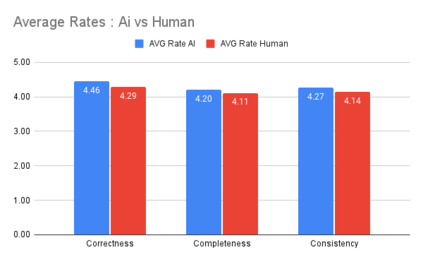

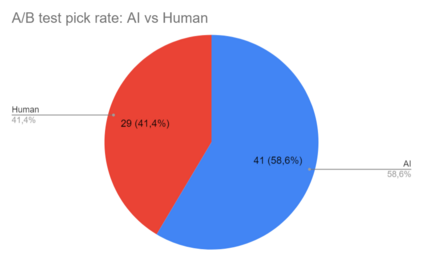

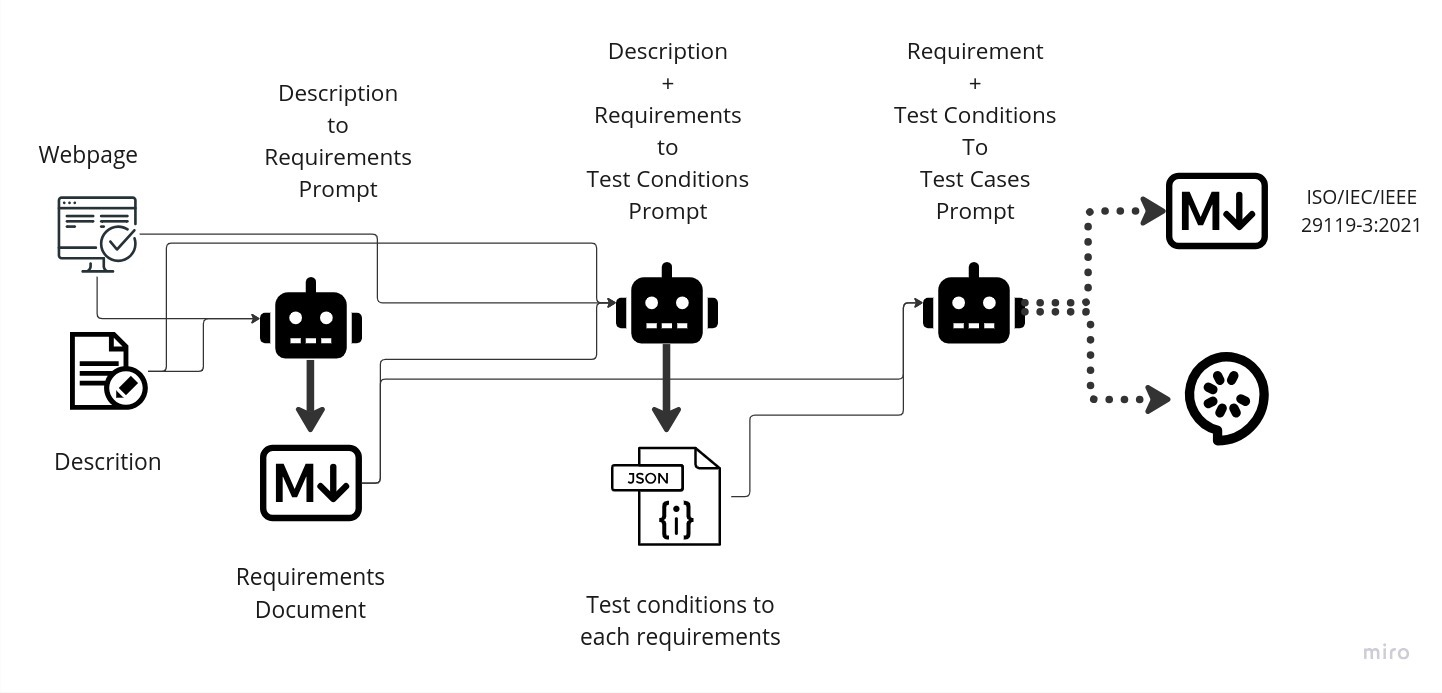

This paper presents a detailed case study examining the application of Large Language Models (LLMs) in the construction of test cases within the context of software engineering. LLMs, characterized by their advanced natural language processing capabilities, are increasingly garnering attention as tools to automate and enhance various aspects of the software development life cycle. Leveraging a case study methodology, we systematically explore the integration of LLMs in the test case construction process, aiming to shed light on their practical efficacy, challenges encountered, and implications for software quality assurance. The study encompasses the selection of a representative software application, the formulation of test case construction methodologies employing LLMs, and the subsequent evaluation of outcomes. Through a blend of qualitative and quantitative analyses, this study assesses the impact of LLMs on test case comprehensiveness, accuracy, and efficiency. Additionally, delves into challenges such as model interpretability and adaptation to diverse software contexts. The findings from this case study contributes with nuanced insights into the practical utility of LLMs in the domain of test case construction, elucidating their potential benefits and limitations. By addressing real-world scenarios and complexities, this research aims to inform software practitioners and researchers alike about the tangible implications of incorporating LLMs into the software testing landscape, fostering a more comprehensive understanding of their role in optimizing the software development process.

翻译:暂无翻译