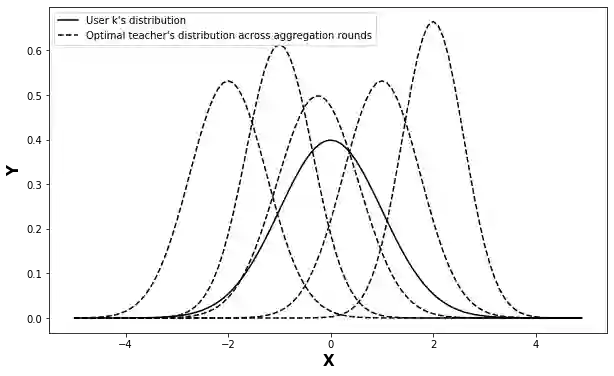

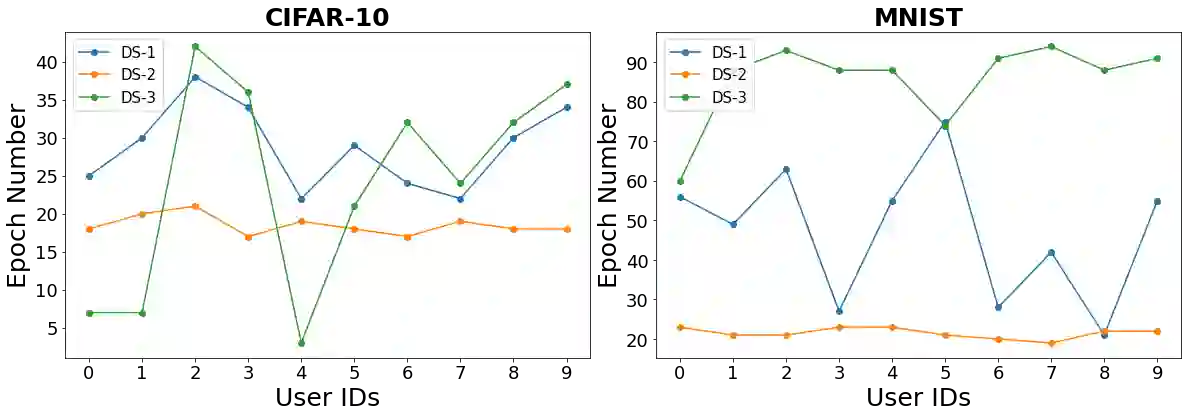

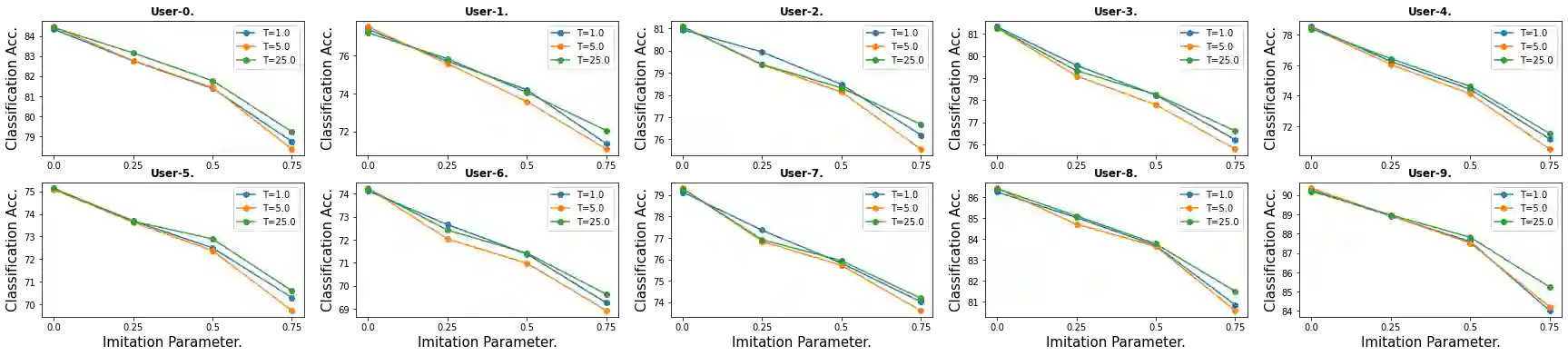

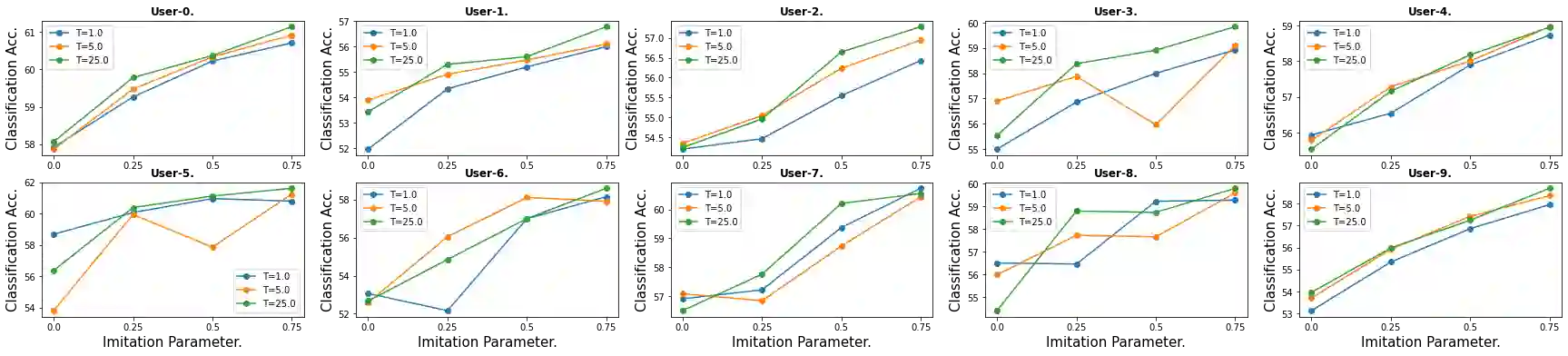

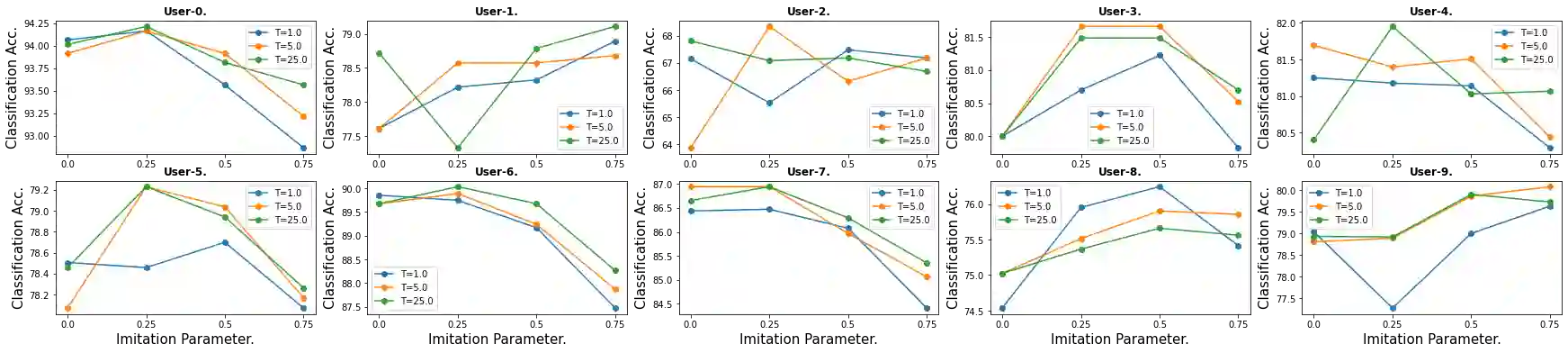

Federated learning (FL) is a decentralized, privacy-preserving learning technique in which clients learn a joint collaborative model through a central aggregator without sharing their data. In this setting, all clients learn a single common predictor (FedAvg), which does not generalize well to each client's local data because of the statistical heterogeneity of data across clients. In this paper, we address this problem with PersFL, a discrete two-stage personalized learning algorithm. In the first stage, PersFL finds the optimal teacher model for each client during the FL training phase. In the second stage, PersFL distills the useful knowledge from the optimal teachers into each user's local model. The teacher model provides each client with a rich, high-level representation that the client can easily adapt to its local model, which overcomes the statistical heterogeneity across clients. We evaluate PersFL on the CIFAR-10 and MNIST datasets using three data-splitting strategies that control the diversity between clients' data distributions. We empirically show that PersFL outperforms FedAvg and three state-of-the-art personalization methods, pFedMe, Per-FedAvg, and FedPer, on the majority of data splits with minimal communication cost. Further, we study how PersFL performs with different distillation objectives, how this performance is affected by the equitable notion of fairness among clients, and how many communication rounds PersFL requires. PersFL code is available at https://tinyurl.com/hdh5zhxs for public use and validation.
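The first stage can be illustrated with a small NumPy sketch. The FedAvg step is the standard dataset-size-weighted average of client parameters. The abstract does not say how the "optimal teacher" is chosen, so the rule below is an assumption for illustration only: each client keeps the global checkpoint (one per FL round) with the lowest loss on its own validation data. The names `fedavg`, `select_teachers`, and `mse` are hypothetical, and models are reduced to flat parameter vectors.

```python
import numpy as np

def fedavg(client_weights, client_sizes):
    """Standard FedAvg aggregation: average flat parameter vectors,
    weighting each client by the size of its local dataset."""
    sizes = np.asarray(client_sizes, dtype=float)
    stacked = np.stack(client_weights)  # shape (num_clients, num_params)
    return (sizes[:, None] * stacked).sum(axis=0) / sizes.sum()

def mse(w, X, y):
    """Example loss for a linear model (hypothetical stand-in for a real network)."""
    return float(np.mean((X @ w - y) ** 2))

def select_teachers(global_checkpoints, client_val_sets, loss_fn=mse):
    """ASSUMED stage-1 rule (not confirmed by the abstract): for each client,
    return the index of the global checkpoint with the lowest local validation loss."""
    teachers = []
    for X_val, y_val in client_val_sets:
        losses = [loss_fn(w, X_val, y_val) for w in global_checkpoints]
        teachers.append(int(np.argmin(losses)))
    return teachers

if __name__ == "__main__":
    # Two clients with 1 and 3 samples: the larger client dominates the average.
    print(fedavg([np.array([1.0, 0.0]), np.array([3.0, 2.0])], [1, 3]))
    # Two checkpoints; each client's data is fit exactly by a different one.
    ckpts = [np.array([1.0]), np.array([2.0])]
    X = np.array([[1.0], [2.0]])
    print(select_teachers(ckpts, [(X, np.array([1.0, 2.0])), (X, np.array([2.0, 4.0]))]))
```

Because each client may pick a different round, heterogeneous clients can end up with different teachers even though only one global model is trained.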
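For the second stage, the abstract notes that several distillation objectives are studied but does not name them. One widely used choice is Hinton-style knowledge distillation, which mixes cross-entropy on the true labels with a temperature-softened KL divergence from the teacher's predictions. The sketch below implements that objective in NumPy; the function name `kd_loss` and the defaults `T=3.0` and `alpha=0.5` are illustrative assumptions, not values from PersFL.

```python
import numpy as np

def log_softmax(z, T=1.0):
    """Numerically stable log-softmax of logits divided by temperature T."""
    z = np.asarray(z, dtype=float) / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def kd_loss(student_logits, teacher_logits, labels, T=3.0, alpha=0.5):
    """Hinton-style distillation loss (one common objective, assumed here):
    alpha * CE(student, labels) + (1 - alpha) * T^2 * KL(teacher_T || student_T)."""
    log_p_t = log_softmax(teacher_logits, T)
    log_p_s_T = log_softmax(student_logits, T)
    # KL divergence between softened teacher and student distributions, batch mean.
    kl = np.sum(np.exp(log_p_t) * (log_p_t - log_p_s_T), axis=-1).mean()
    # Ordinary cross-entropy of the student at temperature 1 on the hard labels.
    log_p_s = log_softmax(student_logits)
    ce = -log_p_s[np.arange(len(labels)), labels].mean()
    # The T^2 factor keeps soft-target gradients on a comparable scale to CE.
    return alpha * ce + (1 - alpha) * T**2 * kl

if __name__ == "__main__":
    student = np.array([[0.2, 1.5, -0.3], [1.0, 0.0, 0.5]])
    teacher = np.array([[0.0, 2.0, -1.0], [2.0, -0.5, 0.1]])
    labels = np.array([1, 0])
    print(kd_loss(student, teacher, labels))
```

In a personalized setting, each client would minimize this loss on its own data, with `teacher_logits` produced by the teacher selected for that client.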

翻译:联邦学习(FL)是一种分散的隐私保护学习技术,客户通过中央聚合器学习联合协作模式,但不分享数据。在这个环境中,所有客户学习单一的共同预测器(FedAvg),由于客户之间统计数据差异性强,无法对每个客户的本地数据进行全面概括。在本文中,我们与PersFL(一个分立的两阶段个性化学习算法)一起解决这个问题。在第一阶段,PersFL(PersFL)找到每个客户在FL培训阶段通过中央聚合器学习一个联合合作模式学习一个联合协作模式。在第二阶段,PersFL(PersFL)将最佳教师的有用知识注入每个用户的本地模型(FedAvAvg)中。 教师模型为每个客户提供了一些丰富、高层次的代表,客户可以很容易地适应其本地模型,从而克服不同客户之间的统计差异性能。 我们用三个数据分享策略来控制客户之间数据传播的多样化。 我们从经验上显示PersFL(F)超越了FA5最佳教师的实用知识, 和三种个人业绩分析方法,通过FDFA-FAR-al-al-al-al-al-al-al(Pest-al-al) press-al-al-al-ald-fal-fal-fal-fal-fal) 3 和三种最低成本-de-al-al-fald-fal-fal-fal-al-fald-fal-al-al-al-fald-ald-ald-ald-ald-fx-f) 和三种方法进一步使用这种最低成本-de-fald-f-fal-s-fal-fx-fal-fal-fal-fal-f-fx-f-fal-fx-fal-fal-fal-fal-fal-fal-fal-fal-f-f-fal-f-f-f-f-f-f-f-f-f-f-f-f-f-f-f-l-l-f-l-fal-f-f-f-f-f-fal-f-f-f-