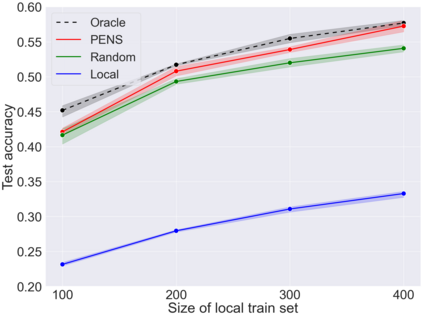

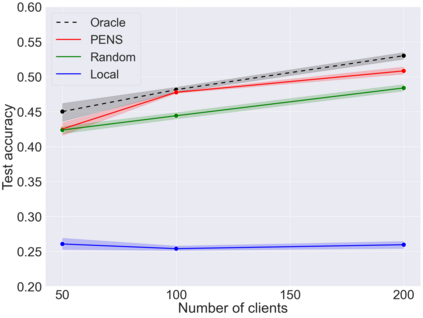

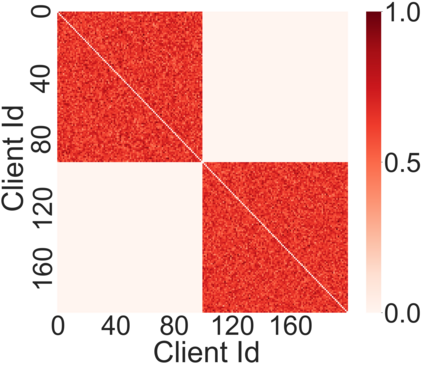

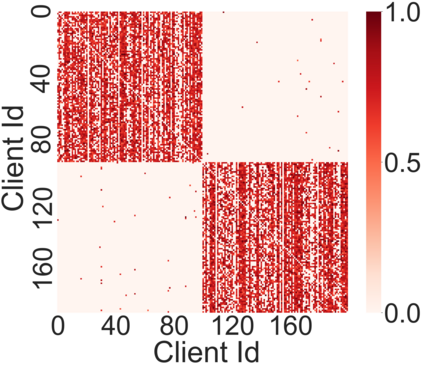

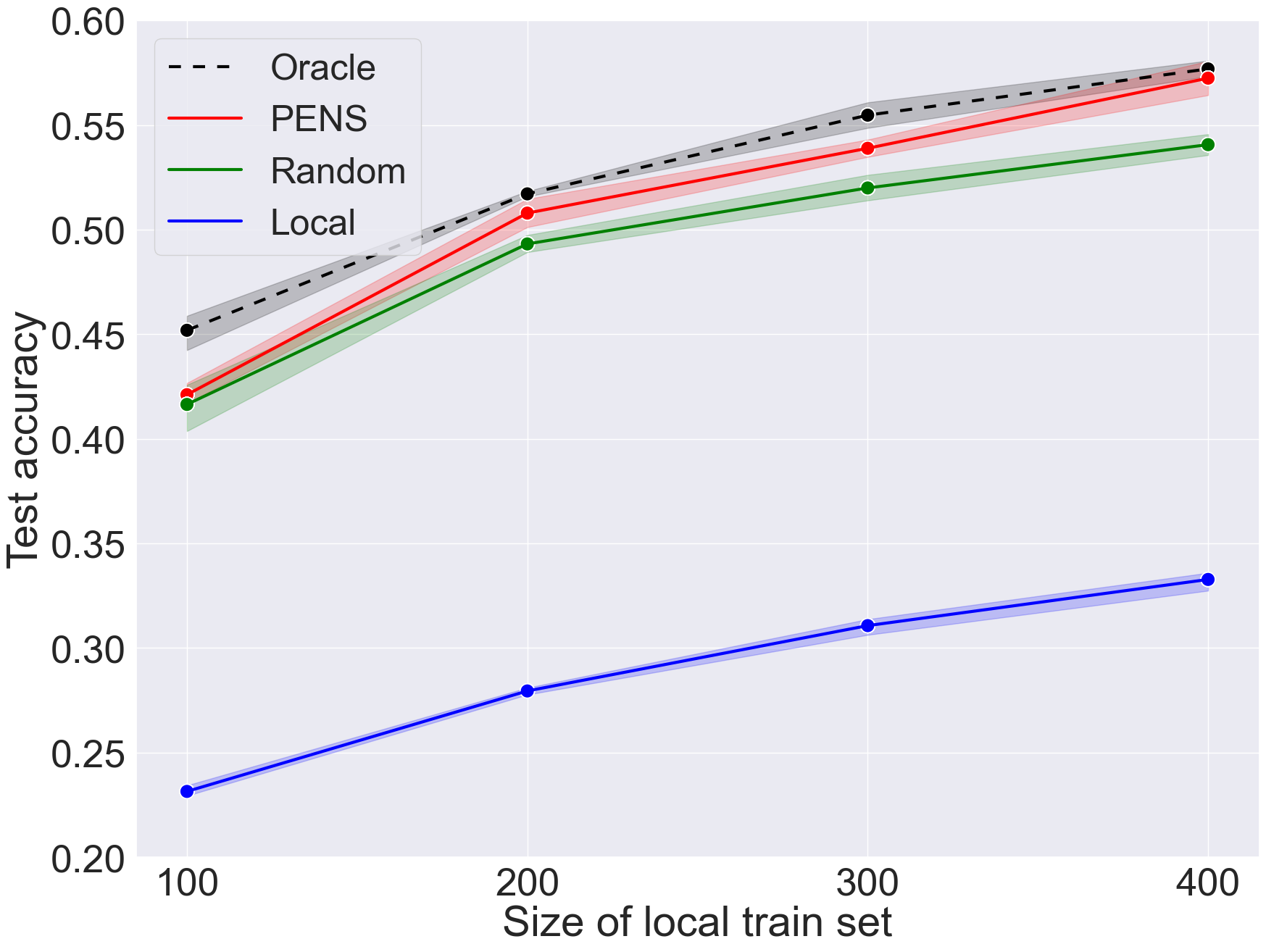

We tackle the non-convex problem of learning a personalized deep learning model in a decentralized setting. More specifically, we study decentralized federated learning, a peer-to-peer setting in which data is distributed among many clients and no central server orchestrates the training. In real-world scenarios, the data distributions are often heterogeneous across clients. We therefore study how to efficiently learn a model in a peer-to-peer system with non-IID client data. We propose a method named Performance-Based Neighbor Selection (PENS), in which clients with similar data distributions detect each other and cooperate by evaluating their training losses on each other's data, so that each client learns a model suited to its local data distribution. Our experiments on benchmark datasets show that the proposed method achieves higher accuracy than strong baselines.
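The loss-based neighbor detection described above can be illustrated with a small sketch. This is not the authors' implementation: the abstract does not specify the selection rule, so the sketch assumes each client scores models received from peers by their loss on its own local data and keeps the `top_m` lowest-loss peers. The names `mse_loss`, `select_neighbors`, `average_models`, and `top_m` are hypothetical, and a scalar linear model stands in for a deep network.

```python
from statistics import fmean


def mse_loss(weight, data):
    """Mean squared error of the toy model y = weight * x on (x, y) pairs."""
    return fmean((weight * x - y) ** 2 for x, y in data)


def select_neighbors(local_data, peer_models, top_m):
    """Return ids of the top_m peers whose models have the lowest loss on local_data.

    Assumption: a low loss on local data signals a similar data distribution.
    """
    losses = {peer_id: mse_loss(w, local_data) for peer_id, w in peer_models.items()}
    return sorted(losses, key=losses.get)[:top_m]


def average_models(weights):
    """Merge the selected peers' models by plain averaging (an assumed aggregation rule)."""
    return fmean(weights)


# Toy setup: the local client's data follows y = 2x.
local_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

# Peers "a" and "c" were trained on similar data; "b" and "d" were not.
peer_models = {"a": 2.1, "b": -1.0, "c": 1.8, "d": -1.2}

neighbors = select_neighbors(local_data, peer_models, top_m=2)
merged = average_models([peer_models[p] for p in neighbors])
```

In this toy setup the two peers whose models fit the local data are selected and the dissimilar peers are ignored, which is the behavior the abstract attributes to clients with similar data distributions finding each other.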
