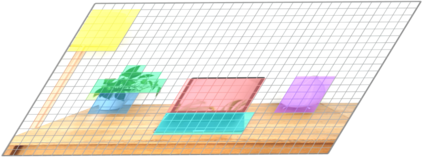

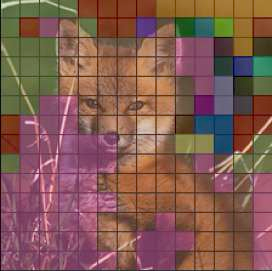

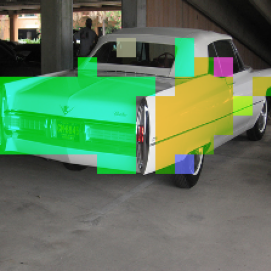

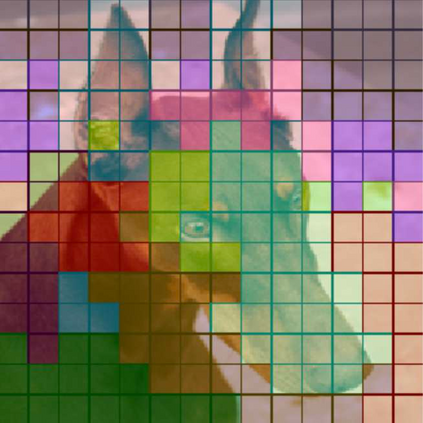

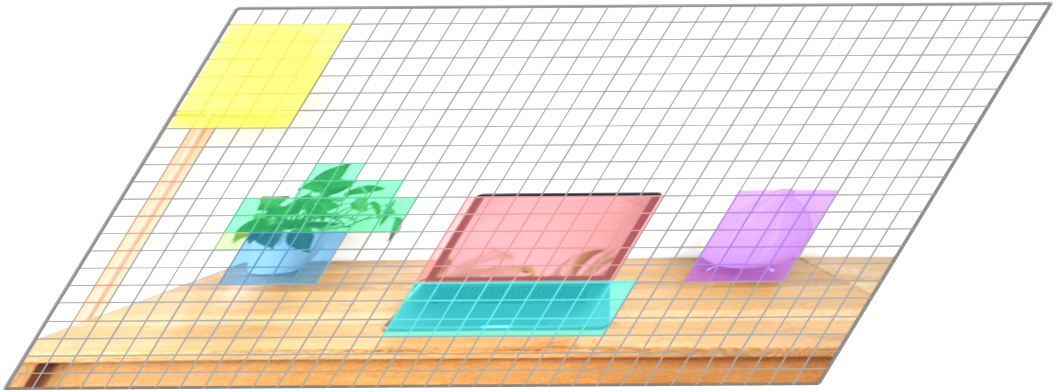

Humans possess a versatile mechanism for extracting structured representations of our visual world. When looking at an image, we can decompose the scene into entities and their parts and infer the dependencies between them. To mimic this capability, we propose Visual Dependency Transformers (DependencyViT), which can induce visual dependencies without any labels. We achieve this with a novel neural operator called *reversed attention* that naturally captures long-range visual dependencies between image patches. Specifically, we formulate it as a dependency graph. In this graph, each child token in reversed attention is trained to attend to its parent tokens and to send information to them following a normalized probability distribution, rather than gathering information as in conventional self-attention. With this design, hierarchies naturally emerge from the reversed attention layers, and a dependency tree is progressively induced from the leaf nodes to the root node in an unsupervised manner. DependencyViT offers several appealing benefits. (i) Entities and their parts in an image are represented by different subtrees, enabling part partitioning from dependencies. (ii) Dynamic visual pooling becomes possible: leaf nodes that rarely send messages can be pruned without hindering model performance. On this basis we propose the lightweight DependencyViT-Lite, which reduces the computational and memory footprints. (iii) DependencyViT works well under both self-supervised and weakly supervised pretraining on ImageNet. It demonstrates its effectiveness on 8 datasets and 5 tasks, such as unsupervised part and saliency segmentation, recognition, and detection.
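The contrast between *gathering* (conventional self-attention) and *sending* (reversed attention) can be illustrated with a short pure-Python sketch. This is a minimal illustration under stated assumptions, not the DependencyViT implementation. It uses a single head, no learned projections, and no message controller. All function names are hypothetical. It also uses the column sum of the child-to-parent matrix as a hypothetical proxy for how strongly a token acts as a parent when deciding which leaf-like tokens to prune. The paper's actual pruning criterion may differ.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def child_to_parent_probs(q: Matrix, k: Matrix) -> Matrix:
    """P[i][j] = probability that child token i picks token j as its parent.

    Each row is a normalized distribution over candidate parents
    (scaled dot product followed by softmax).
    """
    scale = 1.0 / math.sqrt(len(q[0]))
    return [softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]) for qi in q]


def self_attention(p: Matrix, v: Matrix) -> Matrix:
    """Conventional attention: token i GATHERS sum_j P[i][j] * v[j]  (out = P @ V)."""
    n, d = len(v), len(v[0])
    return [[sum(p[i][j] * v[j][c] for j in range(n)) for c in range(d)] for i in range(n)]


def reversed_attention(p: Matrix, v: Matrix) -> Matrix:
    """Reversed attention: child i SENDS v[i] to its parents, so token j
    receives sum_i P[i][j] * v[i]  (out = P^T @ V)."""
    n, d = len(v), len(v[0])
    return [[sum(p[i][j] * v[i][c] for i in range(n)) for c in range(d)] for j in range(n)]


def parent_scores(p: Matrix) -> List[float]:
    """Column sums of P: total probability mass each token receives as a parent.
    A token that no child selects (score near 0) behaves like a leaf."""
    n = len(p)
    return [sum(p[i][j] for i in range(n)) for j in range(n)]


def prune_tokens(p: Matrix, keep: int) -> List[int]:
    """HYPOTHETICAL pruning rule: keep the `keep` tokens with the highest
    parent score and drop the rest (the most leaf-like tokens).
    Returns the kept indices in their original order."""
    scores = parent_scores(p)
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    return sorted(ranked[:keep])


# Toy example: 3 tokens whose keys make token 2 the preferred parent of every child.
q = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
k = [[0.0, 0.0], [0.0, 0.0], [10.0, 10.0]]
v = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

P = child_to_parent_probs(q, k)
print(parent_scores(P))          # approx [0, 0, 3]: token 2 acts as the root
print(self_attention(P, v))      # every token gathers approx v[2] = [1, 1]
print(reversed_attention(P, v))  # token 2 receives approx v[0] + v[1] + v[2] = [2, 2]
print(prune_tokens(P, keep=1))   # [2]
```

Both operators use the same matrix `P`. They differ only in which index is summed over. Self-attention reads along a row, so each query collects from the keys it attends to. Reversed attention keeps the normalization on the child side but delivers each child's message to the columns, meaning its parents. Because every child's outgoing distribution sums to 1, the parent scores always sum to the number of tokens.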

翻译:人类拥有一个多才多艺的机制,可以提取我们的视觉世界的结构化表示。当观察图像时,我们可以将其分解为实体及其部分,以及获取它们之间的依赖关系。为了模仿这种能力,我们提出了视觉依赖变形器(DependencyViT),可以在没有标签的情况下引出视觉依赖关系。我们通过一种新颖的神经算子——被称为反向注意力实现了这一点,它能够自然地捕捉到图像块之间的远距离可视依赖关系。具体而言,我们将它的设计形式化为一个依赖图,其中反向注意力中的子代仅能够关注其父代令牌,并按照规范化的概率分布发送信息,而非通过传统的自我注意聚集信息。通过这样的设计,层叠自然而然地从反向注意力层中崛起,并且从叶节点向根节点逐渐诱导出一个依赖树。DependencyViT提供了若干令人称道的优点。第一,图像中的实体及其部分可以由不同的子树表示,从而实现了依赖关系的部分分配;第二,可以实现动态的视觉池化。通过对很少发送信息的叶节点进行剪枝,可以减少计算和内存开销,而不会影响模型的性能。基于此,我们提出减轻计算和内存负担的轻量级DependencyViT-Lite。第三,DependencyViT在Imagenet的自监督和弱监督预训练范式上表现良好,并在8个数据集和5个任务上展示了其有效性,例如无监督的部分和显着性分割,识别和检测。