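[PaoPao One Minute] Fast Depth Estimation from Monocular Small-Motion Video (3dv-20)

One minute a day to read through papers from the top robotics conferences.

Title: Monocular Depth from Small Motion Video Accelerated

Authors: Christopher Ham, Ming-Fang Chang, Simon Lucey, Surya Singh

Source: 3DV 2017 (International Conference on 3D Vision)

Narrator: Gezi

Translated and compiled by: Yan Qingsong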

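Individuals are welcome to share this post on WeChat Moments. Other organizations or media accounts that wish to republish it should leave a message on our official account to request authorization.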

Summary

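To reconstruct dense depth quickly from short-baseline video sequences, this paper proposes a new four-stage reconstruction pipeline. Most current smartphones can record high frame rate (HFR) video, which has smaller inter-frame motion and less motion blur; the drawback is that the sensitivity raised to compensate for the faster shutter adds image noise. This work targets exactly this kind of video. Although a short baseline means larger uncertainty in the depth estimates, it also makes point tracking easier and the brightness constancy assumption more reliable. The method uses direct photometric bundle adjustment with sub-pixel precision, which allows an accurate camera pose to be estimated from fewer tracked points, and the authors show that considering pixel intensities across the entire video makes this step more robust to image noise. Dense depth maps are then computed quickly with a PatchMatch-inspired algorithm instead of the exhaustive plane sweeping commonly used for short-baseline video. Rather than a stereo matching error, the algorithm minimizes the variance of intensities over multiple frames, using a robust mean estimate.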
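The summary describes scoring depth with a robust mean over many frames instead of a two-view stereo matching error. The Python sketch below illustrates that idea for a single pixel: given the pixel's intensities sampled in every frame at the positions implied by one depth hypothesis, it computes a robust mean and the weighted variance around it. It assumes the sampling has already been done, and it uses a Huber M-estimator (iteratively reweighted least squares) as the robust mean; the summary does not say which estimator the authors use, so that choice, the threshold `delta`, and the names `robust_mean` and `robust_variance_cost` are illustrative assumptions.

```python
from typing import Sequence


def huber_weight(r: float, delta: float) -> float:
    """IRLS weight for the Huber loss: 1 inside the threshold, delta/|r| outside."""
    a = abs(r)
    return 1.0 if a <= delta else delta / a


def robust_mean(values: Sequence[float], delta: float = 0.1, iters: int = 10) -> float:
    """Huber M-estimate of location via iteratively reweighted least squares.

    Starts from the median so that a few outlier frames cannot drag the start point.
    """
    xs = sorted(values)
    n = len(xs)
    mu = xs[n // 2] if n % 2 else 0.5 * (xs[n // 2 - 1] + xs[n // 2])
    for _ in range(iters):
        ws = [huber_weight(x - mu, delta) for x in values]
        mu = sum(w * x for w, x in zip(ws, values)) / sum(ws)
    return mu


def robust_variance_cost(values: Sequence[float], delta: float = 0.1) -> float:
    """Weighted variance of per-frame intensities about their robust mean.

    values: intensities of one reference pixel sampled in every frame at the
    position implied by a depth hypothesis. Under brightness constancy a correct
    hypothesis makes them (nearly) equal, so the cost is close to zero.
    """
    mu = robust_mean(values, delta)
    ws = [huber_weight(x - mu, delta) for x in values]
    return sum(w * (x - mu) ** 2 for w, x in zip(ws, values)) / sum(ws)


# Hypothetical samples for one pixel across 8 frames (intensities in [0, 1]).
consistent = [0.50, 0.51, 0.49, 0.50, 0.50, 0.51, 0.49, 0.50]   # good hypothesis
with_outlier = consistent[:-1] + [0.95]                         # one noisy frame
wrong_depth = [0.50, 0.62, 0.31, 0.77, 0.45, 0.20, 0.68, 0.55]  # bad hypothesis

for name, vals in [("consistent", consistent), ("outlier", with_outlier), ("wrong", wrong_depth)]:
    print(name, round(robust_variance_cost(vals), 5))
```

The down-weighting means a single noisy frame raises the cost far less than it would raise a plain variance, while a wrong depth hypothesis, which scatters the samples across many frames, is still penalized clearly.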

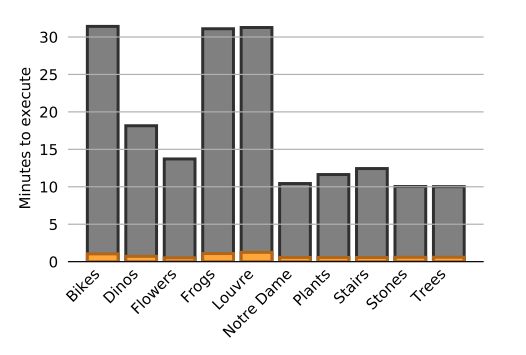

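In the paper, the authors present a detailed quantitative comparison between their algorithm and the most recent small-baseline method, by Ha et al., covering speed and quality across a range of baselines and sequence sizes, using a synthesized photorealistic dataset. The results suggest that the proposed algorithm copes with a wider range of baselines and sequence sizes. The authors also compare qualitative results on real small-motion clips from Ha et al. as well as their own, and find that their method produces dense depth maps of similar or better quality at least 10 times faster.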

In the figure below, orange shows the running time of the authors' algorithm and gray shows that of Ha et al.'s algorithm.

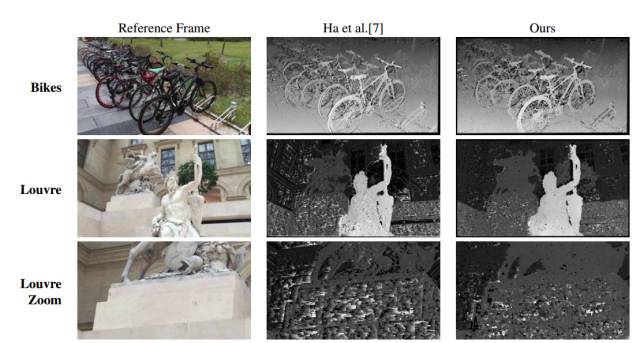

The figure below compares the results of the authors' algorithm with those of Ha et al.'s algorithm.

Abstract

We propose a novel four-stage pipeline for densely reconstructing depth from video sequences with small baselines, with the goal of being fast. This work is particularly motivated by the high frame rate (HFR) ability of many modern smartphones, which leads to smaller inter-frame motion and less motion blur, but with more image noise as the sensitivity is adjusted to compensate for the increased shutter speed. While small baselines lead to larger uncertainties in depth estimation, they make point tracking easier and the assumption of brightness constancy holds more closely. In our pipeline we make use of the sub-pixel precision of direct photometric bundle adjustment to reduce the number of tracked points required to estimate an accurate pose. We show that considering pixel intensities across the entire video makes the estimation more robust to image noise. Instead of using the exhaustive plane sweeping approach of existing small-baseline methods, dense depth maps are calculated efficiently using an algorithm inspired by PatchMatch [2, 3]. Instead of a stereo matching error, our algorithm minimizes the variance over multiple frames with a robust mean estimation.
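The PatchMatch-inspired depth search can be illustrated with a generic PatchMatch loop on a single scanline: random initialization, propagation from the neighbor already visited in the current sweep, and random search around the current best with an exponentially shrinking radius. This is a textbook-style sketch under stated assumptions, not the authors' algorithm. The 1D setting, the function name `patchmatch_1d`, its parameters, and the toy cost (absolute distance to a hypothetical ground-truth depth) are all illustrative; in practice the cost would be a photometric one such as a multi-frame variance evaluated at each hypothesis.

```python
import random
from typing import Callable, List


def patchmatch_1d(
    cost: Callable[[int, float], float],
    n: int,
    lo: float,
    hi: float,
    iters: int = 6,
    seed: int = 0,
) -> List[float]:
    """PatchMatch-style search for one depth hypothesis per pixel on a scanline.

    cost(x, d) scores hypothesis d at pixel x (lower is better); hypotheses stay in [lo, hi].
    """
    rng = random.Random(seed)
    # 1. Random initialization: every pixel gets a uniformly drawn hypothesis.
    depth = [rng.uniform(lo, hi) for _ in range(n)]
    best = [cost(x, depth[x]) for x in range(n)]

    def try_hypothesis(x: int, d: float) -> None:
        # Keep d only if it lowers this pixel's cost.
        c = cost(x, d)
        if c < best[x]:
            depth[x], best[x] = d, c

    for it in range(iters):
        # Alternate the sweep direction so good hypotheses spread both ways.
        forward = it % 2 == 0
        order = range(n) if forward else range(n - 1, -1, -1)
        step = -1 if forward else 1
        for x in order:
            # 2. Propagation: try the neighbor already visited in this sweep.
            nb = x + step
            if 0 <= nb < n:
                try_hypothesis(x, depth[nb])
            # 3. Random search: sample around the current best, halving the radius.
            radius = (hi - lo) / 2
            while radius > 1e-3 * (hi - lo):
                d = depth[x] + rng.uniform(-radius, radius)
                try_hypothesis(x, min(hi, max(lo, d)))
                radius /= 2
    return depth


# Hypothetical ground truth: two fronto-parallel surfaces on a 40-pixel scanline.
truth = [0.3 if x < 20 else 0.8 for x in range(40)]
est = patchmatch_1d(lambda x, d: abs(d - truth[x]), n=40, lo=0.0, hi=1.0)
print("mean abs error:", sum(abs(e - t) for e, t in zip(est, truth)) / len(truth))
```

Compared with exhaustive plane sweeping, which evaluates every pixel at every depth plane, this kind of search evaluates only a handful of hypotheses per pixel per iteration and relies on spatial coherence to spread good ones, which is where the speed-up of a PatchMatch-style approach comes from.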

We present a detailed, quantitative comparison between our method and the most recent small baseline method from Ha et al. [7] for speed and quality across a spectrum of baselines and sequence sizes using a synthesized, photorealistic dataset. The results suggest that our method has the ability to cope with a wider range of baselines and sequence sizes. We also compare qualitative results on real small motion clips from Ha et al. [7] in addition to our own, and show that our method outputs dense depth maps of similar or better quality and at least 10 times faster.

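If you are interested in this paper and would like to download the full text, follow the [PaoPao Robot SLAM] WeChat official account (paopaorobot_slam).

Reply with the keyword "3dv-20" in the [PaoPao Robot SLAM] official account (paopaorobot_slam) to get the download link for this paper.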

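All original content from PaoPao Robot SLAM is produced with great effort by members of PaoPao Robot; please respect our work. When republishing, you must credit the [PaoPao Robot SLAM] WeChat official account as the source, or we will pursue infringement. You are also welcome to share our posts on your WeChat Moments so that more people can enter the field of SLAM. Let us work together to advance SLAM in China!

For business cooperation and republishing, please contact liufuqiang_robot@hotmail.com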