[PaoPao One Minute] Ultimate SLAM? Combining Event Cameras, RGB Frames, and IMU for Robust Visual SLAM in High-Dynamic-Range, High-Speed Scenarios

One minute a day, taking you through papers from the top robotics conferences

Title: Ultimate SLAM? Combining Events, Images, and IMU for Robust Visual SLAM in HDR and High-Speed Scenarios

Authors: A. Rosinol Vidal, H. Rebecq, T. Horstschaefer, D. Scaramuzza

Source: IEEE Robotics and Automation Letters (RA-L), 2018.

Narrator: 堃堃

Compiled by: 蔡纪源

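Individuals are welcome to share this post on WeChat Moments. Other organizations or media outlets that wish to repost it should leave a message with the account to request authorization.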

Summary

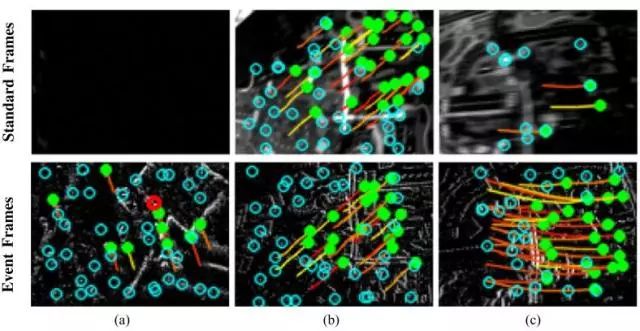

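In contrast to event cameras, standard cameras provide rich, real-time intensity information about the environment most of the time (at low speed and in good lighting), but they often fail in difficult conditions such as fast motion, high dynamic range, or low light. Figure 1 below compares the feature-tracking results of a standard camera and an event camera in three scenarios of differing difficulty.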

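Figure 1: Feature tracking with a standard camera versus an event camera in different conditions: (a) low light, (b) good lighting and moderate speed, (c) motion blur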

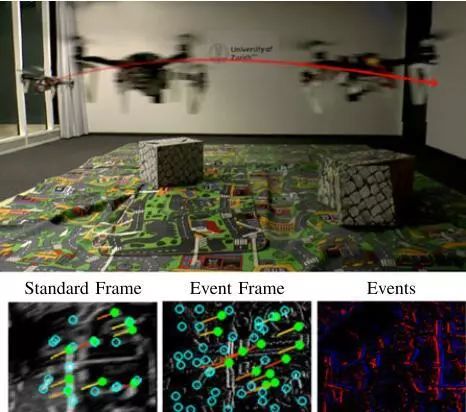

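In this paper, the authors combine the complementary strengths of these two sensors and present the first state estimation method that fuses events, standard frames, and inertial measurements in a tightly coupled manner. They also use a quadrotor as a validation platform. The results show that the proposed method enables autonomous flight in scenarios where traditional visual-inertial odometry fails, such as low-light environments and high-dynamic-range scenes.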

Experiment videos: http://rpg.ifi.uzh.ch/ultimateslam.html

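Figure 2: The proposed algorithm running onboard a quadrotor

Bottom left: standard frame. Bottom center: event frame. Bottom right: events (blue: positive events, red: negative events).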

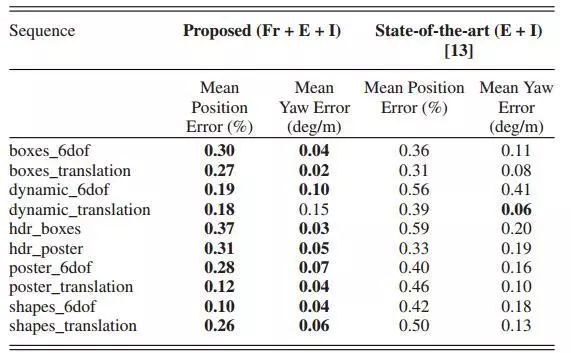

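Finally, experiments on the publicly available Event Camera Dataset show that the hybrid method improves accuracy by 130% over event-only state estimation pipelines and by 85% over standard visual-inertial systems, while remaining computationally tractable.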

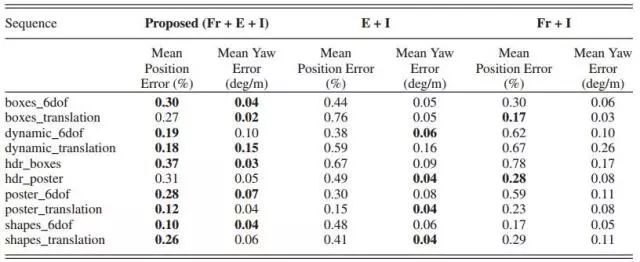

Figure 3: Accuracy of the proposed method with different sensor combinations

Standard frames (FR), events (E), and IMU (I)

Figure 4: Comparison of the proposed method with state-of-the-art methods

Abstract

Event cameras are bioinspired vision sensors that output pixel-level brightness changes instead of standard intensity frames. These cameras do not suffer from motion blur and have a very high dynamic range, which enables them to provide reliable visual information during high-speed motions or in scenes characterized by high dynamic range. However, event cameras output only little information when the amount of motion is limited, such as in the case of almost still motion. Conversely, standard cameras provide instant and rich information about the environment most of the time (in low-speed and good lighting scenarios), but they fail severely in case of fast motions, or difficult lighting such as high dynamic range or low-light scenes. In this letter, we present the first state estimation pipeline that leverages the complementary advantages of these two sensors by fusing in a tightly coupled manner events, standard frames, and inertial measurements. We show on the publicly available Event Camera Dataset that our hybrid pipeline leads to an accuracy improvement of 130% over event-only pipelines, and 85% over standard-frames-only visual-inertial systems, while still being computationally tractable. Furthermore, we use our pipeline to demonstrate, to the best of our knowledge, the first autonomous quadrotor flight using an event camera for state estimation, unlocking flight scenarios that were not reachable with traditional visual-inertial odometry, such as low-light environments and high dynamic range scenes. Videos of the experiments:

http://rpg.ifi.uzh.ch/ultimateslam.html
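The abstract's description of events as pixel-level brightness changes can be illustrated with a minimal sketch. It is not the authors' pipeline: it assumes the commonly used idealized model in which a pixel fires an event when its log intensity changes by at least a contrast threshold, and it compares two whole frames rather than modeling the asynchronous, timestamped output of a real sensor. The function name `generate_events`, the `threshold` and `eps` values, and the toy frames are all hypothetical.

```python
import math


def generate_events(prev_frame, curr_frame, threshold=0.2, eps=1e-3):
    """Return (x, y, polarity) tuples for pixels whose log-intensity
    change between two frames is at least `threshold`.

    Idealized model for illustration only; `threshold` and `eps` are
    assumed values, not parameters taken from the paper.
    """
    events = []
    for y, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for x, (p, c) in enumerate(zip(prev_row, curr_row)):
            # eps avoids log(0) for completely dark pixels
            delta = math.log(c + eps) - math.log(p + eps)
            if abs(delta) >= threshold:
                # +1: brightness increased, -1: brightness decreased
                events.append((x, y, 1 if delta > 0 else -1))
    return events


# A scene that does not change produces no events at all.
static_scene = [[0.5, 0.5, 0.5],
                [0.5, 0.5, 0.5]]
still_events = generate_events(static_scene, static_scene)

# A dark/bright edge moving one pixel to the right darkens column 1 only.
edge_before = [[0.1, 0.9, 0.9],
               [0.1, 0.9, 0.9]]
edge_after = [[0.1, 0.1, 0.9],
              [0.1, 0.1, 0.9]]
motion_events = generate_events(edge_before, edge_after)

print(len(still_events))
print(motion_events)
```

In this toy setup the static scene yields an empty event list, which mirrors the limitation noted in the abstract: with almost no motion an event camera provides very little information, and this is where standard frames contribute.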

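If you are interested in this paper and would like to download the full text, follow the 【泡泡机器人SLAM】 (PaoPao Robot SLAM) WeChat official account.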


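Welcome to the PaoPao forum, where experts can answer any questions you have about SLAM.

Whether you have a question to ask or want to browse threads and answer others, the PaoPao forum welcomes you!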

PaoPao website: www.paopaorobot.org

PaoPao forum: http://paopaorobot.org/forums/

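All original content from 泡泡机器人SLAM (PaoPao Robot SLAM) is produced by its members with considerable effort, and we ask that you respect that work. When reposting, be sure to credit the 【泡泡机器人SLAM】 WeChat official account; infringement will be pursued. We also welcome you to share our posts on your own WeChat Moments so that more people can enter the field of SLAM. Let us work together to advance SLAM in China!

For business cooperation and reposting, contact liufuqiang_robot@hotmail.com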