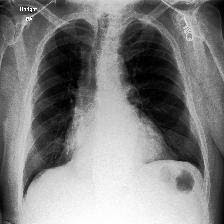

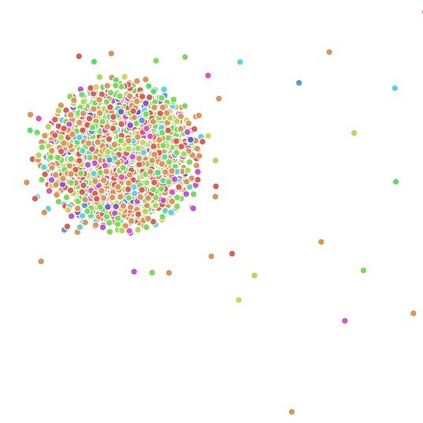

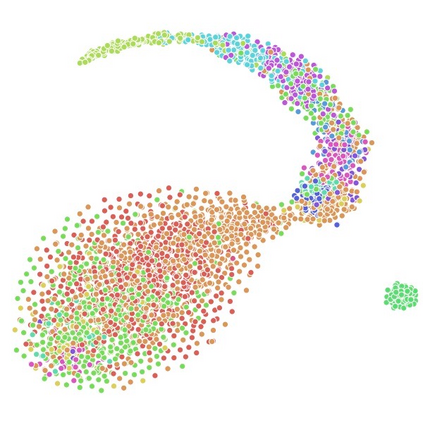

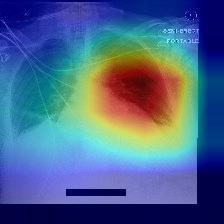

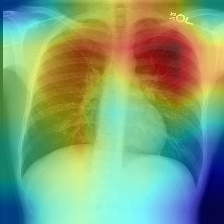

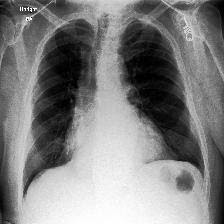

Disease classification relying solely on imaging data attracts great interest in medical image analysis. Current models could be further improved, however, by also employing Electronic Health Records (EHRs), which contain rich information on patients and findings from clinicians. Incorporating this information into disease classification is challenging because EHRs depend heavily on clinician input, which limits the possibility of automated diagnosis. In this paper, we propose *variational knowledge distillation* (VKD), a new probabilistic inference framework for X-ray-based disease classification that leverages knowledge from EHRs. Specifically, we introduce a conditional latent variable model in which the latent representation of the X-ray image is inferred with a variational posterior conditioned on the associated EHR text. By doing so, the model learns during training to extract the visual features relevant to the disease, and can therefore classify unseen patients more accurately at inference time based solely on their X-ray scans. We demonstrate the effectiveness of our method on three public benchmark datasets with paired X-ray images and EHRs. The results show that the proposed variational knowledge distillation consistently improves the performance of medical image classification and significantly surpasses current methods.
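The abstract does not state the training objective. One standard way to train a conditional latent variable model of this kind, given here as an illustration and not necessarily the paper's exact formulation, is to maximize a lower bound on $\log p(y \mid x)$ in which the variational posterior sees both the X-ray $x$ and the EHR text $t$, while the prior sees only $x$:

$$\log p(y \mid x) \ge \mathbb{E}_{q_\phi(z \mid x, t)}\big[\log p_\theta(y \mid z)\big] - D_{\mathrm{KL}}\big(q_\phi(z \mid x, t)\,\|\,p_\psi(z \mid x)\big)$$

In this formulation the KL term pulls the image-only prior $p_\psi(z \mid x)$ toward the text-informed posterior $q_\phi(z \mid x, t)$, so the prior can be used on its own at test time, when no EHR is available. The Python sketch below evaluates a single-sample estimate of the negative bound. It assumes diagonal Gaussian prior and posterior, a linear softmax classifier for $p_\theta(y \mid z)$, and prediction from the prior mean; the function names and the hard-coded means and log-variances (standing in for encoder outputs) are hypothetical.

```python
import math
import random


def kl_diag_gaussians(mu_q, logvar_q, mu_p, logvar_p):
    """KL(q || p) between diagonal Gaussians given means and log-variances."""
    kl = 0.0
    for mq, lq, mp, lp in zip(mu_q, logvar_q, mu_p, logvar_p):
        kl += 0.5 * (lp - lq + (math.exp(lq) + (mq - mp) ** 2) / math.exp(lp) - 1.0)
    return kl


def sample_latent(mu, logvar, rng):
    """Reparameterized sample z = mu + sigma * eps with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def logits_from_latent(z, weights, bias):
    """Hypothetical linear classifier: one logit per class."""
    return [sum(w * zi for w, zi in zip(row, z)) + b for row, b in zip(weights, bias)]


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_norm = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_norm for v in logits]


def negative_elbo(label, posterior, prior, weights, bias, rng):
    """Single-sample estimate of -ELBO: classification NLL plus KL(q || p)."""
    mu_q, logvar_q = posterior  # q(z | x, t): image + EHR text, training only
    mu_p, logvar_p = prior      # p(z | x): image only, also used at test time
    z = sample_latent(mu_q, logvar_q, rng)
    nll = -log_softmax(logits_from_latent(z, weights, bias))[label]
    return nll + kl_diag_gaussians(mu_q, logvar_q, mu_p, logvar_p)


def predict(prior, weights, bias):
    """Test-time prediction from the image-only prior mean (no EHR needed)."""
    mu_p, _ = prior
    logits = logits_from_latent(mu_p, weights, bias)
    return max(range(len(logits)), key=lambda k: logits[k])


# Toy usage with made-up encoder outputs: 2 classes, 2-d latent.
rng = random.Random(0)
weights = [[1.0, -1.0], [-1.0, 1.0]]
bias = [0.0, 0.0]
prior = ([0.5, -0.5], [0.0, 0.0])
posterior = ([1.0, -1.0], [-1.0, -1.0])
loss = negative_elbo(0, posterior, prior, weights, bias, rng)
print(predict(prior, weights, bias))  # 0
```

With these toy values the prior mean yields logits `[1.0, -1.0]`, so the image-only path predicts class 0; in a real model `posterior` and `prior` would come from text-and-image and image-only encoders trained jointly with the classifier.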
