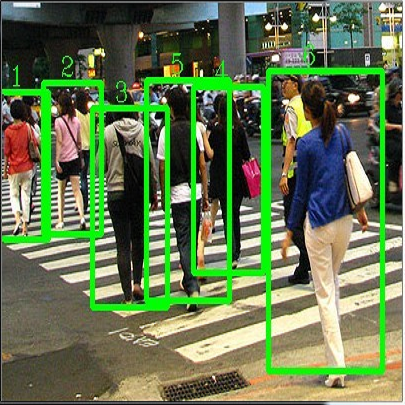

Cross-domain object detection is more challenging than object classification because an image contains multiple objects and the location of each object is unknown in the unlabeled target domain. As a result, when the features of different objects are adapted to enhance the transferability of the detector, foreground and background features are easily confused, which may hurt the detector's discriminability. Moreover, previous methods focus on category adaptation but ignore another important part of object detection, namely the adaptation of bounding box regression. To this end, we propose D-adapt (Decoupled Adaptation), which decouples adversarial adaptation from the training of the detector. We also fill the gap in regression domain adaptation for object detection by introducing a bounding box adaptor. Experiments show that D-adapt achieves state-of-the-art results on four cross-domain object detection tasks and, in particular, yields 17% and 21% relative improvement on the benchmark datasets Clipart1k and Comic2k, respectively.
