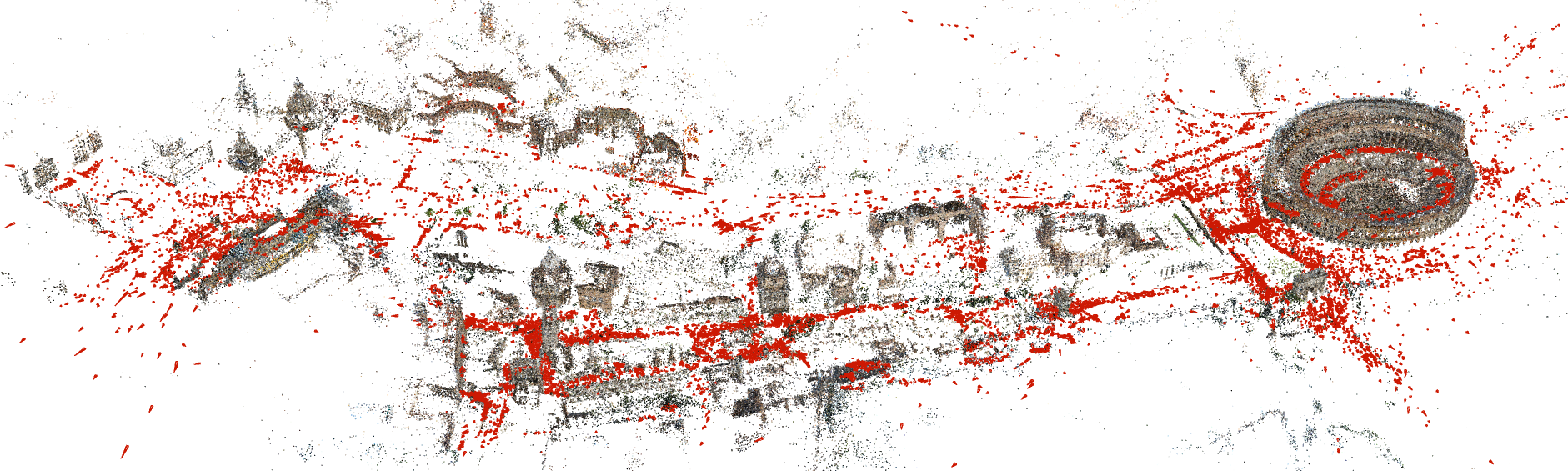

Visual localization and mapping is the key technology underlying the majority of Mixed Reality and robotics systems. Most state-of-the-art approaches rely on local features to establish correspondences between images. In this paper, we present three novel scenarios for localization and mapping which require the continuous update of feature representations and the ability to match across different feature types. While localization and mapping is a fundamental computer vision problem, the traditional setup treats it as a single-shot process using the same local image features throughout the evolution of a map. This assumes the whole process is repeated from scratch whenever the underlying features are changed. However, reiterating it is typically impossible in practice, because raw images are often not stored and re-building the maps could lead to loss of the attached digital content. To overcome the limitations of current approaches, we present the first principled solution to cross-descriptor localization and mapping. Our data-driven approach is agnostic to the feature descriptor type, has low computational requirements, and scales linearly with the number of description algorithms. Extensive experiments demonstrate the effectiveness of our approach on state-of-the-art benchmarks for a variety of handcrafted and learned features.

翻译:视觉定位和绘图是大多数混合现实和机器人系统的主要基础技术,大多数最先进的方法都依靠当地特征来建立图像之间的对应关系。在本文件中,我们提出了三种新的本地化和绘图方案,要求不断更新特征的表达方式和不同特征类型之间的匹配能力。虽然本地化和绘图是一个基本的计算机视觉问题,但传统设置在地图演进过程中将它视为一个单发过程,使用相同的本地图像特征。这假定整个过程在基本特征发生变化时从零开始重复。然而,在实际中,通常不可能重复整个过程,因为原始图像往往没有存储,而重新制作地图可能导致附带数字内容的丢失。为了克服当前方法的局限性,我们提出了跨描述性本地化和绘图的第一个原则性解决办法。我们的数据驱动方法对特征描述型类型具有指导性,低的计算要求,与描述算法的数量具有线性。广泛的实验表明我们对于各种手工艺特征和所学特征的状态基准方法的有效性。