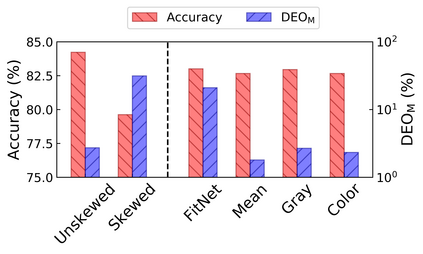

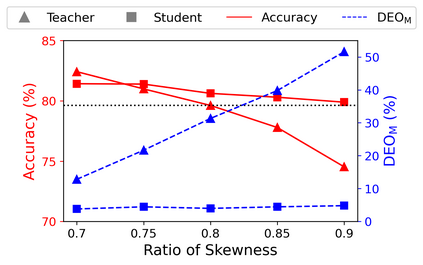

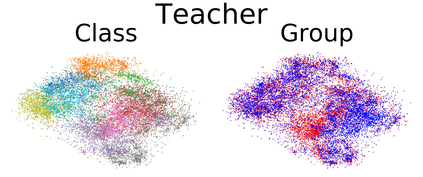

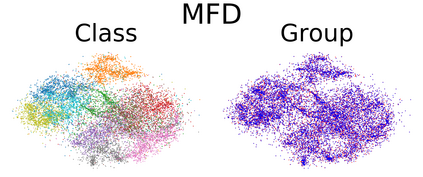

Fairness is becoming an increasingly crucial issue for computer vision, especially in human-related decision systems. However, achieving algorithmic fairness, which requires a model to produce non-discriminatory outcomes for protected groups, remains an unresolved problem. In this paper, we devise a systematic approach, dubbed MMD-based Fair Distillation (MFD), that reduces algorithmic bias via feature distillation for visual recognition tasks. While distillation has been widely used to improve prediction accuracy, to the best of our knowledge there has been no explicit work that also tries to improve fairness via distillation. Furthermore, we give a theoretical justification of MFD in terms of its effect on knowledge distillation and fairness. Through extensive experiments on both synthetic and real-world face datasets, we show that MFD significantly mitigates bias against specific minorities without any loss of accuracy.
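For readers unfamiliar with the quantity behind the name, MMD stands for maximum mean discrepancy, a kernel-based distance between two sets of samples. The sketch below is a generic, biased estimate of squared MMD with a Gaussian kernel, written in plain Python. It is not the MFD loss: the abstract does not say which kernel is used, how teacher and student features are paired, or how group membership enters the objective. The function names and the `sigma` bandwidth parameter are illustrative assumptions.

```python
import math


def rbf_kernel(x, y, sigma=1.0):
    """Gaussian (RBF) kernel between two equal-length feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def mean_kernel(xs, ys, sigma):
    """Average kernel value over all pairs (x, y) with x in xs and y in ys."""
    total = sum(rbf_kernel(x, y, sigma) for x in xs for y in ys)
    return total / (len(xs) * len(ys))


def mmd_squared(xs, ys, sigma=1.0):
    """Biased (V-statistic) estimate of squared MMD between two samples.

    xs and ys are lists of feature vectors. In a distillation setting they
    could be teacher and student features, but that pairing is an assumption
    here, not something the abstract specifies.
    """
    return (
        mean_kernel(xs, xs, sigma)
        + mean_kernel(ys, ys, sigma)
        - 2.0 * mean_kernel(xs, ys, sigma)
    )


# Hypothetical toy features: two well-separated clusters.
teacher_feats = [[0.0, 0.0], [1.0, 0.0]]
student_feats = [[5.0, 5.0], [6.0, 5.0]]

same = mmd_squared(teacher_feats, teacher_feats)
different = mmd_squared(teacher_feats, student_feats)
```

The estimate is zero (up to rounding) when both samples are identical and grows as the two feature distributions move apart, which is what makes it usable as a matching penalty between two feature sets.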
