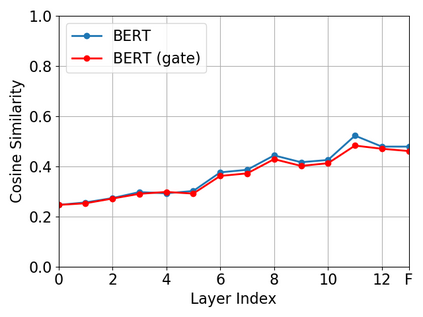

Recently, the over-smoothing phenomenon in Transformer-based models has been observed in both the vision and language fields. However, no existing work has investigated the main cause of this phenomenon in depth. In this work, we analyze the over-smoothing problem from the perspective of graphs, where the problem was first discovered and explored. Intuitively, the self-attention matrix can be seen as the normalized adjacency matrix of a corresponding graph. Based on this connection, we provide theoretical analysis and find that layer normalization plays a key role in the over-smoothing issue of Transformer-based models. Specifically, if the standard deviation of layer normalization is sufficiently large, the output of Transformer stacks will converge to a specific low-rank subspace, resulting in over-smoothing. To alleviate the over-smoothing problem, we consider hierarchical fusion strategies, which adaptively combine the representations from different layers to make the output more diverse. Extensive experimental results on various datasets illustrate the effectiveness of our fusion method.
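
The graph view of self-attention can be illustrated with a small NumPy sketch. It is a toy under stated assumptions, not the method analyzed in this work: a single attention matrix is built from random projections (`Wq`, `Wk` are hypothetical weights), held fixed, and applied repeatedly with no residual connections, feed-forward blocks, or layer normalization. The uniform average over layers at the end is only an illustrative stand-in for the adaptive hierarchical fusion mentioned above.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_matrix(H, Wq, Wk):
    # Scaled dot-product attention weights; each row sums to 1, so the matrix
    # can be read as a row-normalized adjacency matrix of a fully connected
    # weighted graph over the tokens.
    d = Wq.shape[1]
    scores = (H @ Wq) @ (H @ Wk).T / np.sqrt(d)
    return softmax(scores, axis=-1)

def mean_pairwise_cosine(H):
    # Average cosine similarity over all distinct token pairs.
    Hn = H / np.linalg.norm(H, axis=1, keepdims=True)
    S = Hn @ Hn.T
    n = H.shape[0]
    return (S.sum() - np.trace(S)) / (n * (n - 1))

rng = np.random.default_rng(0)
n_tokens, dim = 8, 16                      # assumed toy sizes
H0 = rng.normal(size=(n_tokens, dim))      # random token representations
Wq = rng.normal(size=(dim, dim)) / np.sqrt(dim)   # hypothetical query weights
Wk = rng.normal(size=(dim, dim)) / np.sqrt(dim)   # hypothetical key weights

A = attention_matrix(H0, Wq, Wk)
row_sums = A.sum(axis=1)

# Toy propagation: reuse the same A at every "layer" (an assumption made
# purely for illustration) and track how similar the tokens become.
layers = [H0]
for _ in range(12):
    layers.append(A @ layers[-1])
sims = [mean_pairwise_cosine(H) for H in layers]

# Illustrative stand-in for layer fusion: a uniform average of all layers.
fused = np.mean(layers, axis=0)
fused_sim = mean_pairwise_cosine(fused)

print("row sums of A:", np.round(row_sums, 6))
print("mean pairwise cosine per layer:", np.round(sims, 4))
print("mean pairwise cosine after uniform fusion:", round(float(fused_sim), 4))
```

In this toy setting the token representations drift toward a common direction as the same row-stochastic matrix is applied again and again, which is the graph-style smoothing effect. Mixing in earlier layers keeps the fused tokens somewhat less similar than the last layer alone.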
